Towards Interpreting BERT for Reading Comprehension Based QA

Sahana Ramnath, Preksha Nema, Deep Sahni, Mitesh M. Khapra

Interpretability and Analysis of Models for NLP (Short Paper)

Abstract:

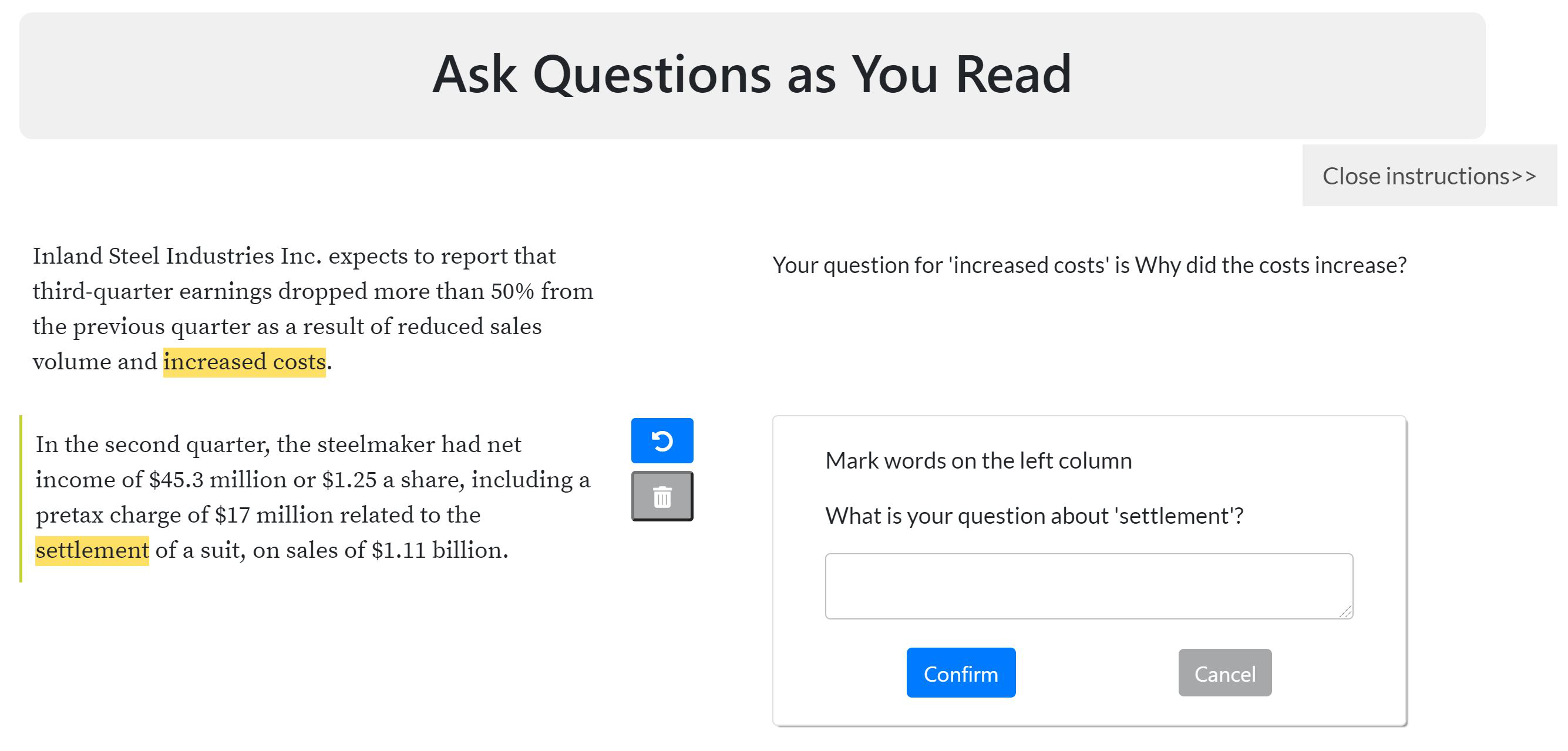

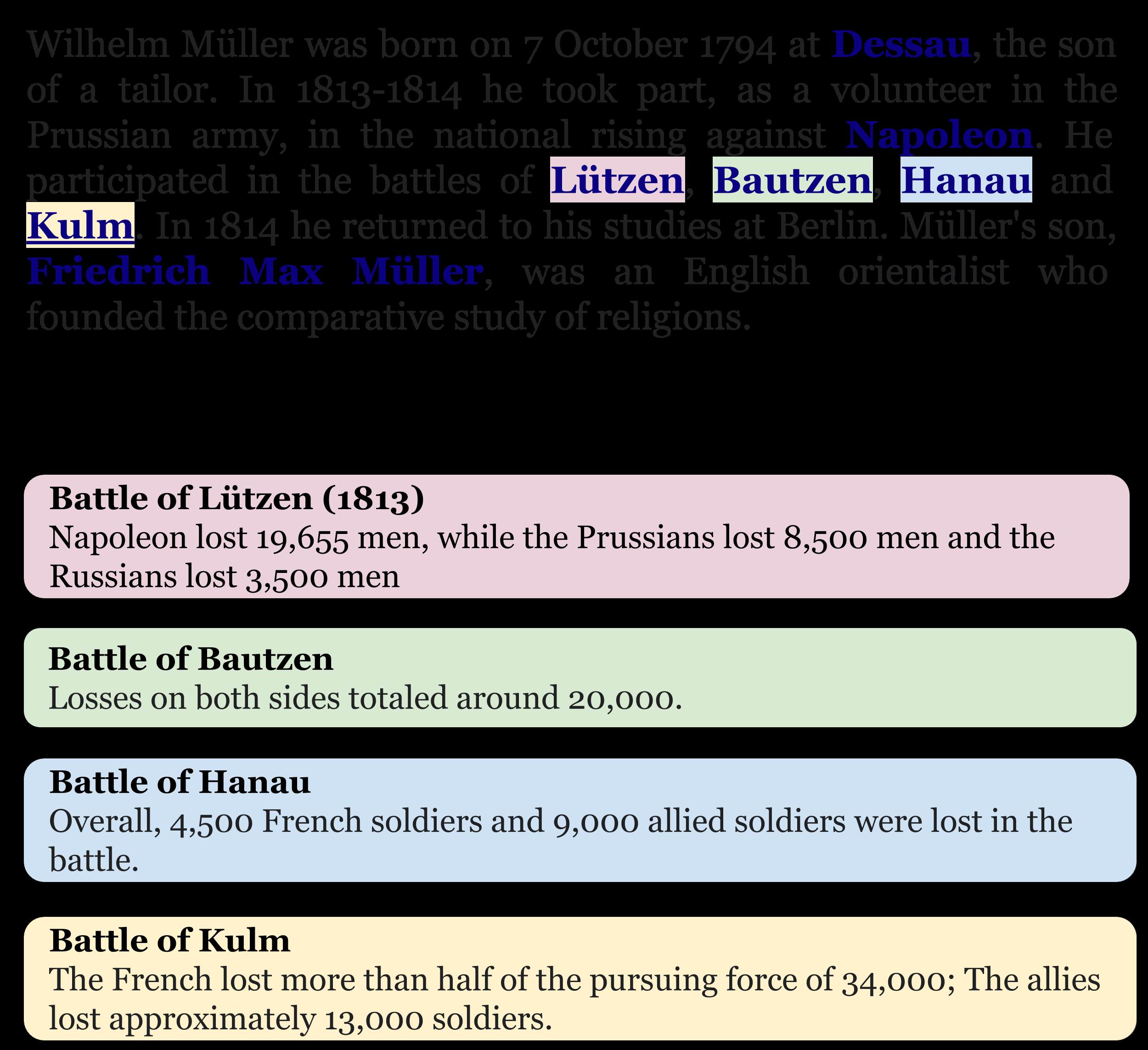

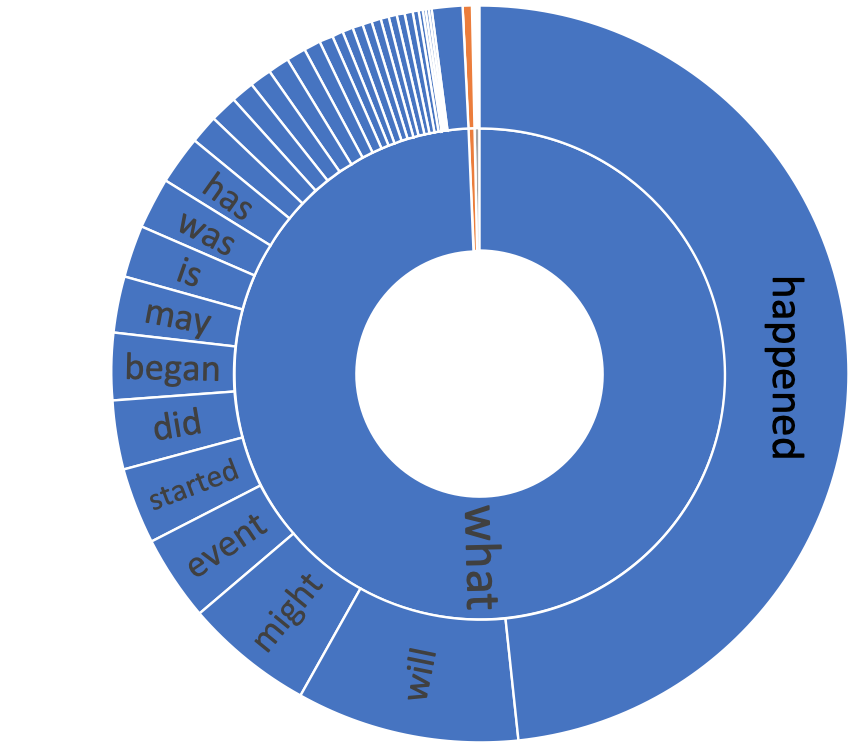

BERT and its variants have achieved state-of-the-art performance in various NLP tasks. Since then, several works have analyzed the linguistic information captured by BERT. However, existing work offers little insight into how BERT achieves near human-level performance on Reading Comprehension based Question Answering (RCQA). In this work, we attempt to interpret BERT for RCQA. Since BERT layers do not have predefined roles, we define a layer's role or functionality using Integrated Gradients. Based on the defined roles, we perform a preliminary analysis across all layers. We observe that the initial layers focus on query-passage interaction, whereas later layers focus more on contextual understanding and on enhancing the answer prediction. Specifically, for quantifier questions (how much/how many), we notice that BERT focuses on confusing words (i.e., other numerical quantities in the passage) in the later layers, but still manages to predict the answer correctly. The fine-tuning and analysis scripts will be publicly available at https://github.com/iitmnlp/BERT-Analysis-RCQA.
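
For readers unfamiliar with Integrated Gradients, the following is a minimal sketch of the general attribution technique on a toy differentiable function. It is not the authors' per-layer procedure for BERT. The sigmoid model, the weights `w`, the all-zeros baseline, and the names `integrated_gradients`, `f`, and `grad_f` are assumptions made purely for illustration.

```python
import numpy as np

def integrated_gradients(grad_fn, x, baseline, steps=50):
    """Approximate Integrated Gradients with a midpoint Riemann sum.

    grad_fn: function returning the gradient of the model output w.r.t. its input.
    x, baseline: 1-D arrays of the same shape.
    """
    x = np.asarray(x, dtype=float)
    baseline = np.asarray(baseline, dtype=float)
    # Midpoints of `steps` equal sub-intervals of [0, 1].
    alphas = (np.arange(steps) + 0.5) / steps
    # Gradient at each point on the straight line from baseline to x.
    grads = np.stack([grad_fn(baseline + a * (x - baseline)) for a in alphas])
    # Average gradient along the path, scaled by the input difference.
    return (x - baseline) * grads.mean(axis=0)

# Toy model (an assumption for illustration): f(x) = sigmoid(w . x)
w = np.array([2.0, -1.0, 0.5])

def f(x):
    return 1.0 / (1.0 + np.exp(-np.dot(w, x)))

def grad_f(x):
    s = f(x)
    return s * (1.0 - s) * w  # analytic gradient of the sigmoid model

x = np.array([1.0, 2.0, 3.0])
baseline = np.zeros_like(x)  # hypothetical all-zeros baseline

attributions = integrated_gradients(grad_f, x, baseline, steps=200)

# Completeness check: attributions should sum to f(x) - f(baseline).
completeness_gap = abs(attributions.sum() - (f(x) - f(baseline)))
```

In this sketch the attributions sum (up to numerical error) to the difference between the model output at the input and at the baseline, which is the completeness property that makes the scores usable as importance estimates. Applying the same idea to a layer of BERT would require gradients of the answer-span prediction with respect to that layer's input, which an autodiff framework would supply in place of `grad_f`.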

Similar Papers

A Simple and Effective Model for Answering Multi-span Questions

Elad Segal, Avia Efrat, Mor Shoham, Amir Globerson, Jonathan Berant

IIRC: A Dataset of Incomplete Information Reading Comprehension Questions

James Ferguson, Matt Gardner, Hannaneh Hajishirzi, Tushar Khot, Pradeep Dasigi

TORQUE: A Reading Comprehension Dataset of Temporal Ordering Questions

Qiang Ning, Hao Wu, Rujun Han, Nanyun Peng, Matt Gardner, Dan Roth

Inquisitive Question Generation for High Level Text Comprehension

Wei-Jen Ko, Te-yuan Chen, Yiyan Huang, Greg Durrett, Junyi Jessy Li