Inquisitive Question Generation for High Level Text Comprehension

Wei-Jen Ko, Te-yuan Chen, Yiyan Huang, Greg Durrett, Junyi Jessy Li

Language Generation Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

Inquisitive probing questions come naturally to humans in a variety of settings, but is a challenging task for automatic systems. One natural type of question to ask tries to fill a gap in knowledge during text comprehension, like reading a news article: we might ask about background information, deeper reasons behind things occurring, or more. Despite recent progress with data-driven approaches, generating such questions is beyond the range of models trained on existing datasets. We introduce INQUISITIVE, a dataset of ~19K questions that are elicited while a person is reading through a document. Compared to existing datasets, INQUISITIVE questions target more towards high-level (semantic and discourse) comprehension of text. We show that readers engage in a series of pragmatic strategies to seek information. Finally, we evaluate question generation models based on GPT-2 and show that our model is able to generate reasonable questions although the task is challenging, and highlight the importance of context to generate INQUISITIVE questions.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

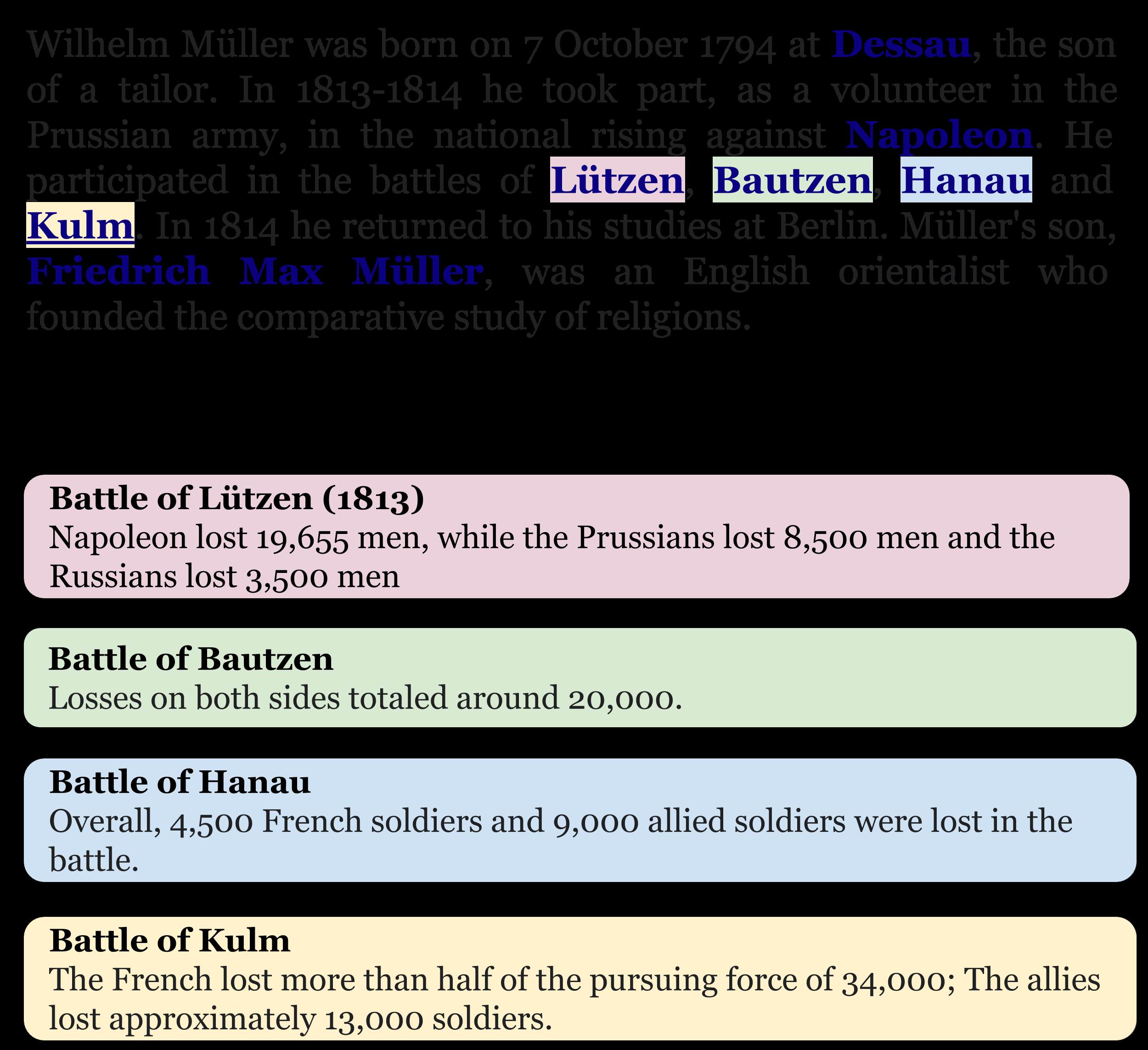

IIRC: A Dataset of Incomplete Information Reading Comprehension Questions

James Ferguson, Matt Gardner, Hannaneh Hajishirzi, Tushar Khot, Pradeep Dasigi,

PathQG: Neural Question Generation from Facts

Siyuan Wang, Zhongyu Wei, Zhihao Fan, Zengfeng Huang, Weijian Sun, Qi Zhang, Xuanjing Huang,

Unsupervised Commonsense Question Answering with Self-Talk

Vered Shwartz, Peter West, Ronan Le Bras, Chandra Bhagavatula, Yejin Choi,

Context-Aware Answer Extraction in Question Answering

Yeon Seonwoo, Ji-Hoon Kim, Jung-Woo Ha, Alice Oh,