Entity Linking in 100 Languages

Jan A. Botha, Zifei Shan, Daniel Gillick

Information Extraction Long Paper


Abstract:

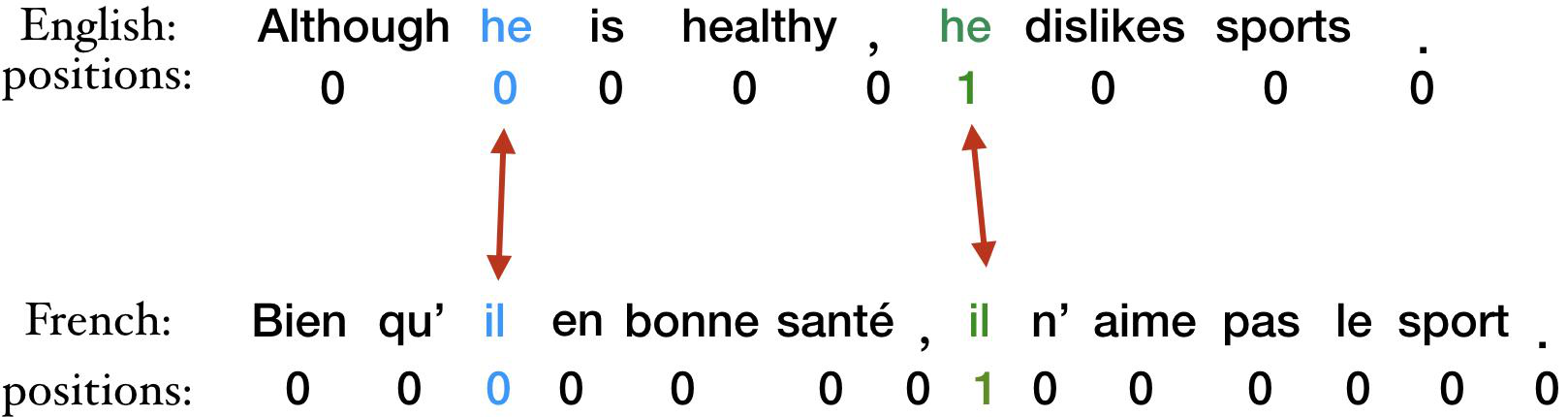

We propose a new formulation for multilingual entity linking, where language-specific mentions resolve to a language-agnostic Knowledge Base. We train a dual encoder in this new setting, building on prior work with improved feature representation, negative mining, and an auxiliary entity-pairing task, to obtain a single entity retrieval model that covers 100+ languages and 20 million entities. The model outperforms state-of-the-art results from a far more limited cross-lingual linking task. Rare entities and low-resource languages pose challenges at this large scale, so we advocate for an increased focus on zero- and few-shot evaluation. To this end, we provide Mewsli-9, a large new multilingual dataset matched to our setting, and show how frequency-based analysis provided key insights for our model and training enhancements.
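As a rough illustration of the retrieval step a dual encoder performs, the sketch below ranks entities by similarity to a mention vector in a shared embedding space. It is not the authors' implementation: the vectors are hand-written stand-ins for encoder outputs, the entity IDs (`ENT_A` and so on) and the function names `cosine` and `retrieve` are hypothetical, and the use of cosine similarity as the scoring function is an assumption that the abstract does not state.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def retrieve(mention_vec, entity_vecs, k=1):
    """Return the IDs of the k entities most similar to the mention vector."""
    ranked = sorted(
        entity_vecs.items(),
        key=lambda item: cosine(mention_vec, item[1]),
        reverse=True,
    )
    return [entity_id for entity_id, _ in ranked[:k]]


# Toy, hand-written vectors standing in for entity-encoder outputs.
# The IDs are hypothetical placeholders for language-agnostic KB entries.
ENTITY_VECS = {
    "ENT_A": [1.0, 0.0, 0.0],
    "ENT_B": [0.0, 1.0, 0.0],
    "ENT_C": [0.0, 0.0, 1.0],
}

# Two mentions of the same entity in different languages would ideally
# land near the same entity vector; these values are invented for the demo.
mention_lang1 = [0.9, 0.1, 0.0]
mention_lang2 = [0.8, 0.0, 0.2]

top_lang1 = retrieve(mention_lang1, ENTITY_VECS)
top_lang2 = retrieve(mention_lang2, ENTITY_VECS)
top2_lang2 = retrieve(mention_lang2, ENTITY_VECS, k=2)
```

In this toy setup both mentions retrieve the same entity ID even though their vectors differ, which is the behaviour the language-agnostic formulation aims for. At the scale described in the abstract, an exhaustive sort like this would presumably be replaced by an approximate nearest-neighbor index.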



Similar Papers

LAReQA: Language-Agnostic Answer Retrieval from a Multilingual Pool

Uma Roy, Noah Constant, Rami Al-Rfou, Aditya Barua, Aaron Phillips, Yinfei Yang

Scalable Zero-shot Entity Linking with Dense Entity Retrieval

Ledell Wu, Fabio Petroni, Martin Josifoski, Sebastian Riedel, Luke Zettlemoyer

MAD-X: An Adapter-Based Framework for Multi-Task Cross-Lingual Transfer

Jonas Pfeiffer, Ivan Vulić, Iryna Gurevych, Sebastian Ruder