LAReQA: Language-Agnostic Answer Retrieval from a Multilingual Pool

Uma Roy, Noah Constant, Rami Al-Rfou, Aditya Barua, Aaron Phillips, Yinfei Yang

Machine Translation and Multilinguality, Long Paper

Abstract:

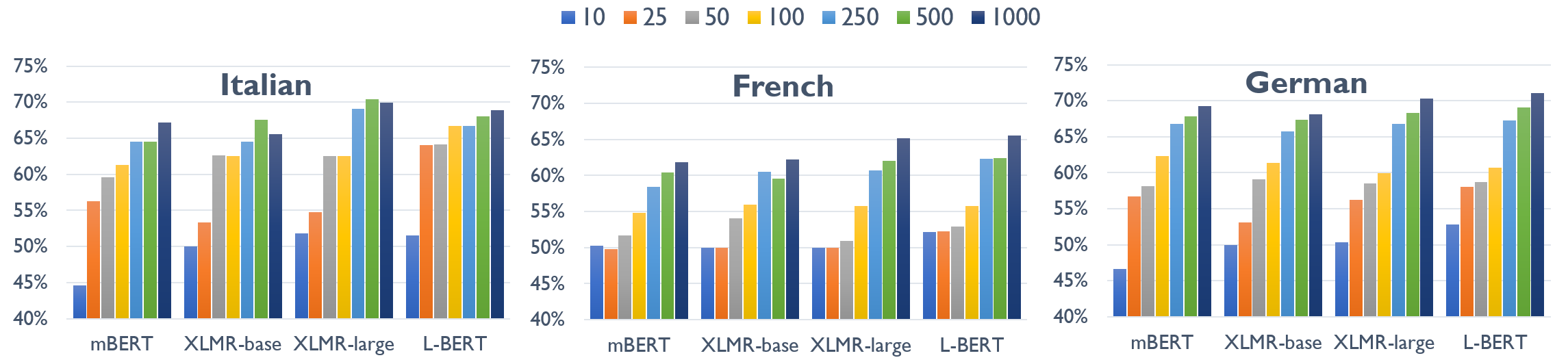

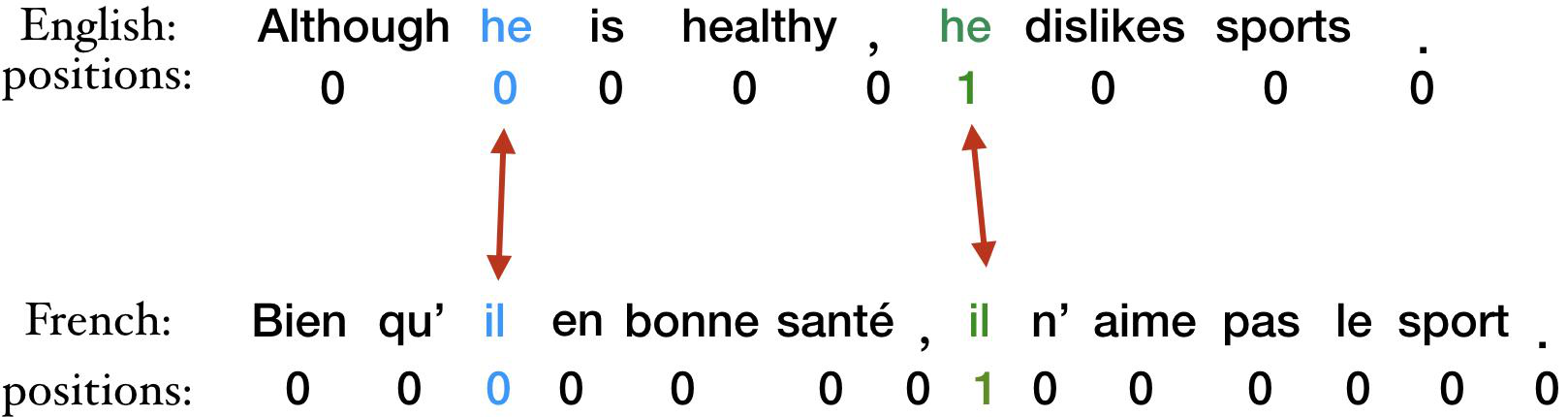

We present LAReQA, a challenging new benchmark for language-agnostic answer retrieval from a multilingual candidate pool. Unlike previous cross-lingual tasks, LAReQA tests for "strong" cross-lingual alignment, requiring semantically related *cross*-language pairs to be closer in representation space than unrelated *same*-language pairs. This level of alignment is important for the practical task of cross-lingual information retrieval. Building on multilingual BERT (mBERT), we study different strategies for achieving strong alignment. We find that augmenting training data via machine translation is effective, and improves significantly over using mBERT out-of-the-box. Interestingly, model performance on zero-shot variants of our task that only target "weak" alignment is not predictive of performance on LAReQA. This finding underscores our claim that language-agnostic retrieval is a substantively new kind of cross-lingual evaluation, and suggests that measuring both weak and strong alignment will be important for improving cross-lingual systems going forward. We release our dataset and evaluation code at https://github.com/google-research-datasets/lareqa.
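
The difference between weak and strong alignment can be illustrated with a toy check. This is a minimal sketch and not the released evaluation code: the function names, the 2-D vectors, and the reading of "weak" as "relevant answers outrank irrelevant ones within each language separately" are assumptions made for illustration only.

```python
from math import sqrt

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v)))

def is_strongly_aligned(query, candidates):
    """True if every relevant candidate outscores every irrelevant one,
    regardless of language (hypothetical helper, one mixed pool)."""
    rel = [cosine(query, v) for v, _, r in candidates if r]
    irr = [cosine(query, v) for v, _, r in candidates if not r]
    if not rel or not irr:
        return True
    return min(rel) > max(irr)

def is_weakly_aligned(query, candidates):
    """True if, within each language taken separately, relevant candidates
    outscore irrelevant ones (hypothetical helper)."""
    for lang in {l for _, l, _ in candidates}:
        same_lang = [c for c in candidates if c[1] == lang]
        if not is_strongly_aligned(query, same_lang):
            return False
    return True

# Toy pool: (embedding, language, is_relevant). Values are made up.
query = (1.0, 0.0)  # an English question
pool = [
    ((0.9, 0.1), "en", True),   # English answer, relevant
    ((0.7, 0.7), "en", False),  # English answer, unrelated
    ((0.5, 0.9), "de", True),   # German answer, relevant
    ((0.1, 1.0), "de", False),  # German answer, unrelated
]

weak = is_weakly_aligned(query, pool)
strong = is_strongly_aligned(query, pool)
```

In this toy pool the relevant German answer ranks above the unrelated German answer, so the weak check passes, but it ranks below the unrelated English answer, so the strong check fails. That same-language bias is the kind of behavior a multilingual candidate pool is meant to expose.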

Connected Papers in EMNLP2020

Similar Papers

MAD-X: An Adapter-Based Framework for Multi-Task Cross-Lingual Transfer

Jonas Pfeiffer, Ivan Vulić, Iryna Gurevych, Sebastian Ruder

XL-WiC: A Multilingual Benchmark for Evaluating Semantic Contextualization

Alessandro Raganato, Tommaso Pasini, Jose Camacho-Collados, Mohammad Taher Pilehvar