Table Fact Verification with Structure-Aware Transformer

Hongzhi Zhang, Yingyao Wang, Sirui Wang, Xuezhi Cao, Fuzheng Zhang, Zhongyuan Wang

Semantics: Sentence-level Semantics, Textual Inference and Other areas Short Paper


Abstract:

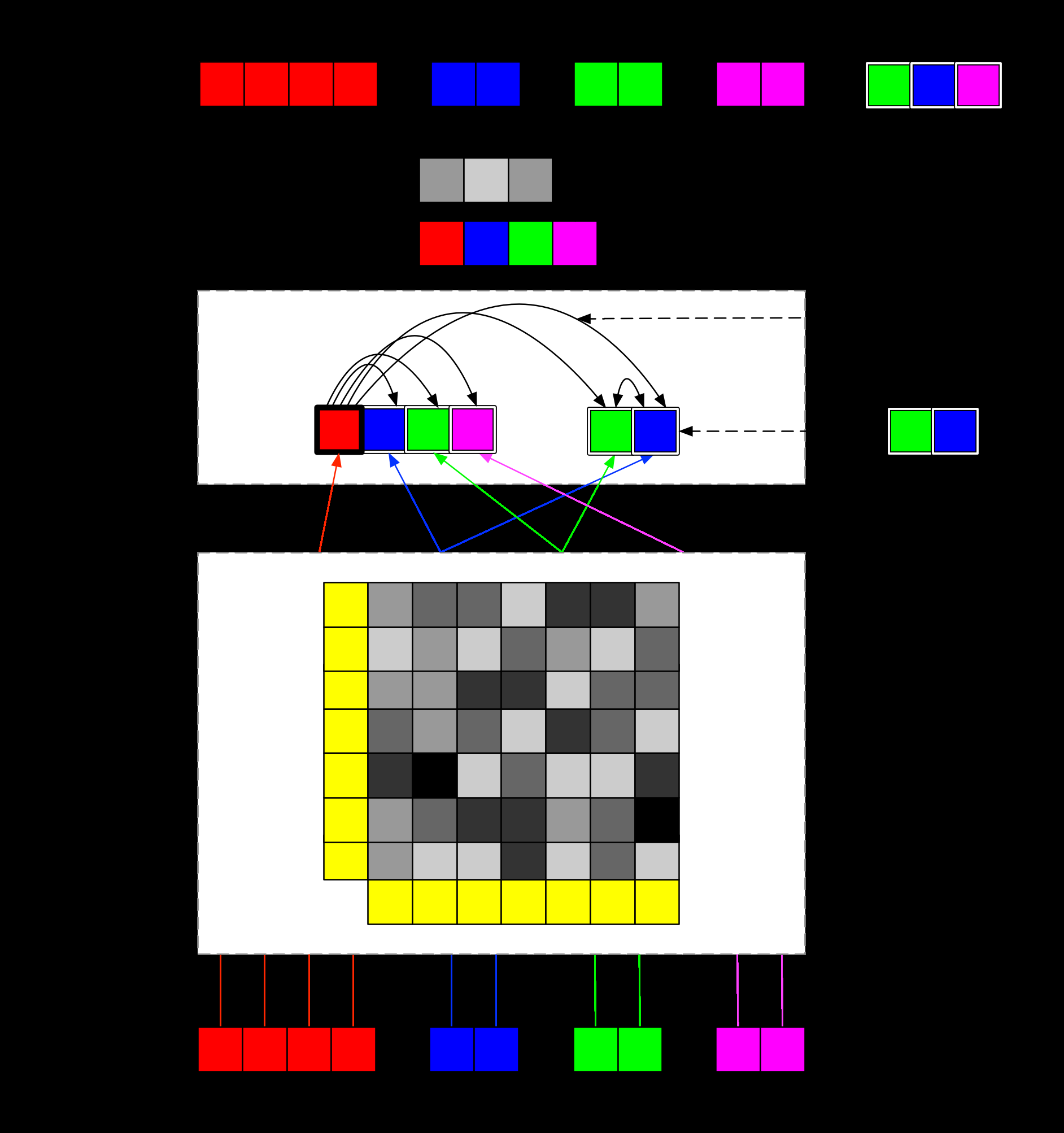

Verifying facts against semi-structured evidence such as tables requires the ability to encode structural information and perform symbolic reasoning. Language models pre-trained on natural language cannot be applied directly to encode tables, because simply linearizing a table into a sequence loses the cell alignment information. To better utilize pre-trained transformers for table representation, we propose a Structure-Aware Transformer (SAT), which injects the table's structural information into the mask of the self-attention layer. We also explore a method for combining symbolic and linguistic reasoning for this task. Our method outperforms the baseline by 4.93% on TabFact, a large-scale table verification dataset.
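The idea of injecting table structure into the self-attention mask can be illustrated with a minimal sketch. The abstract does not spell out the exact masking rule, so the one used here is an assumption made for illustration: a table token may attend to tokens in the same row or the same column, and statement tokens are visible to and from every position. The function names `build_structure_mask` and `masked_attention` are hypothetical, and plain NumPy stands in for a pre-trained transformer.

```python
import numpy as np


def build_structure_mask(n_statement, cell_positions):
    """Build a boolean attention mask for [statement tokens] + [table tokens].

    cell_positions holds one (row, col) pair per table token, in linearized
    order. ASSUMPTION (not from the paper): table tokens see each other only
    when they share a row or a column; statement tokens are globally visible.
    """
    rows = np.array([r for r, _ in cell_positions])
    cols = np.array([c for _, c in cell_positions])
    n = n_statement + len(cell_positions)

    mask = np.zeros((n, n), dtype=bool)
    # Statement tokens attend everywhere and are attended to by every token.
    mask[:n_statement, :] = True
    mask[:, :n_statement] = True
    # Table tokens attend to table tokens in the same row or the same column.
    same_row = rows[:, None] == rows[None, :]
    same_col = cols[:, None] == cols[None, :]
    mask[n_statement:, n_statement:] = same_row | same_col
    return mask


def masked_attention(q, k, v, mask):
    """Single-head scaled dot-product attention restricted by a boolean mask."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    # Disallowed pairs get a large negative score, so softmax sends them to 0.
    scores = np.where(mask, scores, -1e9)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


if __name__ == "__main__":
    # Two statement tokens followed by a 2x2 table with one token per cell.
    cells = [(0, 0), (0, 1), (1, 0), (1, 1)]
    mask = build_structure_mask(2, cells)
    x = np.random.default_rng(0).normal(size=(6, 8))
    _, weights = masked_attention(x, x, x, mask)
    print(mask.astype(int))
    print(weights.round(3))
```

Under this assumed rule, the token for cell (0, 0) receives zero attention weight on cell (1, 1), which shares neither its row nor its column, while a plain linearized sequence would let every token attend to every other one and discard that alignment.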


Connected Papers in EMNLP2020

Similar Papers

ETC: Encoding Long and Structured Inputs in Transformers

Joshua Ainslie, Santiago Ontanon, Chris Alberti, Vaclav Cvicek, Zachary Fisher, Philip Pham, Anirudh Ravula, Sumit Sanghai, Qifan Wang, Li Yang

Retrofitting Structure-aware Transformer Language Model for End Tasks

Hao Fei, Yafeng Ren, Donghong Ji

Stepwise Extractive Summarization and Planning with Structured Transformers

Shashi Narayan, Joshua Maynez, Jakub Adamek, Daniele Pighin, Blaz Bratanic, Ryan McDonald

Multi-Step Inference for Reasoning Over Paragraphs

Jiangming Liu, Matt Gardner, Shay B. Cohen, Mirella Lapata