Multi-Step Inference for Reasoning Over Paragraphs

Jiangming Liu, Matt Gardner, Shay B. Cohen, Mirella Lapata

Question Answering Long Paper

Abstract:

Complex reasoning over text requires understanding and chaining together free-form predicates and logical connectives. Prior work has largely tried to do this either symbolically or with black-box transformers. We present a middle ground between these two extremes: a compositional model reminiscent of neural module networks that can perform chained logical reasoning. This model first finds relevant sentences in the context and then chains them together using neural modules. Our model gives significant performance improvements (up to 29% relative error reduction when combined with a reranker) on ROPES, a recently introduced complex reasoning dataset.

Connected Papers in EMNLP2020

Similar Papers

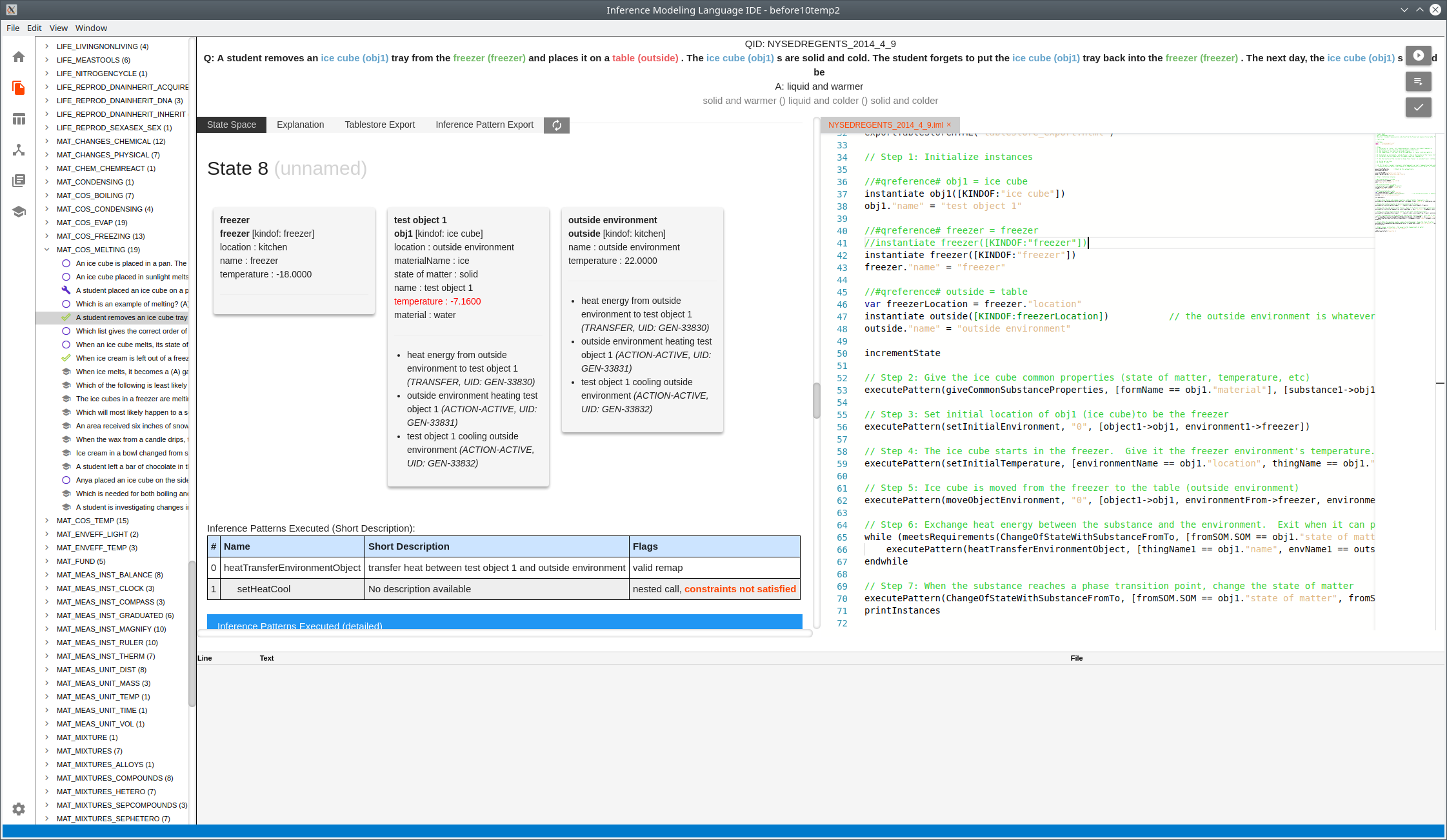

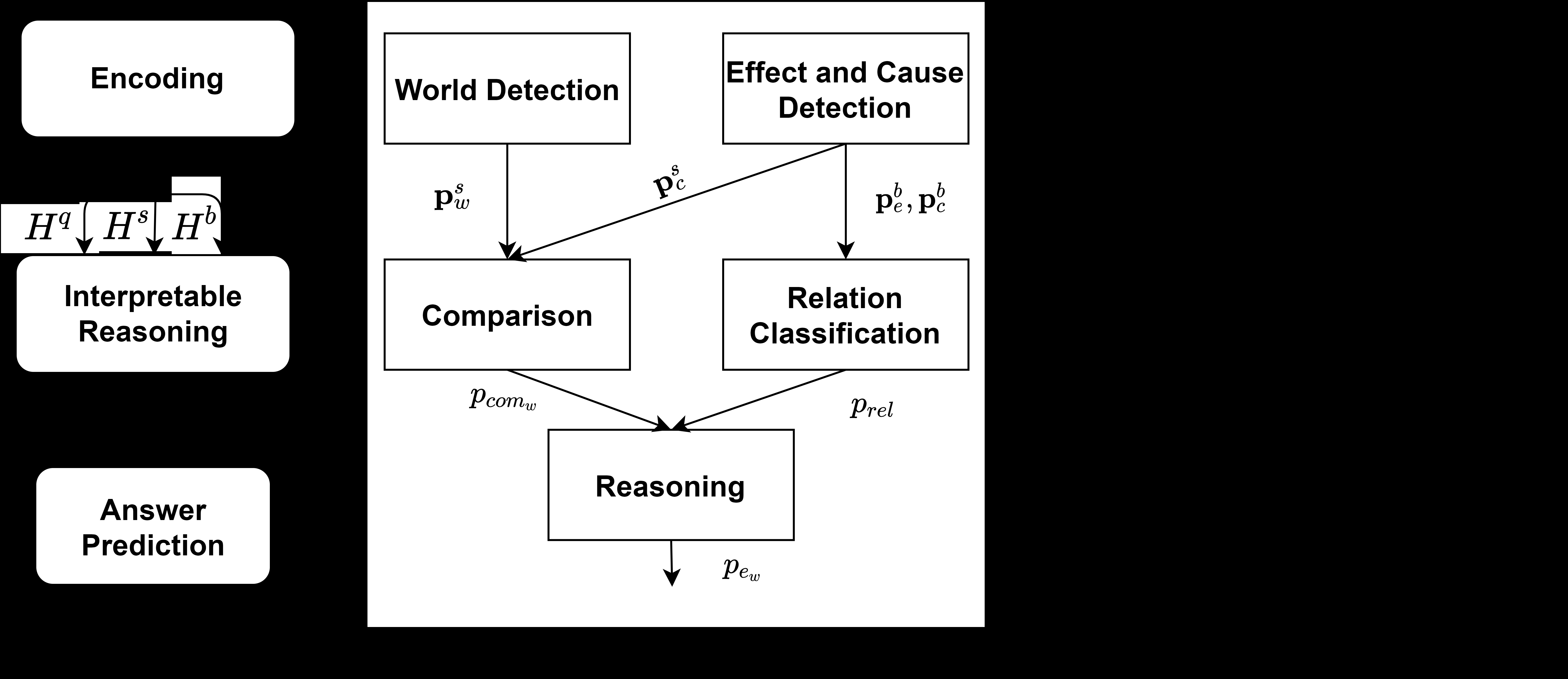

Towards Interpretable Reasoning over Paragraph Effects in Situation

Mucheng Ren, Xiubo Geng, Tao Qin, Heyan Huang, Daxin Jiang

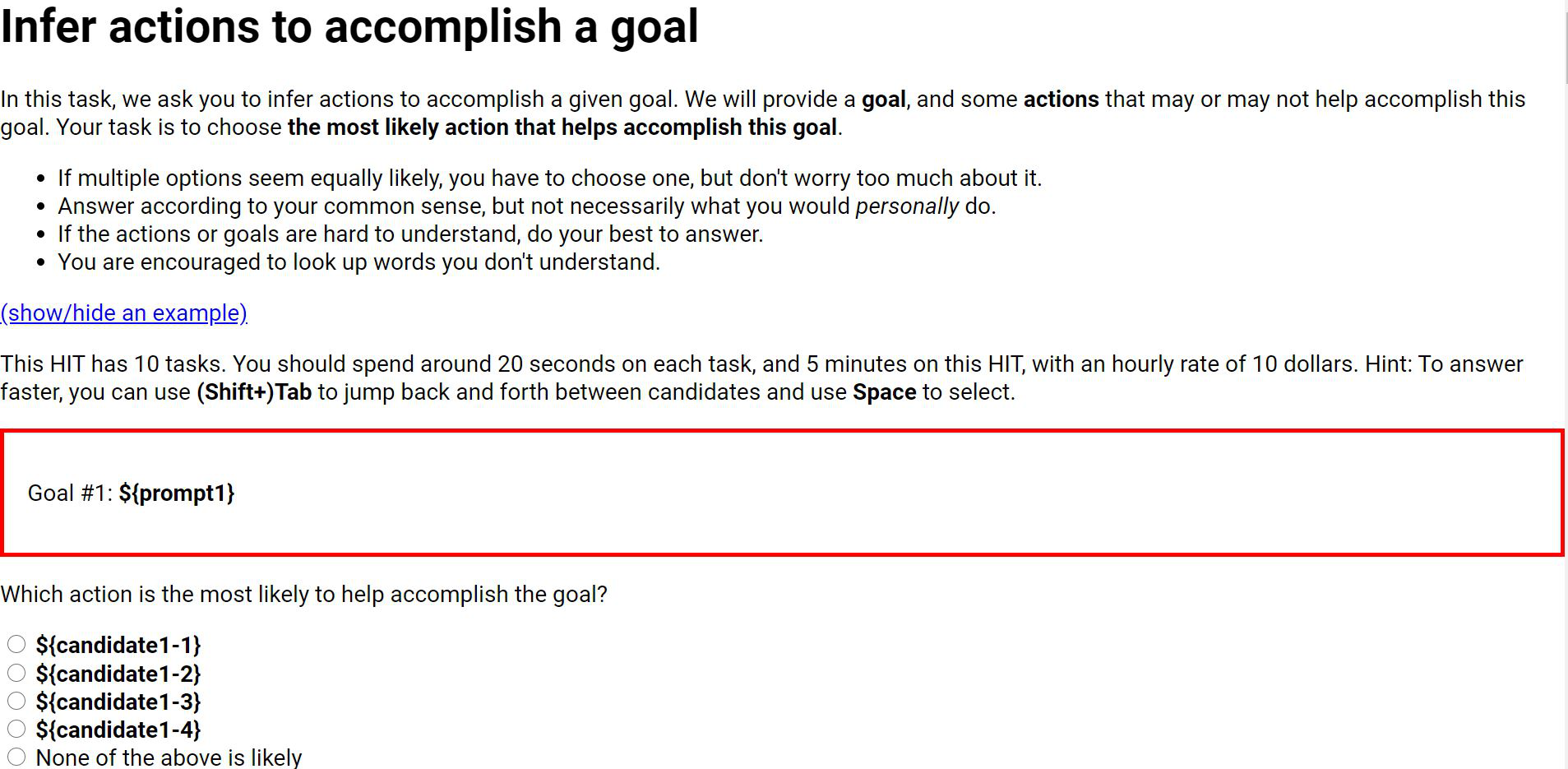

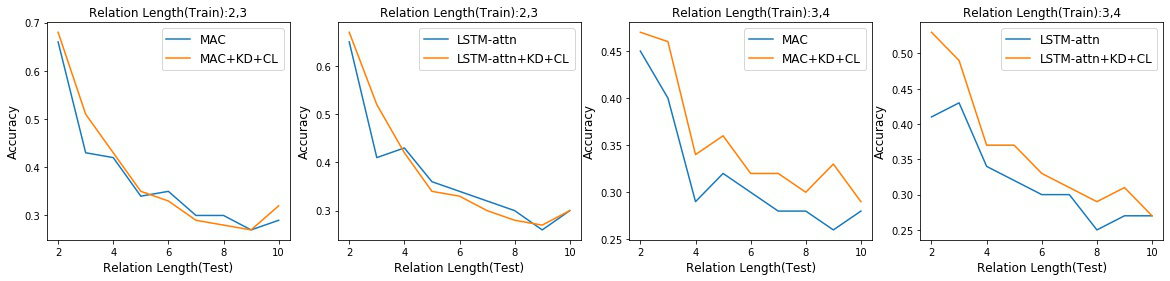

Distilling Structured Knowledge for Text-Based Relational Reasoning

Jin Dong, Marc-Antoine Rondeau, William L. Hamilton

Reasoning about Goals, Steps, and Temporal Ordering with WikiHow

Li Zhang, Qing Lyu, Chris Callison-Burch