Knowledge-Grounded Dialogue Generation with Pre-trained Language Models

Xueliang Zhao, Wei Wu, Can Xu, Chongyang Tao, Dongyan Zhao, Rui Yan

Dialog and Interactive Systems Long Paper

Abstract:

We study knowledge-grounded dialogue generation with pre-trained language models. To make use of redundant external knowledge under a capacity constraint, we propose equipping response generation, defined by a pre-trained language model, with a knowledge selection module, together with an unsupervised approach that jointly optimizes knowledge selection and response generation on unlabeled dialogues. Empirical results on two benchmarks indicate that our model significantly outperforms state-of-the-art methods in both automatic evaluation and human judgment.
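To make the idea of selecting knowledge under a capacity constraint concrete, here is a minimal sketch in Python. It is not the method from the paper: the abstract describes a selection module optimized jointly with the generator on unlabeled dialogues, whereas this toy version ranks candidates with a fixed word-overlap heuristic. The function names, the whitespace tokenization, the `budget` parameter, and the `[SEP]` separator are all assumptions made for illustration.

```python
from typing import List


def overlap_score(context: str, sentence: str) -> float:
    """Toy relevance score: fraction of sentence tokens that also occur in the context.

    Assumption: a learned selector would replace this heuristic.
    """
    ctx = set(context.lower().split())
    toks = sentence.lower().split()
    if not toks:
        return 0.0
    return sum(t in ctx for t in toks) / len(toks)


def select_knowledge(context: str, candidates: List[str], budget: int) -> List[str]:
    """Greedily keep the highest-scoring sentences whose total token count fits the budget."""
    ranked = sorted(candidates, key=lambda s: overlap_score(context, s), reverse=True)
    selected, used = [], 0
    for sent in ranked:
        n = len(sent.split())  # whitespace tokens stand in for model tokens
        if used + n <= budget:
            selected.append(sent)
            used += n
    return selected


def build_generator_input(context: str, knowledge: List[str], sep: str = " [SEP] ") -> str:
    """Concatenate selected knowledge and the dialogue context into one generator input."""
    return sep.join(knowledge + [context])
```

A response generator would then consume the string returned by `build_generator_input`. The budget plays the role of the capacity constraint: only knowledge that fits is passed on, so the quality of the ranking decides what the generator gets to see.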

Similar Papers

Semantic Role Labeling Guided Multi-turn Dialogue ReWriter

Kun Xu, Haochen Tan, Linfeng Song, Han Wu, Haisong Zhang, Linqi Song, Dong Yu

MinTL: Minimalist Transfer Learning for Task-Oriented Dialogue Systems

Zhaojiang Lin, Andrea Madotto, Genta Indra Winata, Pascale Fung

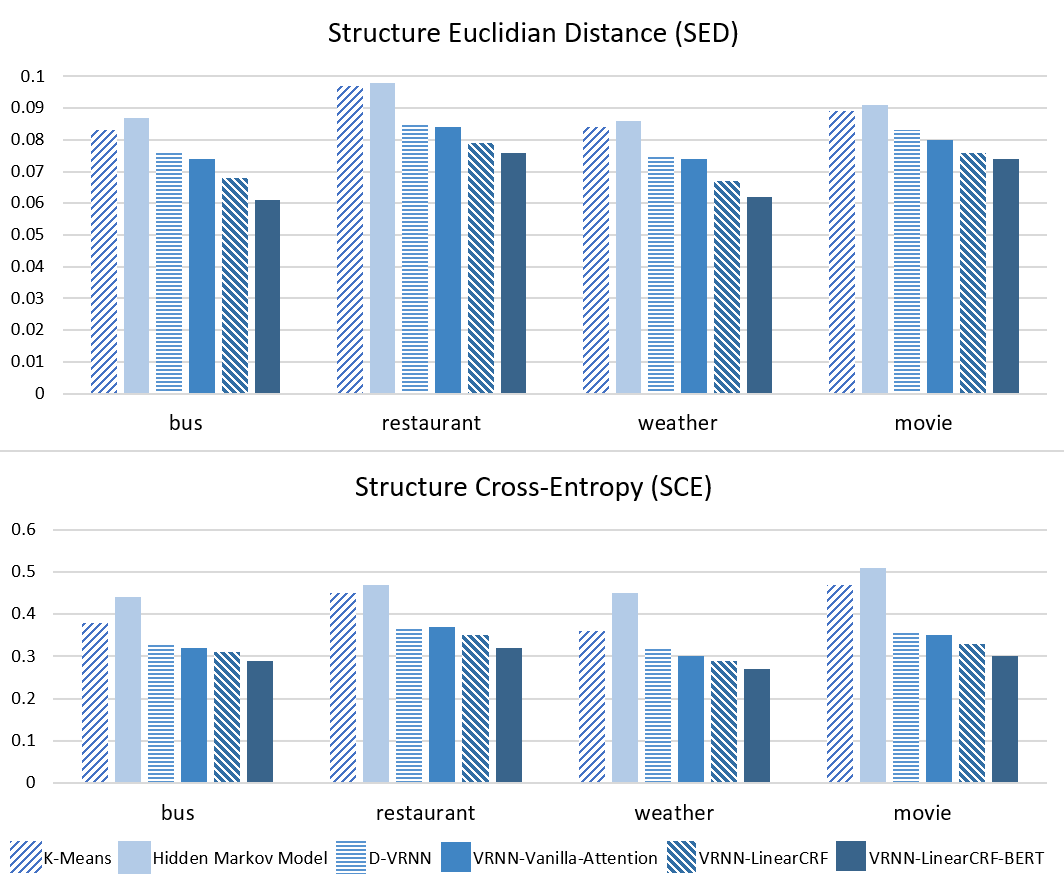

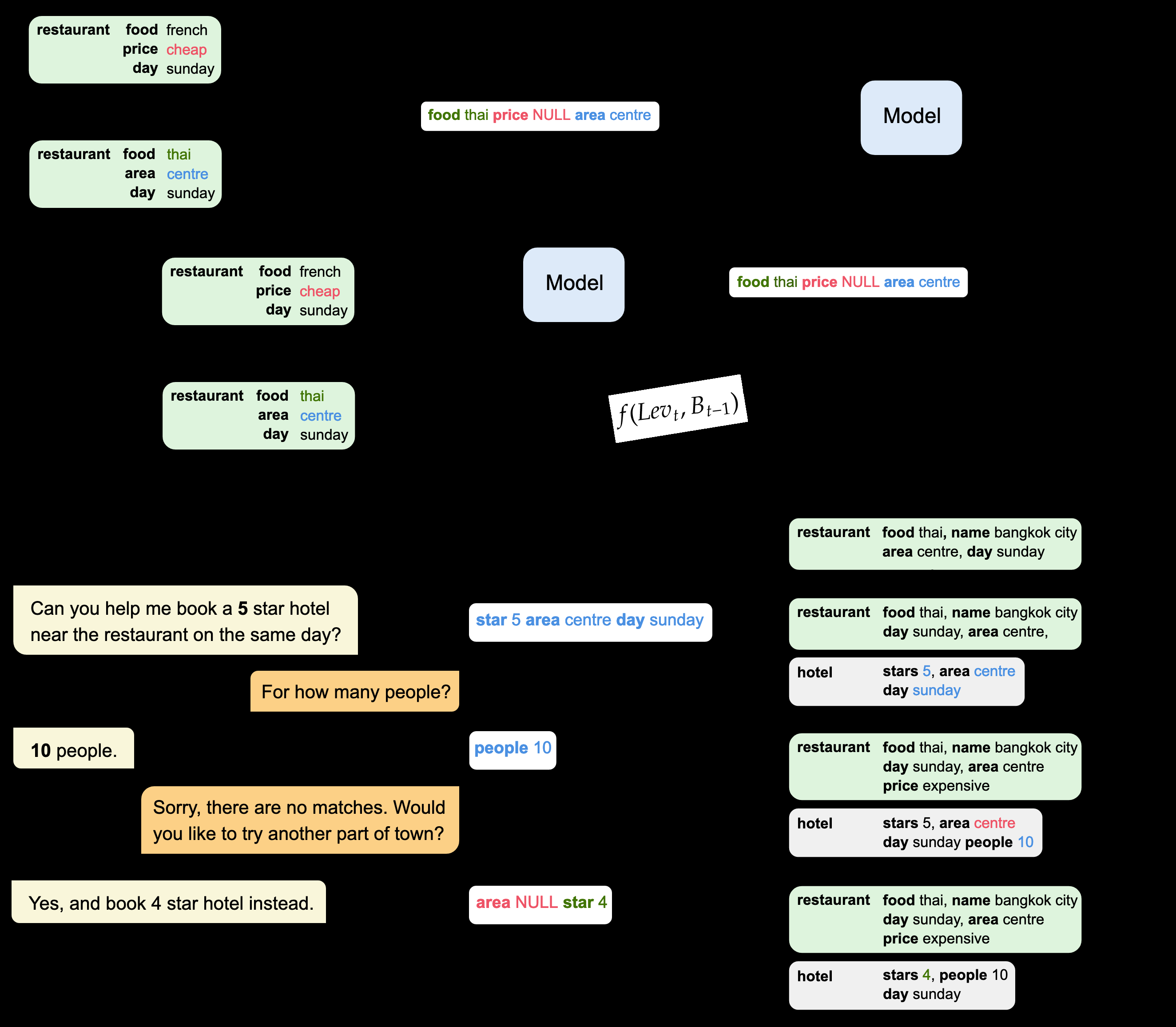

Structured Attention for Unsupervised Dialogue Structure Induction

Liang Qiu, Yizhou Zhao, Weiyan Shi, Yuan Liang, Feng Shi, Tao Yuan, Zhou Yu, Song-Chun Zhu