Probing Task-Oriented Dialogue Representation from Language Models

Chien-Sheng Wu, Caiming Xiong

Dialog and Interactive Systems Long Paper

Abstract:

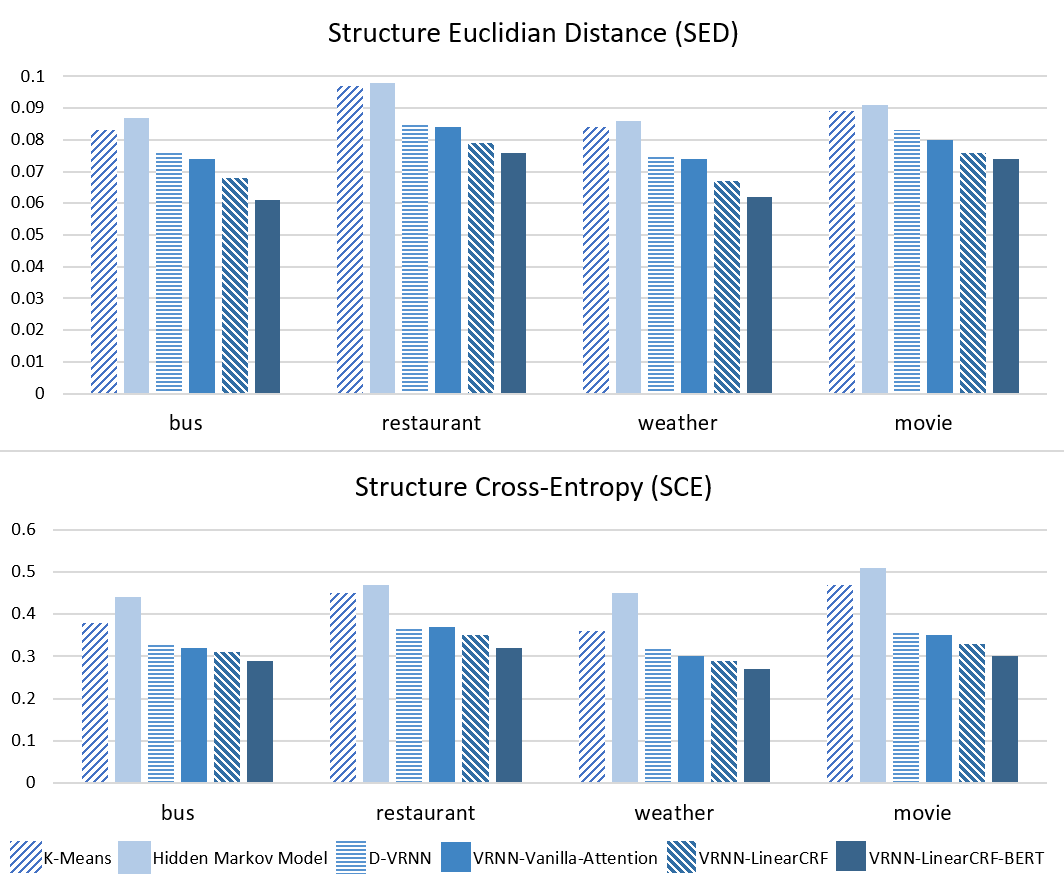

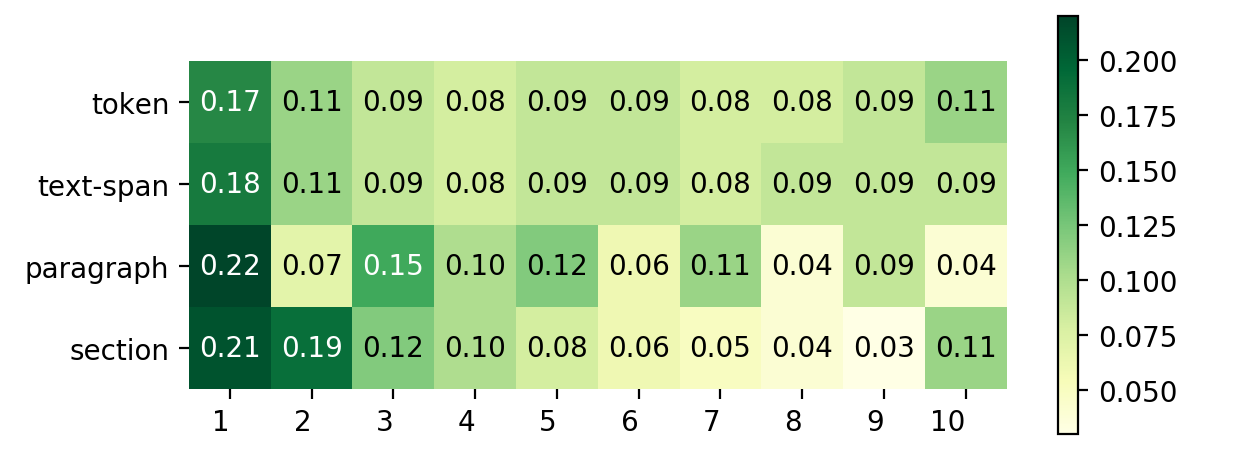

This paper investigates pre-trained language models to determine which model intrinsically carries the most informative representation for task-oriented dialogue tasks. We approach the problem from two angles: a supervised classifier probe and an unsupervised mutual information probe. For the classifier probe, we fine-tune a feed-forward layer on top of a fixed pre-trained language model, using annotated labels as supervision. For the unsupervised mutual information probe, which we propose, we evaluate the mutual dependence between a real clustering and a representation clustering. The goals of this empirical paper are to 1) investigate probing techniques, especially from the unsupervised mutual information perspective, 2) provide guidelines for selecting pre-trained language models for the dialogue research community, and 3) identify pre-training factors that may be key to success in dialogue applications.
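
To make the mutual information probe more concrete, the following is a minimal sketch, not the authors' implementation. It assumes that the "representation clustering" is obtained by running k-means over fixed sentence vectors and that the score is mutual information normalized by the mean of the two entropies; the abstract specifies neither choice. The toy vectors, the labels `inform` and `request`, and all function names are hypothetical stand-ins for real language model outputs and dialogue annotations.

```python
import math
import random
from collections import Counter


def entropy(labels):
    """Shannon entropy (in nats) of a list of cluster labels."""
    n = len(labels)
    return -sum((c / n) * math.log(c / n) for c in Counter(labels).values())


def mutual_information(labels_a, labels_b):
    """Mutual information (in nats) between two clusterings of the same items."""
    n = len(labels_a)
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    joint = Counter(zip(labels_a, labels_b))
    return sum(
        (c / n) * math.log(c * n / (count_a[a] * count_b[b]))
        for (a, b), c in joint.items()
    )


def normalized_mutual_information(labels_a, labels_b):
    """MI divided by the mean entropy (an assumed normalization)."""
    h_a, h_b = entropy(labels_a), entropy(labels_b)
    if h_a == 0.0 or h_b == 0.0:
        # Convention chosen for this sketch: a single-cluster partition scores 0.
        return 0.0
    return mutual_information(labels_a, labels_b) / ((h_a + h_b) / 2)


def kmeans(points, k, iterations=20, seed=0):
    """Plain k-means; returns one cluster index per point."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assign each point to its nearest centroid (squared Euclidean distance).
        assignments = [
            min(
                range(k),
                key=lambda j: sum((x - c) ** 2 for x, c in zip(p, centroids[j])),
            )
            for p in points
        ]
        # Move each centroid to the mean of its members; keep it if empty.
        for j in range(k):
            members = [p for p, a in zip(points, assignments) if a == j]
            if members:
                centroids[j] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignments


# Hypothetical stand-ins: 2-D "representations" from a frozen model and
# the annotated labels that define the real clustering.
representations = [
    [0.0, 0.1], [0.2, 0.0], [0.1, 0.2],
    [5.0, 5.1], [5.2, 4.9], [4.9, 5.0],
]
real_clustering = ["inform", "inform", "inform", "request", "request", "request"]

representation_clustering = kmeans(representations, k=2)
score = normalized_mutual_information(real_clustering, representation_clustering)
print(f"normalized mutual information: {score:.3f}")
```

Under these assumptions, a higher score means the clusters found in the representation space line up more closely with the annotated labels, and no probe parameters are trained at any point, which is what makes this style of probe unsupervised.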

Similar Papers

Structured Attention for Unsupervised Dialogue Structure Induction

Liang Qiu, Yizhou Zhao, Weiyan Shi, Yuan Liang, Feng Shi, Tao Yuan, Zhou Yu, Song-Chun Zhu

Multi-turn Response Selection using Dialogue Dependency Relations

Qi Jia, Yizhu Liu, Siyu Ren, Kenny Zhu, Haifeng Tang

doc2dial: A Goal-Oriented Document-Grounded Dialogue Dataset

Song Feng, Hui Wan, Chulaka Gunasekara, Siva Patel, Sachindra Joshi, Luis Lastras

Knowledge-Grounded Dialogue Generation with Pre-trained Language Models

Xueliang Zhao, Wei Wu, Can Xu, Chongyang Tao, Dongyan Zhao, Rui Yan