PERL: Pivot-based Domain Adaptation for Pre-trained Deep Contextualized Embedding Models

Roi Reichart, Eyal Ben David, Carmel Rabinovitz

Machine Learning for NLP TACL Paper

Abstract:

Pivot-based neural representation models have led to significant progress in domain adaptation for NLP. However, previous works that follow this approach utilize only labeled data from the source domain and unlabeled data from the source and target domains, but neglect to incorporate massive unlabeled corpora that are not necessarily drawn from these domains. To alleviate this, we propose PERL: A representation learning model that extends contextualized word embedding models such as BERT (Devlin et al., 2019) with pivot-based fine-tuning. PERL outperforms strong baselines across 22 sentiment classification domain adaptation setups, improves in-domain model performance, yields effective reduced-size models and increases model stability.

Connected Papers in EMNLP2020

Similar Papers

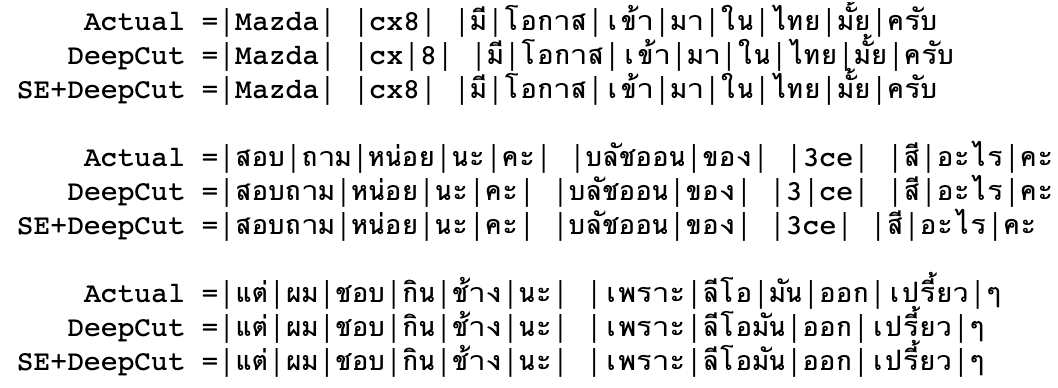

Domain Adaptation of Thai Word Segmentation Models using Stacked Ensemble

Peerat Limkonchotiwat, Wannaphong Phatthiyaphaibun, Raheem Sarwar, Ekapol Chuangsuwanich, Sarana Nutanong

VCDM: Leveraging Variational Bi-encoding and Deep Contextualized Word Representations for Improved Definition Modeling

Machel Reid, Edison Marrese-Taylor, Yutaka Matsuo

Improving Low Compute Language Modeling with In-Domain Embedding Initialisation

Charles Welch, Rada Mihalcea, Jonathan K. Kummerfeld