Pre-training for Abstractive Document Summarization by Reinstating Source Text

Yanyan Zou, Xingxing Zhang, Wei Lu, Furu Wei, Ming Zhou

Summarization Long Paper

Abstract:

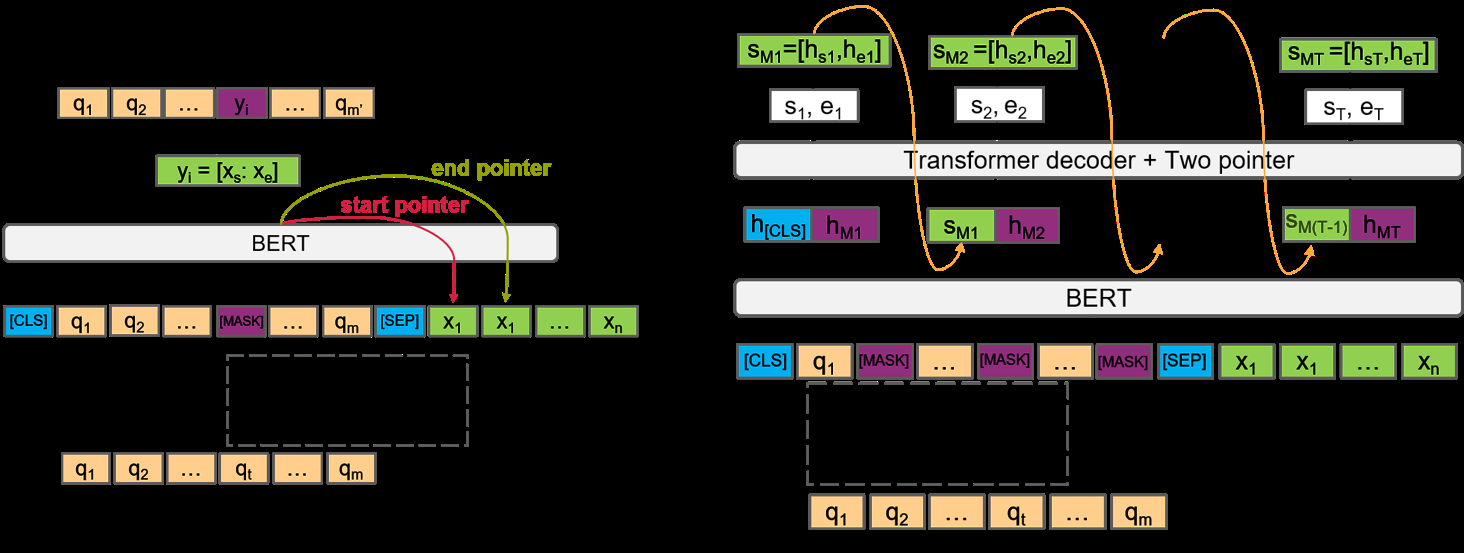

Abstractive document summarization is usually modeled as a sequence-to-sequence (SEQ2SEQ) learning problem. Unfortunately, training large SEQ2SEQ-based summarization models on limited supervised summarization data is challenging. This paper presents three sequence-to-sequence pre-training (STEP for short) objectives that allow us to pre-train a SEQ2SEQ-based abstractive summarization model on unlabeled text. The main idea is that, given an input text artificially constructed from a document, a model is pre-trained to reinstate the original document. The objectives are sentence reordering, next sentence generation, and masked document generation, all of which are closely related to the abstractive document summarization task. Experiments on two benchmark summarization datasets (CNN/DailyMail and New York Times) show that all three objectives improve performance over the baselines. Compared to models pre-trained on large-scale data (more than 160GB), our method achieves comparable results with only 19GB of text for pre-training, which demonstrates its effectiveness.
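
The three objectives named in the abstract can be illustrated with a minimal Python sketch that builds (input, target) pairs from an unlabeled document. This is not the authors' implementation: the function names, the `[MASK]` symbol, the split point, the choice of the remaining sentences as the next-sentence-generation target, and the single contiguous masked span are all assumptions made for illustration. The abstract specifies only that the input is artificially constructed from a document and that the model learns to reinstate the original text.

```python
import random

MASK = "[MASK]"  # hypothetical mask symbol, not taken from the paper


def sentence_reordering(sentences, rng):
    """Input: the sentences in shuffled order. Target: the original order."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return " ".join(shuffled), " ".join(sentences)


def next_sentence_generation(sentences, split):
    """Input: the first `split` sentences. Target: the remaining sentences.

    The split point is an assumed parameter of this sketch.
    """
    return " ".join(sentences[:split]), " ".join(sentences[split:])


def masked_document_generation(tokens, start, length):
    """Input: the document with one span replaced by MASK. Target: the original.

    Masking a single contiguous span is an assumption of this sketch.
    """
    span = tokens[start:start + length]
    masked = tokens[:start] + [MASK] * len(span) + tokens[start + length:]
    return " ".join(masked), " ".join(tokens)


if __name__ == "__main__":
    doc = ["The cat sat.", "It was warm.", "Then it slept."]
    rng = random.Random(0)  # seeded so the shuffle is reproducible
    print(sentence_reordering(doc, rng))
    print(next_sentence_generation(doc, split=1))
    print(masked_document_generation(" ".join(doc).split(), start=2, length=3))
```

Each function returns a source string and a target string, so the resulting pairs could be fed to any SEQ2SEQ model in the same way as document and summary pairs.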

Similar Papers

Multi-Fact Correction in Abstractive Text Summarization

Yue Dong, Shuohang Wang, Zhe Gan, Yu Cheng, Jackie Chi Kit Cheung, Jingjing Liu

On Extractive and Abstractive Neural Document Summarization with Transformer Language Models

Jonathan Pilault, Raymond Li, Sandeep Subramanian, Chris Pal

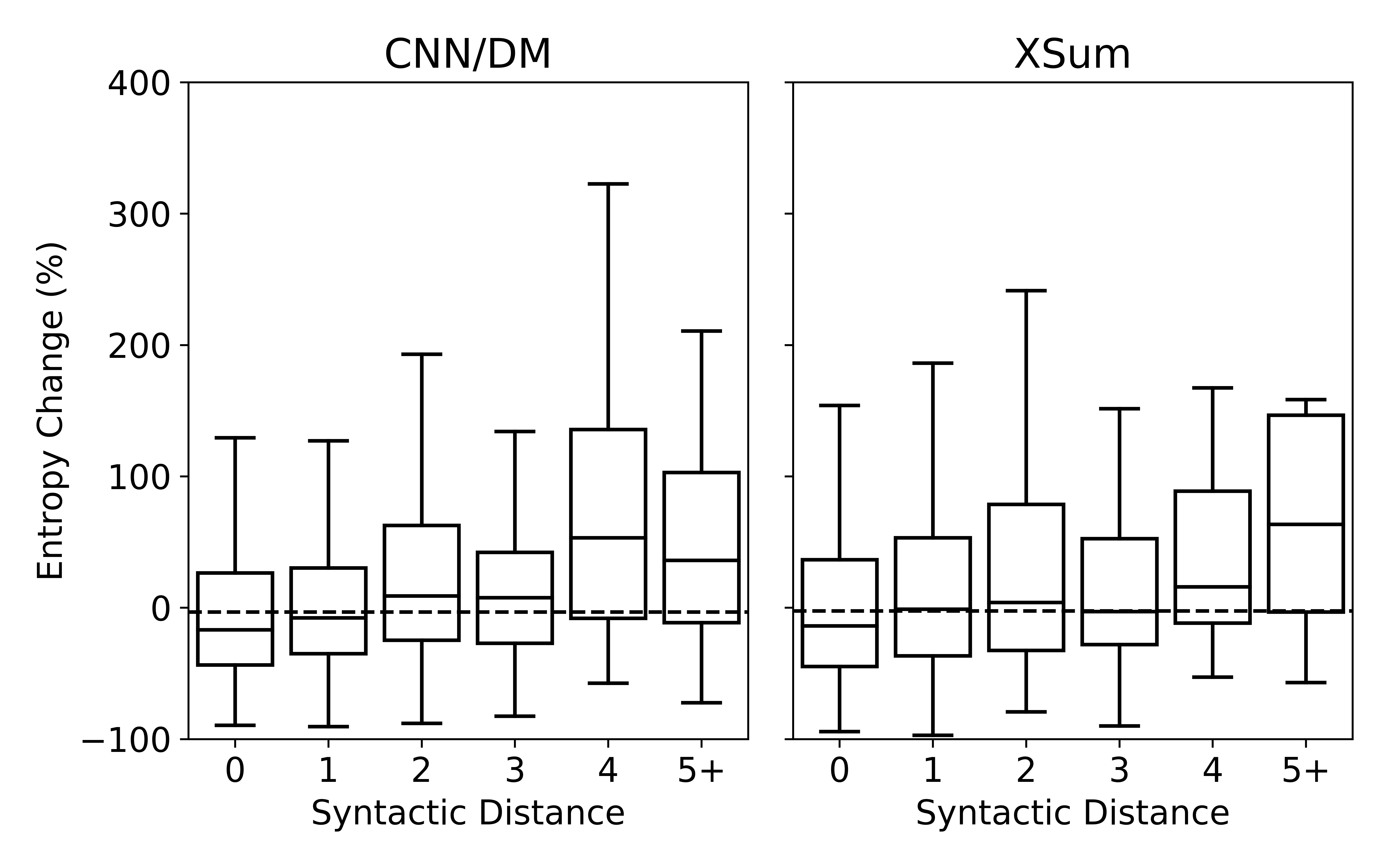

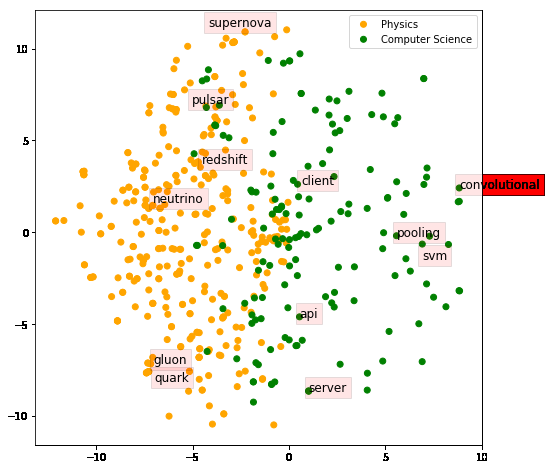

Understanding Neural Abstractive Summarization Models via Uncertainty

Jiacheng Xu, Shrey Desai, Greg Durrett