Multi-Fact Correction in Abstractive Text Summarization

Yue Dong, Shuohang Wang, Zhe Gan, Yu Cheng, Jackie Chi Kit Cheung, Jingjing Liu

Summarization Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

Pre-trained neural abstractive summarization systems have dominated extractive strategies on news summarization performance, at least in terms of ROUGE. However, system-generated abstractive summaries often face the pitfall of factual inconsistency: generating incorrect facts with respect to the source text. To address this challenge, we propose Span-Fact, a suite of two factual correction models that leverages knowledge learned from question answering models to make corrections in system-generated summaries via span selection. Our models employ single or multi-masking strategies to either iteratively or auto-regressively replace entities in order to ensure semantic consistency w.r.t. the source text, while retaining the syntactic structure of summaries generated by abstractive summarization models. Experiments show that our models significantly boost the factual consistency of system-generated summaries without sacrificing summary quality in terms of both automatic metrics and human evaluation.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Evaluating the Factual Consistency of Abstractive Text Summarization

Wojciech Kryscinski, Bryan McCann, Caiming Xiong, Richard Socher,

On Extractive and Abstractive Neural Document Summarization with Transformer Language Models

Jonathan Pilault, Raymond Li, Sandeep Subramanian, Chris Pal,

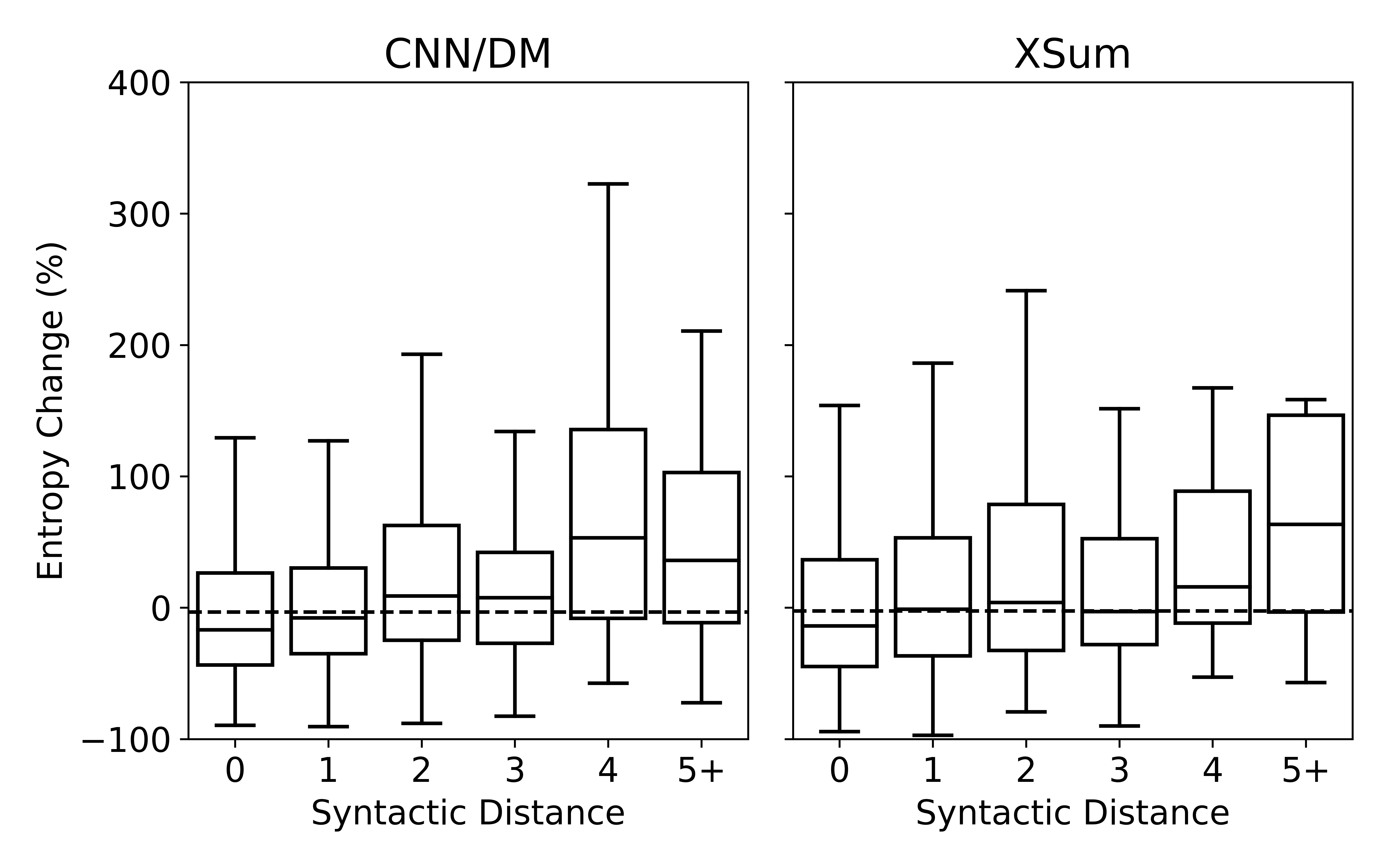

Understanding Neural Abstractive Summarization Models via Uncertainty

Jiacheng Xu, Shrey Desai, Greg Durrett,

What Have We Achieved on Text Summarization?

Dandan Huang, Leyang Cui, Sen Yang, Guangsheng Bao, Kun Wang, Jun Xie, Yue Zhang,