Understanding Neural Abstractive Summarization Models via Uncertainty

Jiacheng Xu, Shrey Desai, Greg Durrett

Summarization Short Paper


Abstract:

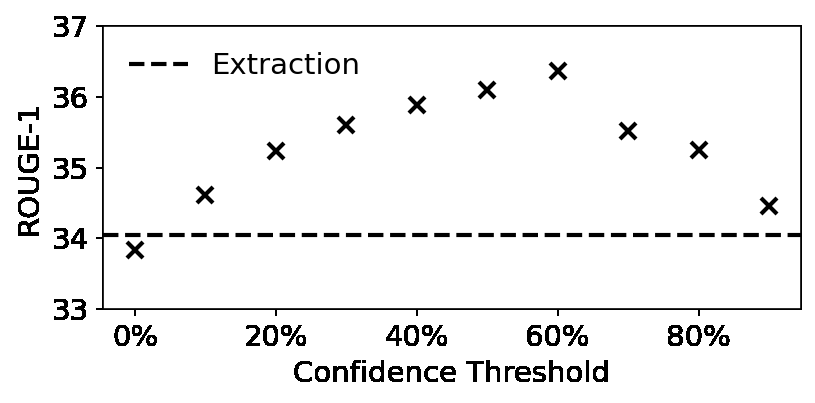

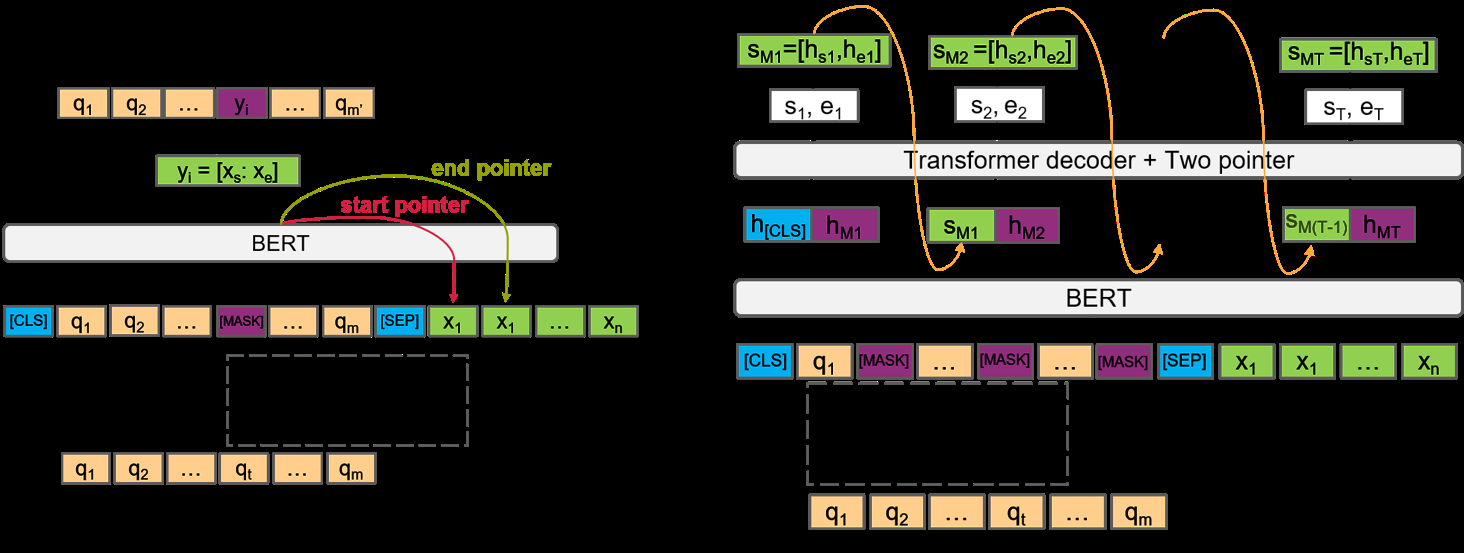

An advantage of seq2seq abstractive summarization models is that they generate text in a free-form manner, but this flexibility makes it difficult to interpret model behavior. In this work, we analyze summarization decoders in both blackbox and whitebox ways by studying the entropy, or uncertainty, of the model's token-level predictions. For two strong pre-trained models, PEGASUS and BART, on two summarization datasets, we find a strong correlation between low prediction entropy and where the model copies tokens rather than generating novel text. The decoder's uncertainty also connects to factors like sentence position and syntactic distance between adjacent pairs of tokens, giving a sense of what factors make a context particularly selective for the model's next output token. Finally, we study the relationship between decoder uncertainty and attention behavior to understand how attention gives rise to these observed effects in the model. We show that uncertainty is a useful perspective for analyzing summarization and text generation models more broadly.
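
To make the central quantity concrete, the following is a minimal sketch of token-level prediction entropy, assuming the decoder exposes a vector of logits over the vocabulary at each generation step. The function names (`softmax`, `token_entropy`, `sequence_entropies`) and the toy four-word vocabulary are illustrative assumptions, not the authors' code, and any preprocessing the paper may apply to the distribution before measuring entropy is not reproduced here.

```python
import math


def softmax(logits):
    """Convert raw logits into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def token_entropy(logits):
    """Shannon entropy (in nats) of the next-token distribution for one step."""
    probs = softmax(logits)
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def sequence_entropies(step_logits):
    """Entropy at every decoding step, given one logit vector per step."""
    return [token_entropy(logits) for logits in step_logits]


# Toy example over a hypothetical 4-word vocabulary (values are made up).
peaked = [10.0, 0.0, 0.0, 0.0]  # model is nearly certain about the next token
flat = [1.0, 1.0, 1.0, 1.0]     # model is maximally uncertain
entropies = sequence_entropies([peaked, flat])
```

In this toy setup the peaked step has entropy close to zero, while the flat step reaches the maximum for four outcomes, log(4) nats. Under the abstract's finding, low-entropy steps like the first would tend to coincide with copying from the source document.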


Connected Papers in EMNLP 2020

Similar Papers

Compressive Summarization with Plausibility and Salience Modeling

Shrey Desai, Jiacheng Xu, Greg Durrett

Multi-Fact Correction in Abstractive Text Summarization

Yue Dong, Shuohang Wang, Zhe Gan, Yu Cheng, Jackie Chi Kit Cheung, Jingjing Liu

Neural Extractive Summarization with Hierarchical Attentive Heterogeneous Graph Network

Ruipeng Jia, Yanan Cao, Hengzhu Tang, Fang Fang, Cong Cao, Shi Wang

Q-learning with Language Model for Edit-based Unsupervised Summarization

Ryosuke Kohita, Akifumi Wachi, Yang Zhao, Ryuki Tachibana