Exploring Semantic Capacity of Terms

Jie Huang, Zilong Wang, Kevin Chang, Wen-mei Hwu, JinJun Xiong

Semantics: Lexical Semantics (Long Paper)


Abstract:

We introduce and study the semantic capacity of terms. For example, the semantic capacity of artificial intelligence is higher than that of linear regression, since artificial intelligence possesses a broader meaning scope. Understanding the semantic capacity of terms can help many downstream tasks in natural language processing. To this end, we propose a two-step model to investigate the semantic capacity of terms; it takes a large text corpus as input and can evaluate the semantic capacity of terms, provided the corpus supplies enough co-occurrence information about them. Extensive experiments in three fields demonstrate the effectiveness and rationality of our model compared with well-designed baselines and human-level evaluations.
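The abstract does not spell out the two-step model, so the following is only a hypothetical illustration of the kind of co-occurrence statistics a corpus-based approach depends on. It is not the authors' method. The function names `cooccurrence_counts` and `breadth_proxy`, the use of sentence-level co-occurrence with plain substring matching, and the entropy-based score are all assumptions made for this sketch: a term that co-occurs evenly with many different terms receives a higher score, loosely mirroring the idea that a broader term has a higher semantic capacity.

```python
import math
from collections import Counter, defaultdict
from itertools import combinations


def cooccurrence_counts(sentences, terms):
    """Count how often each pair of known terms appears in the same sentence.

    Assumption for this sketch: a term "appears" if it is a substring of the
    lowercased sentence; a real system would use proper term matching.
    """
    counts = defaultdict(Counter)
    for sentence in sentences:
        text = sentence.lower()
        present = sorted({t for t in terms if t in text})
        for a, b in combinations(present, 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


def breadth_proxy(counts, term):
    """Hypothetical breadth score: Shannon entropy (bits) of a term's
    co-occurrence distribution over other terms. Not the paper's measure."""
    neighbors = counts.get(term, Counter())
    total = sum(neighbors.values())
    if total == 0:
        return 0.0
    # sum of p * log2(1/p) over co-occurring terms
    return sum((c / total) * math.log2(total / c) for c in neighbors.values())


corpus = [
    "Artificial intelligence includes machine learning.",
    "Artificial intelligence includes computer vision.",
    "Artificial intelligence includes natural language processing.",
    "Machine learning uses linear regression.",
]
terms = [
    "artificial intelligence",
    "machine learning",
    "computer vision",
    "natural language processing",
    "linear regression",
]
counts = cooccurrence_counts(corpus, terms)
for t in ("artificial intelligence", "machine learning", "linear regression"):
    print(t, round(breadth_proxy(counts, t), 3))
```

On this toy corpus, "artificial intelligence" co-occurs with three distinct terms and scores log2(3), about 1.585; "machine learning" co-occurs with two and scores 1.0; "linear regression" co-occurs with one and scores 0.0. A single raw statistic like this is sensitive to corpus coverage, which is consistent with the abstract's caveat that the corpus must provide enough co-occurrence information.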


Connected Papers in EMNLP2020

Similar Papers

Coreferential Reasoning Learning for Language Representation

Deming Ye, Yankai Lin, Jiaju Du, Zhenghao Liu, Peng Li, Maosong Sun, Zhiyuan Liu

Character-level Representations Improve DRS-based Semantic Parsing Even in the Age of BERT

Rik van Noord, Antonio Toral, Johan Bos

Compositional and Lexical Semantics in RoBERTa, BERT and DistilBERT: A Case Study on CoQA

Ieva Staliūnaitė, Ignacio Iacobacci

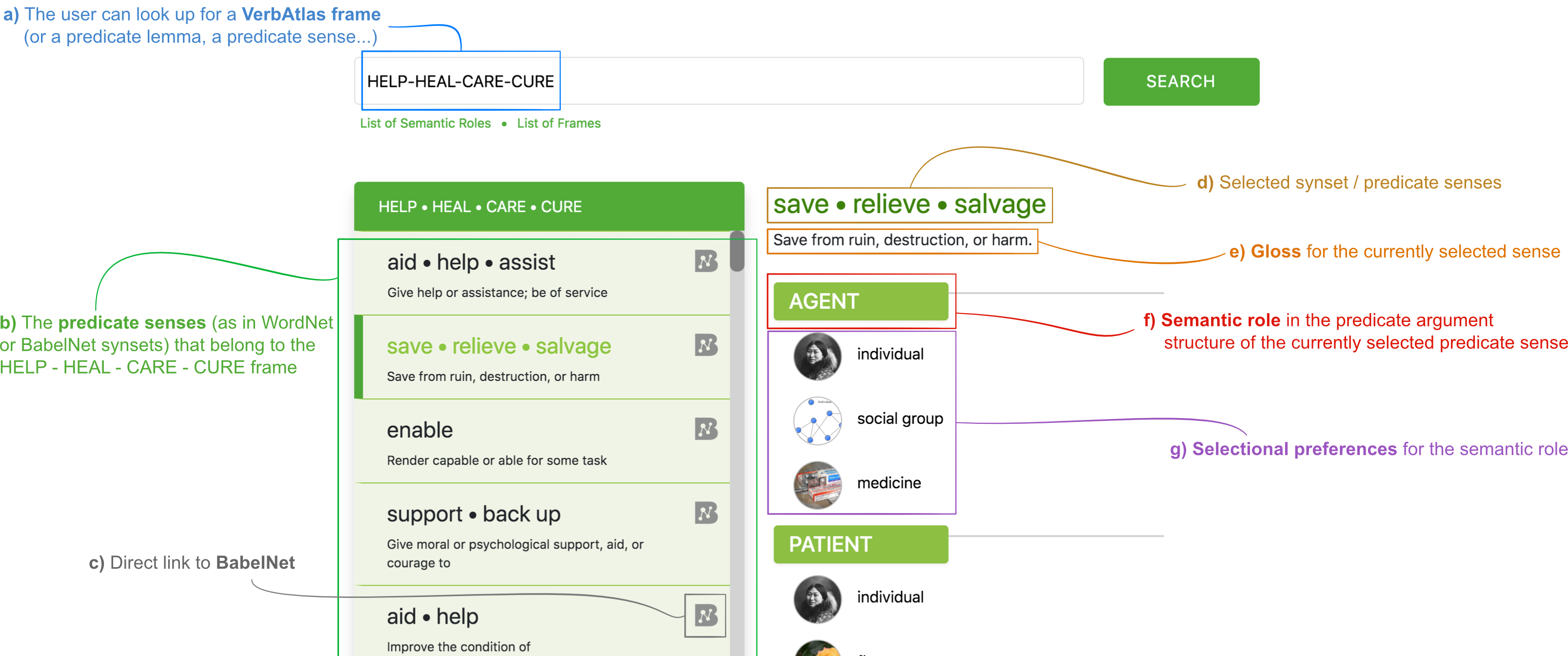

InVeRo: Making Semantic Role Labeling Accessible with Intelligible Verbs and Roles

Simone Conia, Fabrizio Brignone, Davide Zanfardino, Roberto Navigli