Coreferential Reasoning Learning for Language Representation

Deming Ye, Yankai Lin, Jiaju Du, Zhenghao Liu, Peng Li, Maosong Sun, Zhiyuan Liu

Question Answering Long Paper

Abstract:

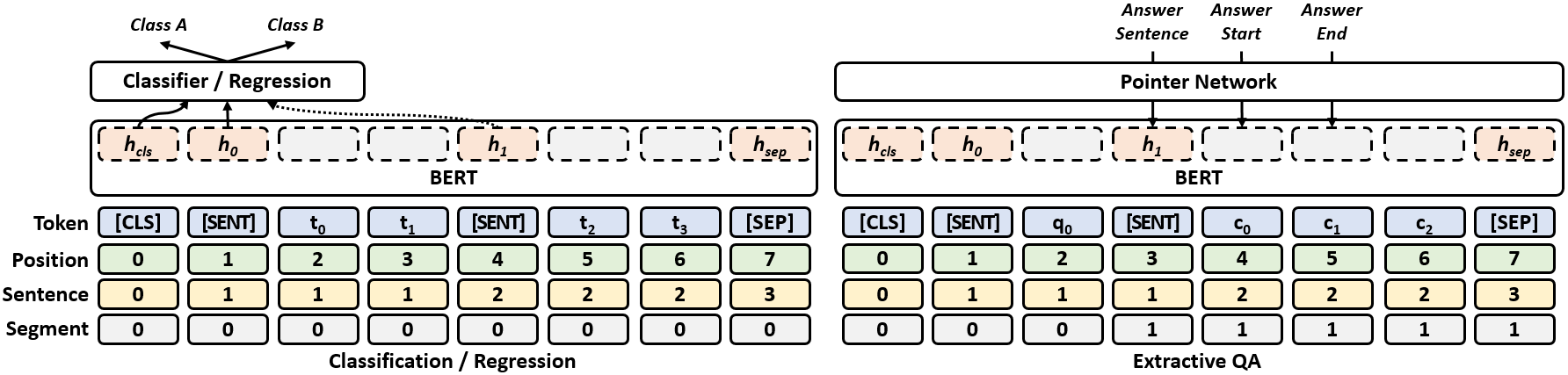

Language representation models such as BERT can effectively capture contextual semantic information from plain text, and have been shown to achieve promising results on many downstream NLP tasks with appropriate fine-tuning. However, most existing language representation models cannot explicitly handle coreference, which is essential to a coherent understanding of the whole discourse. To address this issue, we present CorefBERT, a novel language representation model that can capture coreferential relations in context. Experimental results show that, compared with existing baseline models, CorefBERT achieves consistent, significant improvements on various downstream NLP tasks that require coreferential reasoning, while maintaining comparable performance to previous models on other common NLP tasks. The source code and experiment details are available at https://github.com/thunlp/CorefBERT.

Connected Papers in EMNLP2020

Similar Papers

DualTKB: A Dual Learning Bridge between Text and Knowledge Base

Pierre Dognin, Igor Melnyk, Inkit Padhi, Cicero Nogueira dos Santos, Payel Das

SLM: Learning a Discourse Language Representation with Sentence Unshuffling

Haejun Lee, Drew A. Hudson, Kangwook Lee, Christopher D. Manning

Grounded Compositional Outputs for Adaptive Language Modeling

Nikolaos Pappas, Phoebe Mulcaire, Noah A. Smith