Q-learning with Language Model for Edit-based Unsupervised Summarization

Ryosuke Kohita, Akifumi Wachi, Yang Zhao, Ryuki Tachibana

Summarization Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

Unsupervised methods are promising for abstractive textsummarization in that the parallel corpora is not required. However, their performance is still far from being satisfied, therefore research on promising solutions is on-going. In this paper, we propose a new approach based on Q-learning with an edit-based summarization. The method combines two key modules to form an Editorial Agent and Language Model converter (EALM). The agent predicts edit actions (e.t., delete, keep, and replace), and then the LM converter deterministically generates a summary on the basis of the action signals. Q-learning is leveraged to train the agent to produce proper edit actions. Experimental results show that EALM delivered competitive performance compared with the previous encoder-decoder-based methods, even with truly zero paired data (i.e., no validation set). Defining the task as Q-learning enables us not only to develop a competitive method but also to make the latest techniques in reinforcement learning available for unsupervised summarization. We also conduct qualitative analysis, providing insights into future study on unsupervised summarizers.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

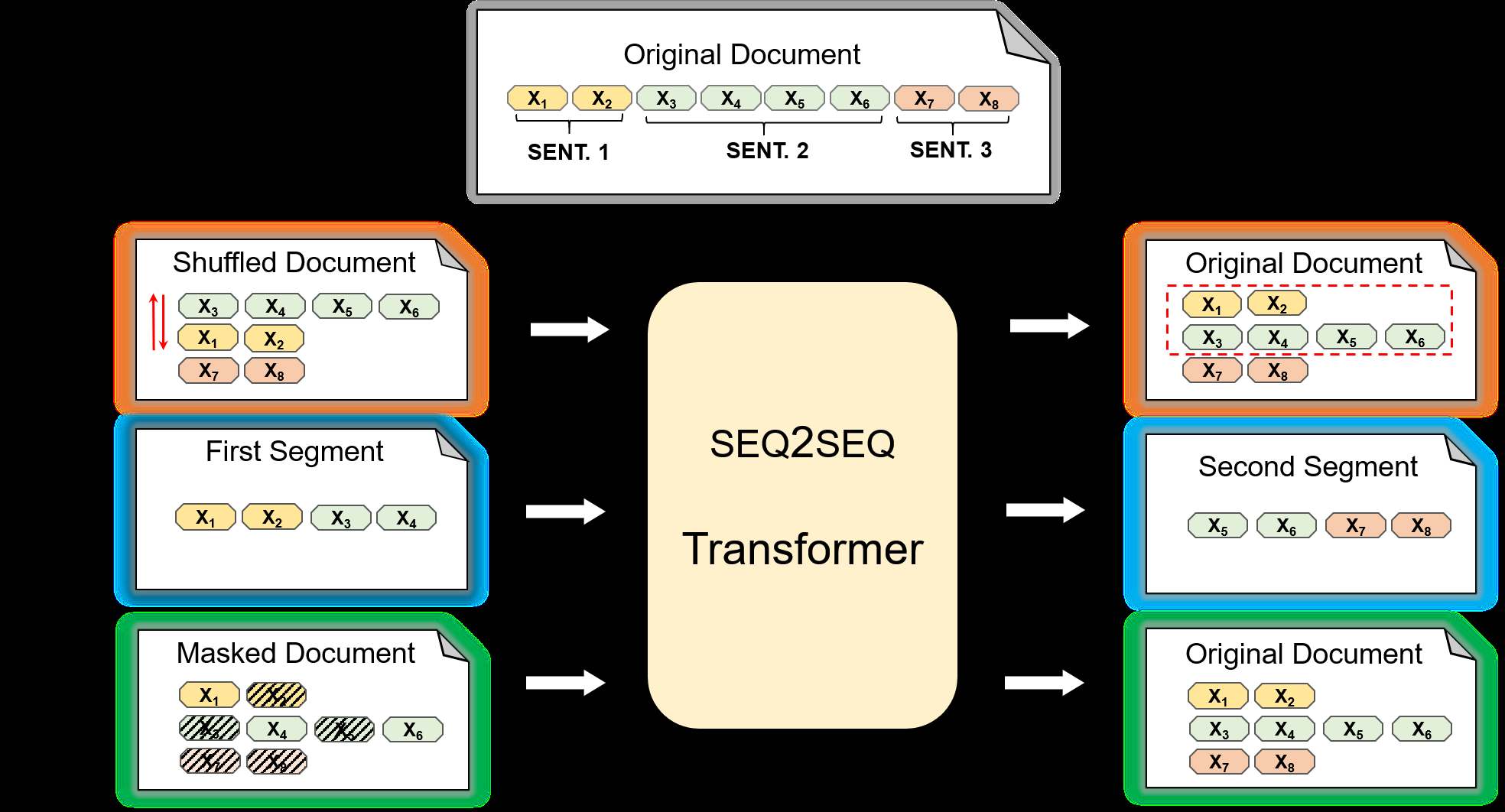

Pre-training for Abstractive Document Summarization by Reinstating Source Text

Yanyan Zou, Xingxing Zhang, Wei Lu, Furu Wei, Ming Zhou,

On Extractive and Abstractive Neural Document Summarization with Transformer Language Models

Jonathan Pilault, Raymond Li, Sandeep Subramanian, Chris Pal,

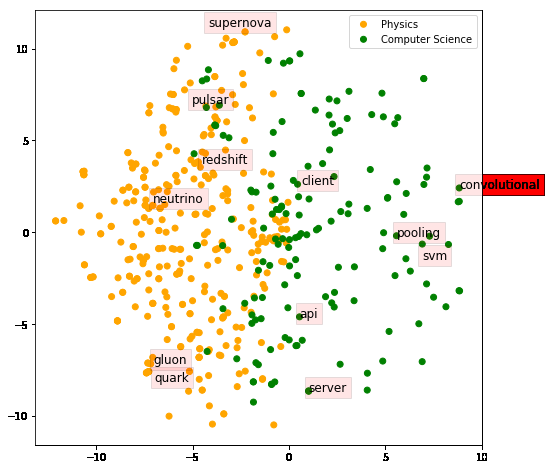

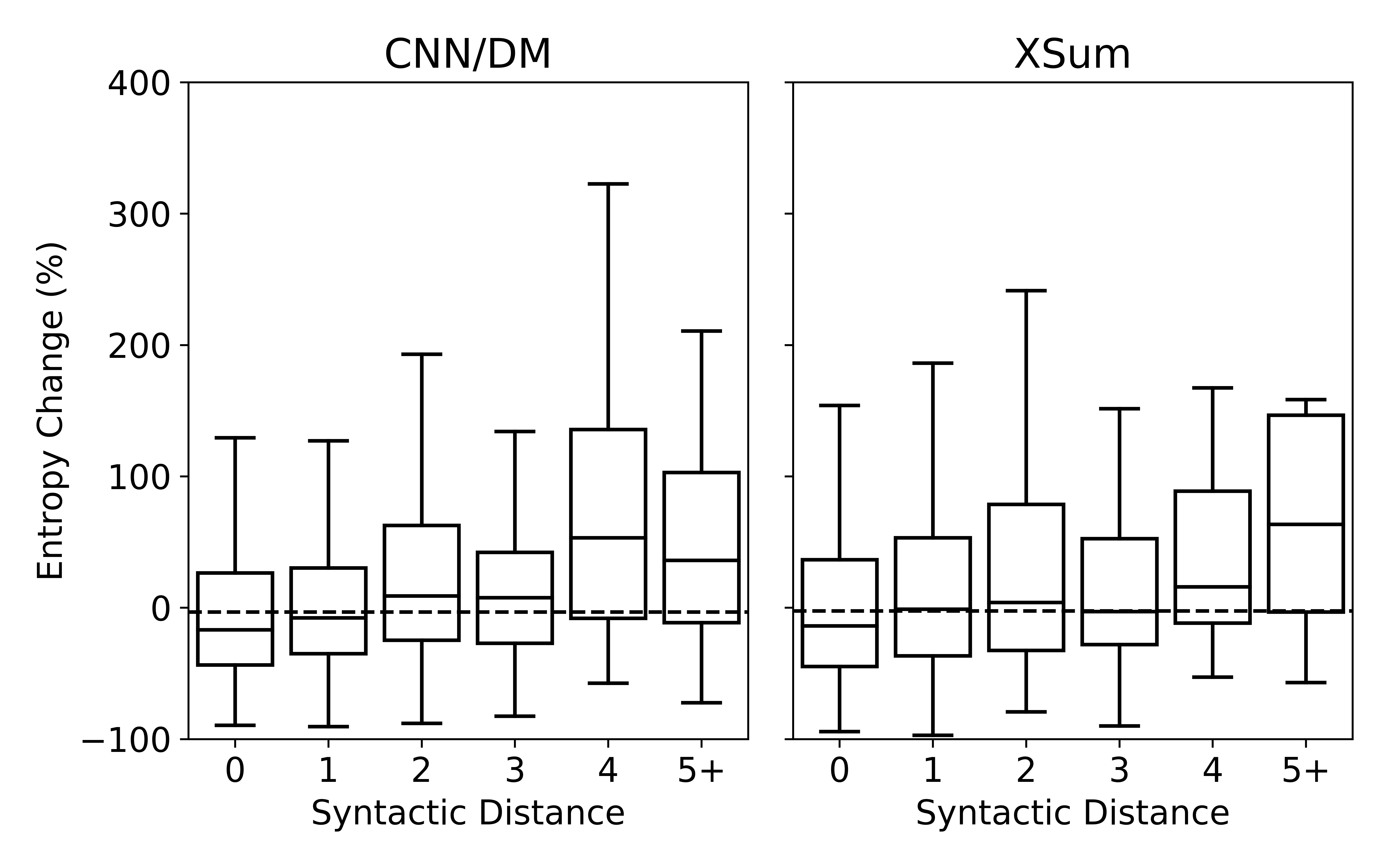

Understanding Neural Abstractive Summarization Models via Uncertainty

Jiacheng Xu, Shrey Desai, Greg Durrett,

What Have We Achieved on Text Summarization?

Dandan Huang, Leyang Cui, Sen Yang, Guangsheng Bao, Kun Wang, Jun Xie, Yue Zhang,