Interpretable Multi-dataset Evaluation for Named Entity Recognition

Jinlan Fu, Pengfei Liu, Graham Neubig

Syntax: Tagging, Chunking, and Parsing (Long Paper)

Abstract:

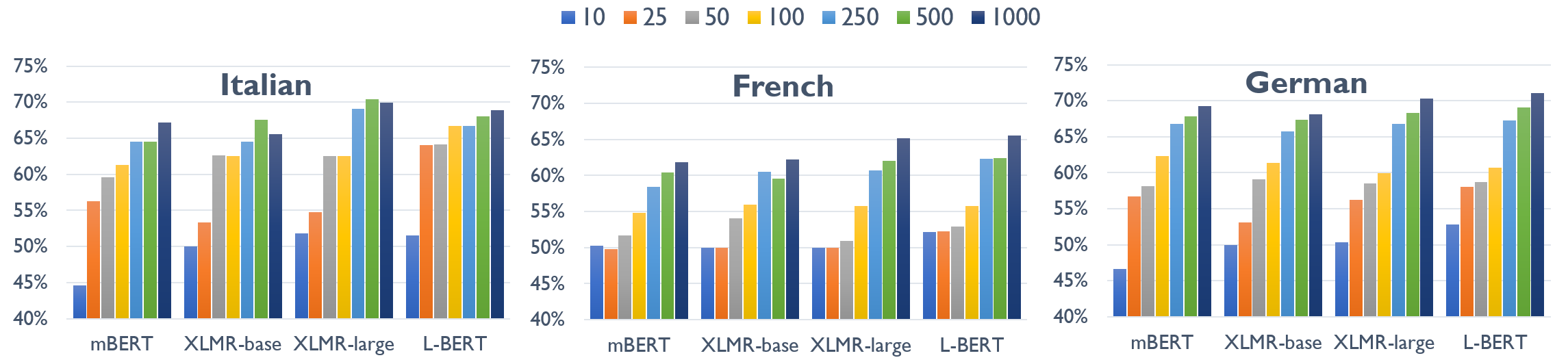

With the proliferation of models for natural language processing tasks, it has become even harder to understand the differences between models and their relative merits. Simply comparing holistic metrics such as accuracy, BLEU, or F1 does not tell us why or how particular methods perform differently, or how diverse datasets influence model design choices. In this paper, we present a general methodology for interpretable evaluation of the named entity recognition (NER) task. The proposed evaluation method enables us to interpret the differences between models and datasets, as well as the interplay between them, identifying the strengths and weaknesses of current systems. By making our analysis tool available, we make it easy for future researchers to run similar analyses and drive progress in this area: https://github.com/neulab/InterpretEval

Connected Papers in EMNLP2020

Similar Papers

Systematic Comparison of Neural Architectures and Training Approaches for Open Information Extraction

Patrick Hohenecker, Frank Mtumbuka, Vid Kocijan, Thomas Lukasiewicz

Counterfactual Generator: A Weakly-Supervised Method for Named Entity Recognition

Xiangji Zeng, Yunliang Li, Yuchen Zhai, Yin Zhang

XL-WiC: A Multilingual Benchmark for Evaluating Semantic Contextualization

Alessandro Raganato, Tommaso Pasini, Jose Camacho-Collados, Mohammad Taher Pilehvar