Systematic Comparison of Neural Architectures and Training Approaches for Open Information Extraction

Patrick Hohenecker, Frank Mtumbuka, Vid Kocijan, Thomas Lukasiewicz

Information Extraction Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

The goal of open information extraction (OIE) is to extract facts from natural language text, and to represent them as structured triples of the form <subject,predicate, object>. For example, given the sentence "Beethoven composed the Ode to Joy.", we are expected to extract the triple <Beethoven, composed, Ode to Joy>. In this work, we systematically compare different neural network architectures and training approaches, and improve the performance of the currently best models on the OIE16 benchmark (Stanovsky and Dagan, 2016) by 0.421 F1 score and 0.420 AUC-PR, respectively, in our experiments (i.e., by more than 200% in both cases). Furthermore, we show that appropriate problem and loss formulations often affect the performance more than the network architecture.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

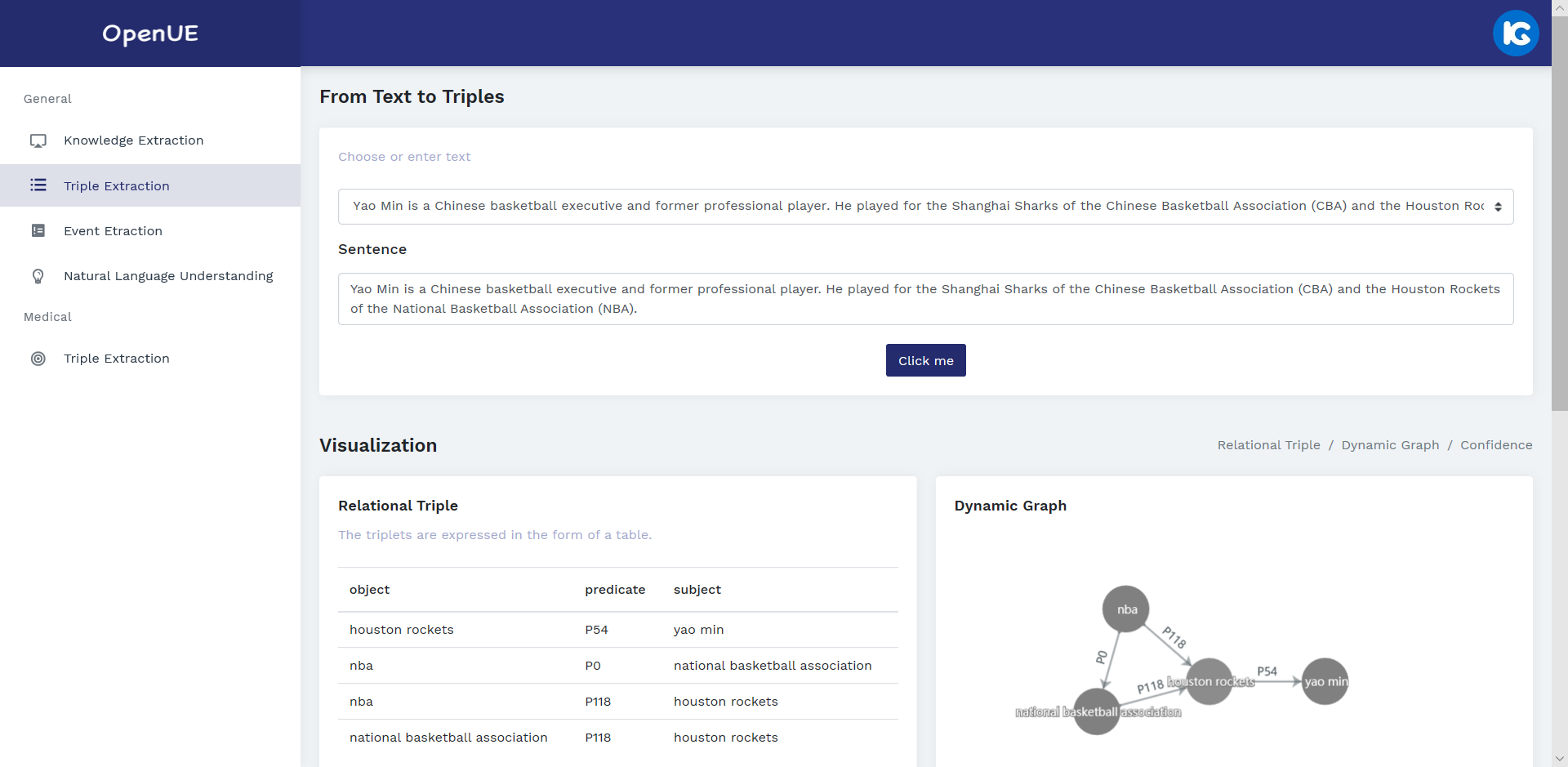

OpenUE: An Open Toolkit of Universal Extraction from Text

Ningyu Zhang, Shumin Deng, Zhen Bi, Haiyang Yu, Jiacheng Yang, Mosha Chen, Fei Huang, Wei Zhang, Huajun Chen,

Q-learning with Language Model for Edit-based Unsupervised Summarization

Ryosuke Kohita, Akifumi Wachi, Yang Zhao, Ryuki Tachibana,

DualTKB: A Dual Learning Bridge between Text and Knowledge Base

Pierre Dognin, Igor Melnyk, Inkit Padhi, Cicero Nogueira dos Santos, Payel Das,

Exposing Shallow Heuristics of Relation Extraction Models with Challenge Data

Shachar Rosenman, Alon Jacovi, Yoav Goldberg,