Multi-Stage Pre-training for Automated Chinese Essay Scoring

Wei Song, Kai Zhang, Ruiji Fu, Lizhen Liu, Ting Liu, Miaomiao Cheng

NLP Applications Long Paper


Abstract:

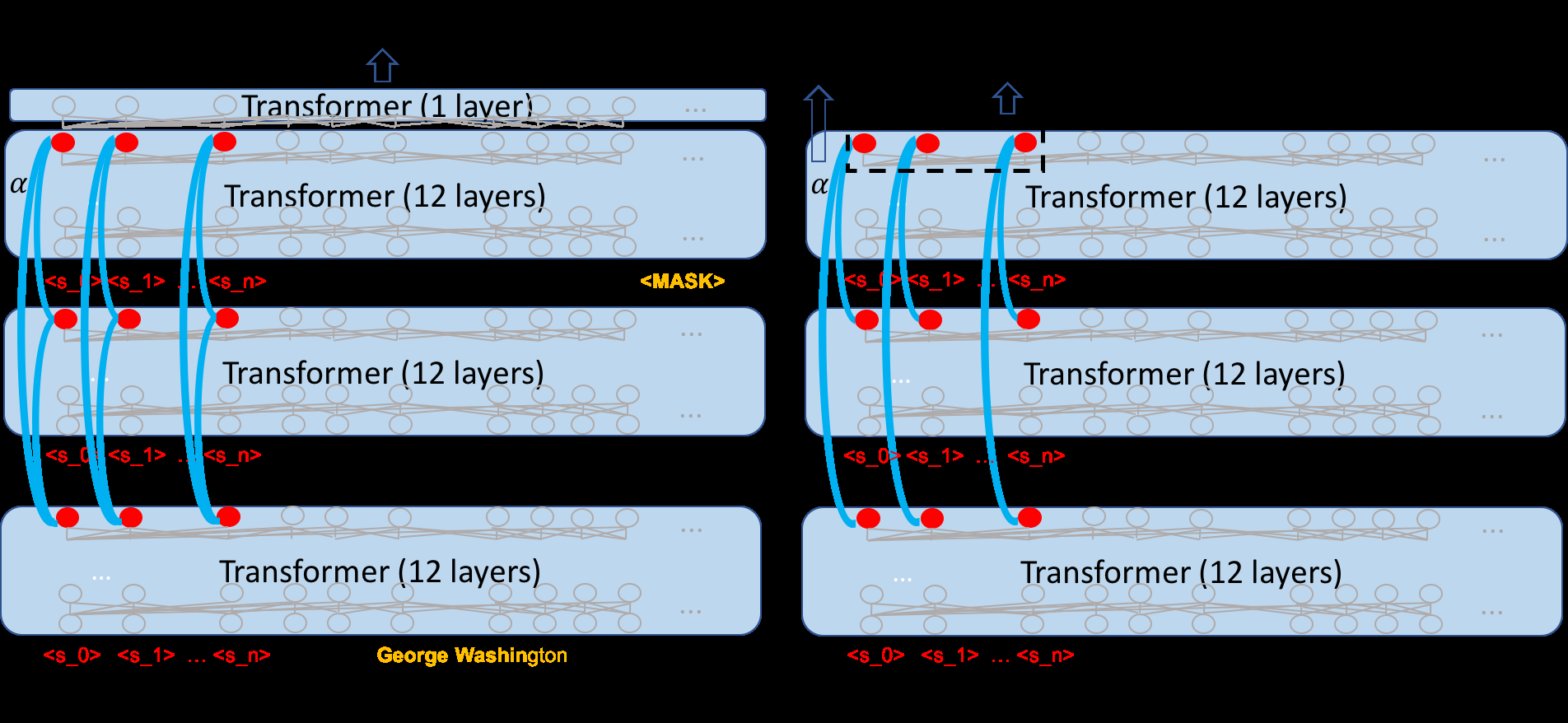

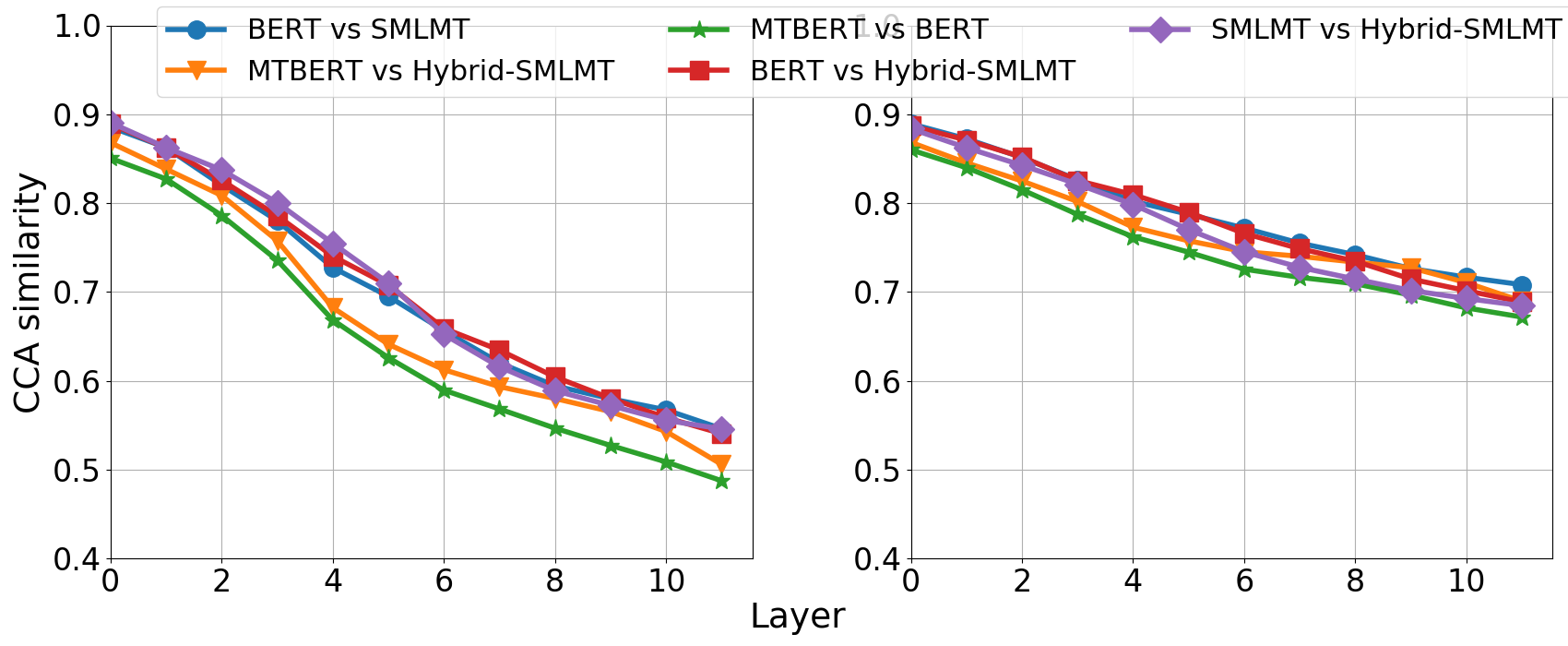

This paper proposes a pre-training based method for automated Chinese essay scoring. The method involves three components: weakly supervised pre-training, supervised cross-prompt fine-tuning, and supervised target-prompt fine-tuning. An essay scorer is first pre-trained on a large essay dataset that covers diverse topics and carries coarse ratings, i.e., good and poor, which serve as a kind of weak supervision. The pre-trained essay scorer is then fine-tuned on previously rated essays from existing prompts, which have the same score range as the target prompt and provide extra supervision. Finally, the scorer is fine-tuned on the target-prompt training data. The evaluation on four prompts shows that this method can improve a state-of-the-art neural essay scorer in terms of effectiveness and domain adaptation ability, while in-depth analysis also reveals its limitations.
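The three-stage schedule described in the abstract can be sketched as a chain of training runs in which each stage starts from the weights left by the previous one. The sketch below is only an illustration under loud assumptions: it swaps the paper's neural essay scorer for a toy linear model trained by SGD, and the features, the `quality` function, the `[0, 1]` score range, and all names (`train_stage`, `make_essays`, and so on) are hypothetical and do not come from the paper.

```python
import random

def predict(weights, features):
    """Linear score: dot product of weights and features."""
    return sum(w * x for w, x in zip(weights, features))

def train_stage(weights, data, lr=0.05, epochs=50):
    """One training stage: SGD on squared error, starting from `weights`."""
    w = list(weights)
    for _ in range(epochs):
        for features, target in data:
            err = predict(w, features) - target
            w = [wi - lr * err * xi for wi, xi in zip(w, features)]
    return w

def mse(weights, data):
    """Mean squared error of the scorer on a dataset."""
    return sum((predict(weights, f) - t) ** 2 for f, t in data) / len(data)

def make_essays(rng, n):
    """Hypothetical essays: three toy features plus a constant bias term."""
    return [[rng.random(), rng.random(), rng.random(), 1.0] for _ in range(n)]

def quality(features):
    """Hypothetical 'true' essay quality in [0, 1] (an assumption)."""
    return 0.2 * features[0] + 0.5 * features[1] + 0.3 * features[2]

rng = random.Random(0)

# Stage 1 data: large, diverse, coarse labels only (good = 1.0, poor = 0.0).
weak_data = [(f, 1.0 if quality(f) > 0.5 else 0.0) for f in make_essays(rng, 200)]
# Stage 2 data: rated essays from other prompts, same score range,
# with an assumed prompt-specific shift in the ratings.
cross_data = [(f, min(1.0, quality(f) + 0.1)) for f in make_essays(rng, 50)]
# Stage 3 data: a small rated set for the target prompt.
target_data = [(f, quality(f)) for f in make_essays(rng, 20)]

w = [0.0] * 4
w = train_stage(w, weak_data)           # weakly supervised pre-training
w = train_stage(w, cross_data)          # supervised cross-prompt fine-tuning
w_final = train_stage(w, target_data)   # supervised target-prompt fine-tuning

print("target-prompt MSE:", mse(w_final, target_data))
```

The point of the sketch is only the ordering: the same parameters are carried through progressively more specific and more reliable supervision, so the small target-prompt set refines a scorer that is already initialized rather than training one from scratch.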


Connected Papers in EMNLP 2020

Similar Papers

Cross-Thought for Sentence Encoder Pre-training

Shuohang Wang, Yuwei Fang, Siqi Sun, Zhe Gan, Yu Cheng, Jingjing Liu, Jing Jiang

Self-Supervised Meta-Learning for Few-Shot Natural Language Classification Tasks

Trapit Bansal, Rishikesh Jha, Tsendsuren Munkhdalai, Andrew McCallum

MultiCQA: Zero-Shot Transfer of Self-Supervised Text Matching Models on a Massive Scale

Andreas Rücklé, Jonas Pfeiffer, Iryna Gurevych