Modularized Transformer-based Ranking Framework

Luyu Gao, Zhuyun Dai, Jamie Callan

Information Retrieval and Text Mining, Long Paper

Abstract:

Recent innovations in Transformer-based ranking models have advanced the state-of-the-art in information retrieval. However, these Transformers are computationally expensive, and their opaque hidden states make it hard to understand the ranking process. In this work, we modularize the Transformer ranker into separate modules for text representation and interaction. We show how this design enables substantially faster ranking using offline pre-computed representations and light-weight online interactions. The modular design is also easier to interpret and sheds light on the ranking process in Transformer rankers.
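
The abstract describes splitting the ranker into representation modules, whose document side can be computed offline, and a lightweight online interaction module. The following is a toy sketch of that split under stated assumptions, not the authors' implementation: deterministic pseudo-random unit vectors (`embed_token`) stand in for Transformer encoders, and the interaction is assumed to be a single softmax attention from query tokens to document tokens. All names (`embed_token`, `represent`, `attention`, `interact`, `build_index`, `rank`, `top_attended`, `DIM`) are hypothetical.

```python
import math
import random
from typing import Dict, List, Tuple

Vector = List[float]
DIM = 8  # hypothetical embedding size for the toy encoder


def embed_token(token: str, dim: int = DIM) -> Vector:
    """Hypothetical stand-in for a Transformer encoder: a deterministic,
    unit-length pseudo-random vector seeded by the token string."""
    rng = random.Random(token)
    vec = [rng.uniform(-1.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def represent(text: str) -> List[Vector]:
    """Representation module: one vector per whitespace-separated token."""
    return [embed_token(tok) for tok in text.lower().split()]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention(q: Vector, doc_reps: List[Vector]) -> List[float]:
    """Softmax attention weights of one query vector over document vectors."""
    sims = [dot(q, d) for d in doc_reps]
    m = max(sims)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in sims]
    z = sum(exps)
    return [e / z for e in exps]


def interact(query_reps: List[Vector], doc_reps: List[Vector]) -> float:
    """Lightweight interaction module (assumed form): each query token attends
    over the document tokens, and the attention-weighted similarities are
    averaged across query tokens."""
    total = 0.0
    for q in query_reps:
        weights = attention(q, doc_reps)
        total += sum(w * dot(q, d) for w, d in zip(weights, doc_reps))
    return total / len(query_reps)


def build_index(corpus: Dict[str, str]) -> Dict[str, List[Vector]]:
    """Offline step: pre-compute document representations once and cache them."""
    return {doc_id: represent(text) for doc_id, text in corpus.items()}


def rank(query: str, index: Dict[str, List[Vector]]) -> List[Tuple[str, float]]:
    """Online step: encode only the short query, then score each cached document."""
    q_reps = represent(query)
    scored = [(doc_id, interact(q_reps, reps)) for doc_id, reps in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def top_attended(query: str, doc_text: str) -> List[Tuple[str, str]]:
    """Inspect the interaction step: for each query token, return the
    document token that receives its largest attention weight."""
    doc_tokens = doc_text.lower().split()
    doc_reps = represent(doc_text)
    pairs = []
    for tok, q in zip(query.lower().split(), represent(query)):
        weights = attention(q, doc_reps)
        best = max(range(len(weights)), key=weights.__getitem__)
        pairs.append((tok, doc_tokens[best]))
    return pairs


if __name__ == "__main__":
    corpus = {
        "d1": "transformer ranking models",
        "d2": "neural topic models",
    }
    index = build_index(corpus)  # offline, done once per corpus
    print(rank("transformer ranking", index))  # online, per query
    print(top_attended("ranking", corpus["d1"]))
```

In this sketch, `build_index` runs once per corpus, so the per-query cost is encoding the short query plus one cheap `interact` pass over cached vectors. Because the interaction is a separate, small module, its attention weights can be read off directly (as `top_attended` does), which is one simple way to inspect how a query is matched against a document.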

Connected Papers in EMNLP 2020

Similar Papers

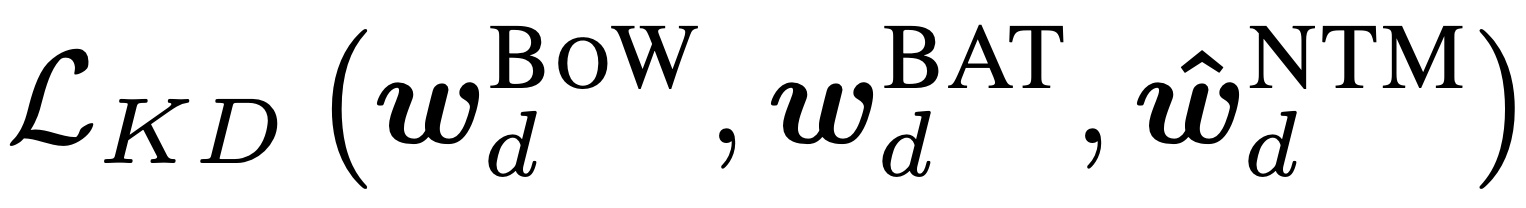

Improving Neural Topic Models using Knowledge Distillation

Alexander Miserlis Hoyle, Pranav Goel, Philip Resnik

Retrofitting Structure-aware Transformer Language Model for End Tasks

Hao Fei, Yafeng Ren, Donghong Ji

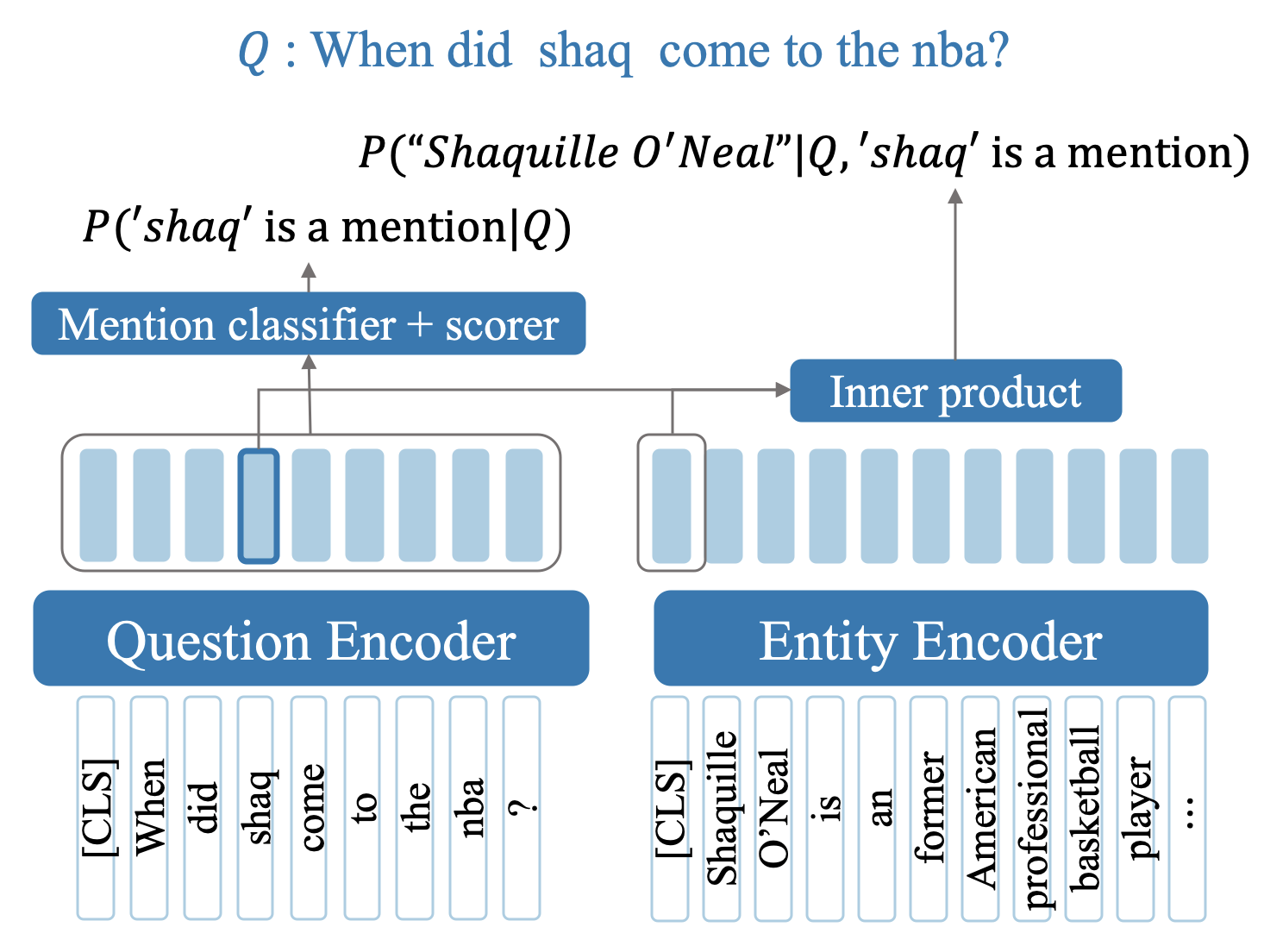

Efficient One-Pass End-to-End Entity Linking for Questions

Belinda Z. Li, Sewon Min, Srinivasan Iyer, Yashar Mehdad, Wen-tau Yih