Content Planning for Neural Story Generation with Aristotelian Rescoring

Seraphina Goldfarb-Tarrant, Tuhin Chakrabarty, Ralph Weischedel, Nanyun Peng

Language Generation Long Paper

Abstract:

Long-form narrative text generated from large language models manages a fluent impersonation of human writing, but only at the local sentence level, and lacks structure or global cohesion. We posit that many of the problems of story generation can be addressed via high-quality content planning, and present a system that focuses on how to learn good plot structures to guide story generation. We utilize a plot-generation language model along with an ensemble of rescoring models that each implement an aspect of good story-writing as detailed in Aristotle's Poetics. We find that stories written with our more principled plot structure are both more relevant to a given prompt and of higher quality than baselines that do not plan content, or that plan it in an unprincipled way.
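
As a rough illustration of the rescoring idea in the abstract, the sketch below reranks candidate plots by adding a weighted sum of rescorer outputs to a language-model log-probability. It is a minimal sketch under stated assumptions, not the authors' implementation: the names `Candidate`, `rerank`, `relevance`, and `diversity`, the weights, and the log-probability values are all hypothetical, and the two toy rescorers are simple stand-ins for the learned rescoring models the abstract refers to.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Candidate:
    plot: str
    lm_logprob: float  # assumed to come from a plot-generation LM; values here are made up


# A rescorer maps (prompt, plot) to a score; higher means better on that aspect.
Rescorer = Callable[[str, str], float]


def relevance(prompt: str, plot: str) -> float:
    """Toy stand-in: fraction of distinct prompt words that also appear in the plot."""
    prompt_words = set(prompt.lower().split())
    plot_words = set(plot.lower().split())
    return len(prompt_words & plot_words) / len(prompt_words) if prompt_words else 0.0


def diversity(prompt: str, plot: str) -> float:
    """Toy stand-in: ratio of unique tokens to all tokens, penalizing repetition."""
    tokens = plot.lower().split()
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def rerank(
    prompt: str,
    candidates: List[Candidate],
    rescorers: Dict[str, Rescorer],
    weights: Dict[str, float],
) -> List[Candidate]:
    """Sort candidates by LM log-probability plus a weighted sum of rescorer scores."""

    def combined(c: Candidate) -> float:
        bonus = sum(weights[name] * fn(prompt, c.plot) for name, fn in rescorers.items())
        return c.lm_logprob + bonus

    return sorted(candidates, key=combined, reverse=True)


# Hypothetical usage with hand-written candidates and weights.
prompt = "a knight and a dragon"
candidates = [
    Candidate("knight finds dragon . knight finds dragon", lm_logprob=-1.0),
    Candidate("knight seeks dragon . dragon spares knight", lm_logprob=-1.2),
]
rescorers = {"relevance": relevance, "diversity": diversity}
weights = {"relevance": 1.0, "diversity": 2.0}
ranked = rerank(prompt, candidates, rescorers, weights)
```

In this toy setup the repetitive plot has the higher language-model score, but the diversity term moves the less repetitive plot to the top. How the weights are chosen is left open here; the values above are picked by hand purely for illustration.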

Connected Papers in EMNLP2020

Similar Papers

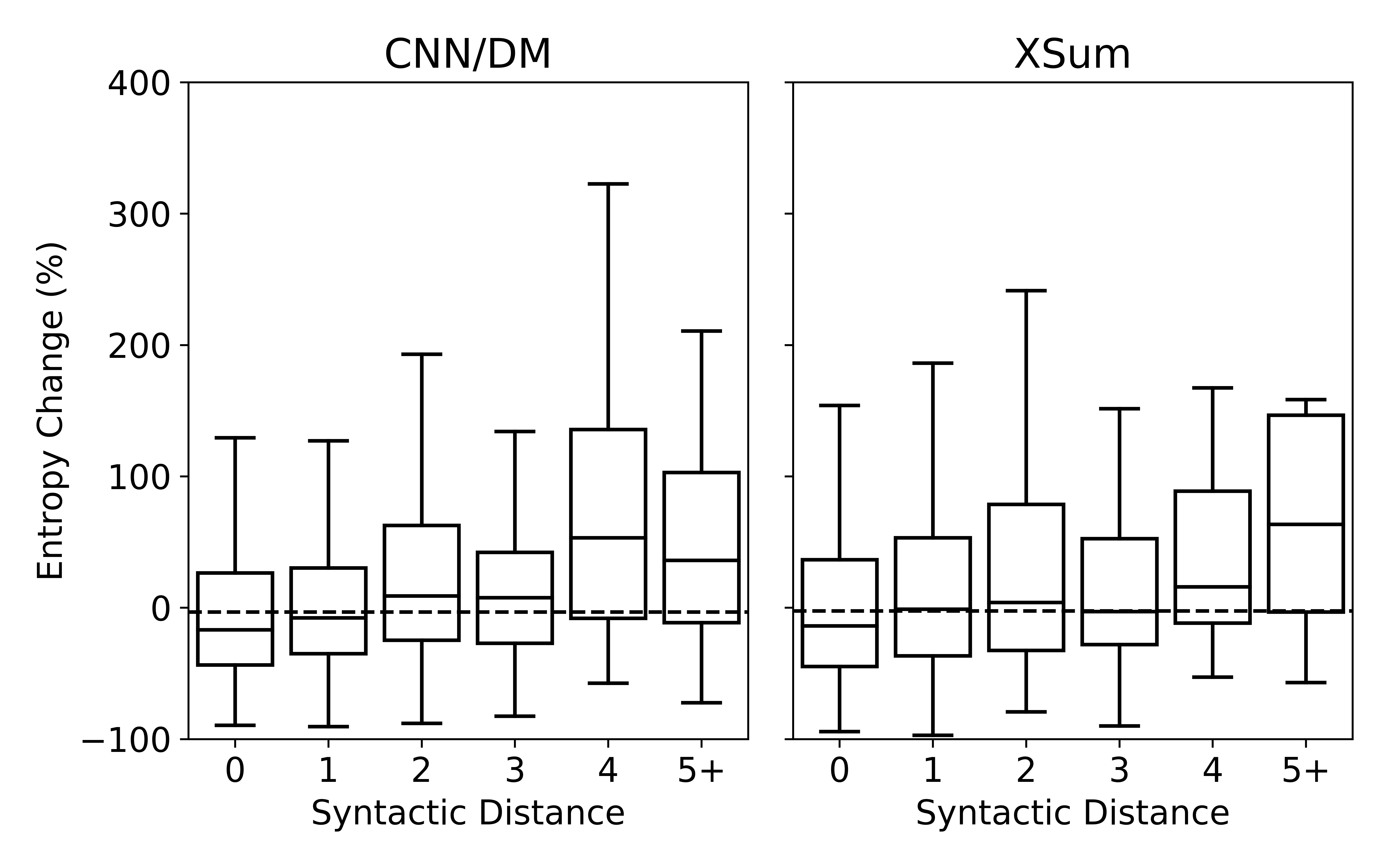

Understanding Neural Abstractive Summarization Models via Uncertainty

Jiacheng Xu, Shrey Desai, Greg Durrett,

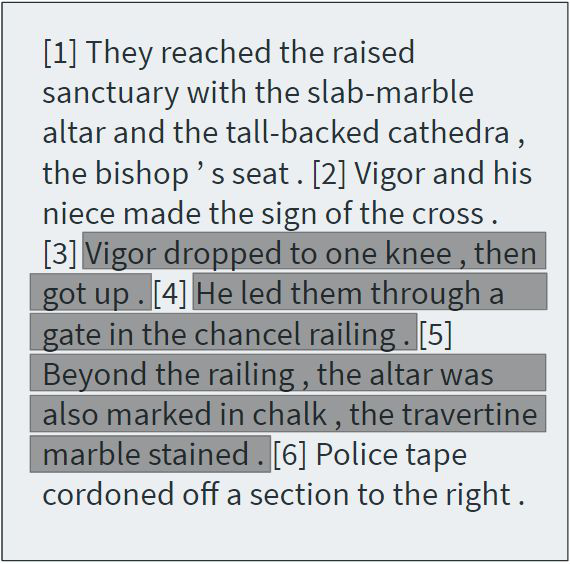

Reading Between the Lines: Exploring Infilling in Visual Narratives

Khyathi Raghavi Chandu, Ruo-Ping Dong, Alan W Black,