Learning Which Features Matter: RoBERTa Acquires a Preference for Linguistic Generalizations (Eventually)

Alex Warstadt, Yian Zhang, Xiaocheng Li, Haokun Liu, Samuel R. Bowman

Interpretability and Analysis of Models for NLP Long Paper

Abstract:

One reason pretraining on self-supervised linguistic tasks is effective is that it teaches models features that are helpful for language understanding. However, we want pretrained models to learn not only to represent linguistic features, but also to use those features preferentially during fine-tuning. With this goal in mind, we introduce a new English-language diagnostic set called MSGS (the Mixed Signals Generalization Set), which consists of 20 ambiguous binary classification tasks that we use to test whether a pretrained model prefers linguistic or surface generalizations during fine-tuning. We pretrain RoBERTa from scratch on quantities of data ranging from 1M to 1B words and compare the resulting models' performance on MSGS to that of the publicly available RoBERTa_BASE. We find that models can learn to represent linguistic features with little pretraining data, but require far more data to learn to prefer linguistic generalizations over surface ones. Eventually, with about 30B words of pretraining data, RoBERTa_BASE does demonstrate a linguistic bias with some regularity. We conclude that while self-supervised pretraining is an effective way to learn helpful inductive biases, there is likely room to improve the rate at which models learn which features matter.

Connected Papers in EMNLP2020

Similar Papers

Structural Supervision Improves Few-Shot Learning and Syntactic Generalization in Neural Language Models

Ethan Wilcox, Peng Qian, Richard Futrell, Ryosuke Kohita, Roger Levy, Miguel Ballesteros

Feature Adaptation of Pre-Trained Language Models across Languages and Domains with Robust Self-Training

Hai Ye, Qingyu Tan, Ruidan He, Juntao Li, Hwee Tou Ng, Lidong Bing

Self-Supervised Meta-Learning for Few-Shot Natural Language Classification Tasks

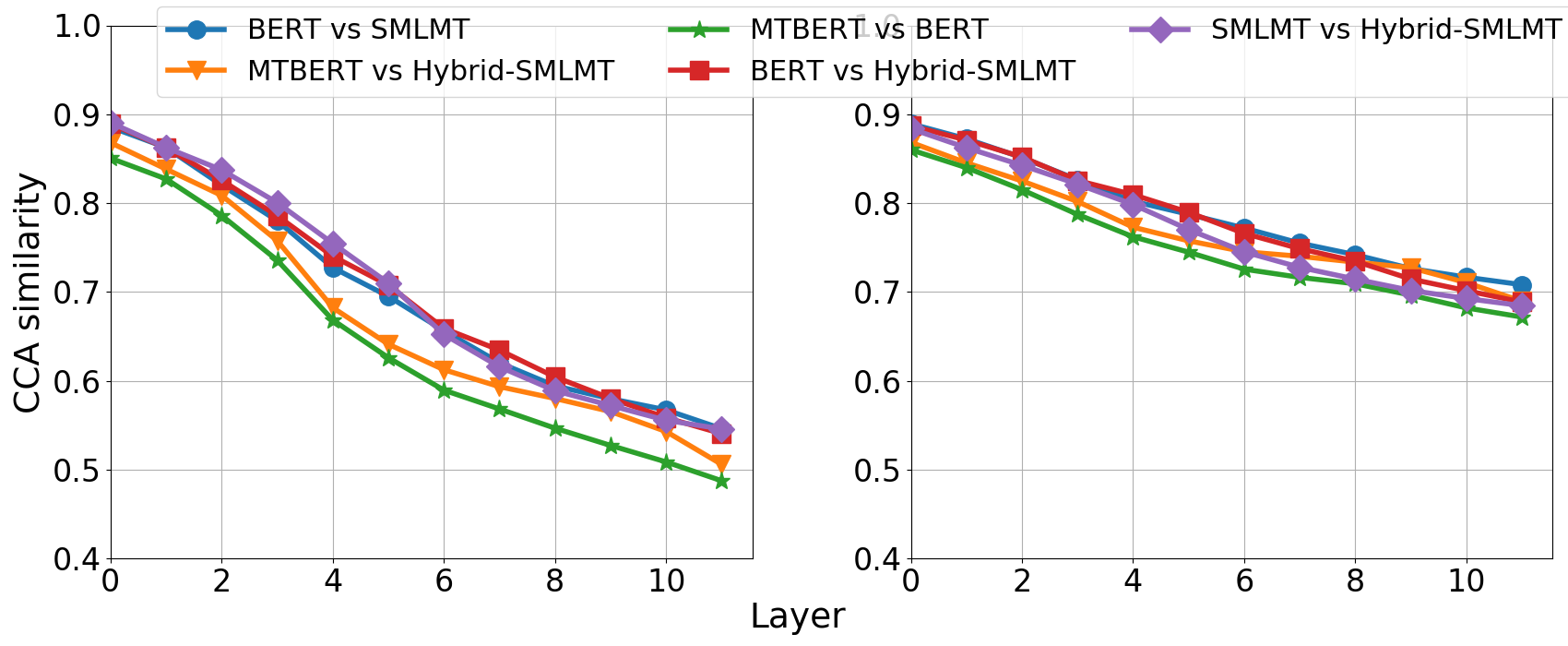

Trapit Bansal, Rishikesh Jha, Tsendsuren Munkhdalai, Andrew McCallum

Self-Induced Curriculum Learning in Self-Supervised Neural Machine Translation

Dana Ruiter, Josef van Genabith, Cristina España-Bonet