Evaluating and Characterizing Human Rationales

Samuel Carton, Anirudh Rathore, Chenhao Tan

Interpretability and Analysis of Models for NLP (Long Paper)

Abstract:

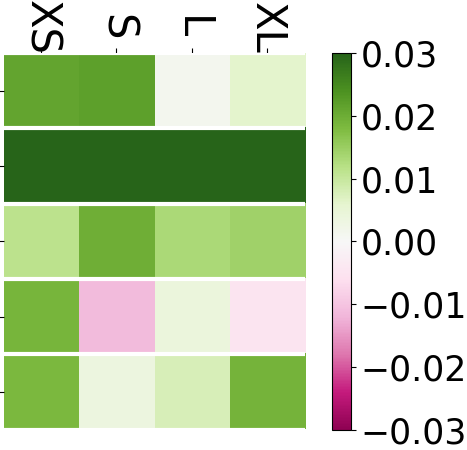

Two main approaches for evaluating the quality of machine-generated rationales are: 1) using human rationales as a gold standard; and 2) automated metrics based on how rationales affect model behavior. An open question, however, is how human rationales fare with these automatic metrics. Analyzing a variety of datasets and models, we find that human rationales do not necessarily perform well on these metrics. To unpack this finding, we propose improved metrics to account for model-dependent baseline performance. We then propose two methods to further characterize rationale quality, one based on model retraining and one on using "fidelity curves" to reveal properties such as irrelevance and redundancy. Our work leads to actionable suggestions for evaluating and characterizing rationales.

Connected Papers in EMNLP2020

Similar Papers

F1 is Not Enough! Models and Evaluation Towards User-Centered Explainable Question Answering

Hendrik Schuff, Heike Adel, Ngoc Thang Vu

What Does My QA Model Know? Devising Controlled Probes using Expert

Kyle Richardson, Ashish Sabharwal

Interpretable Multi-dataset Evaluation for Named Entity Recognition

Jinlan Fu, Pengfei Liu, Graham Neubig