Self-Supervised Knowledge Triplet Learning for Zero-Shot Question Answering

Pratyay Banerjee, Chitta Baral

Question Answering Long Paper


Abstract:

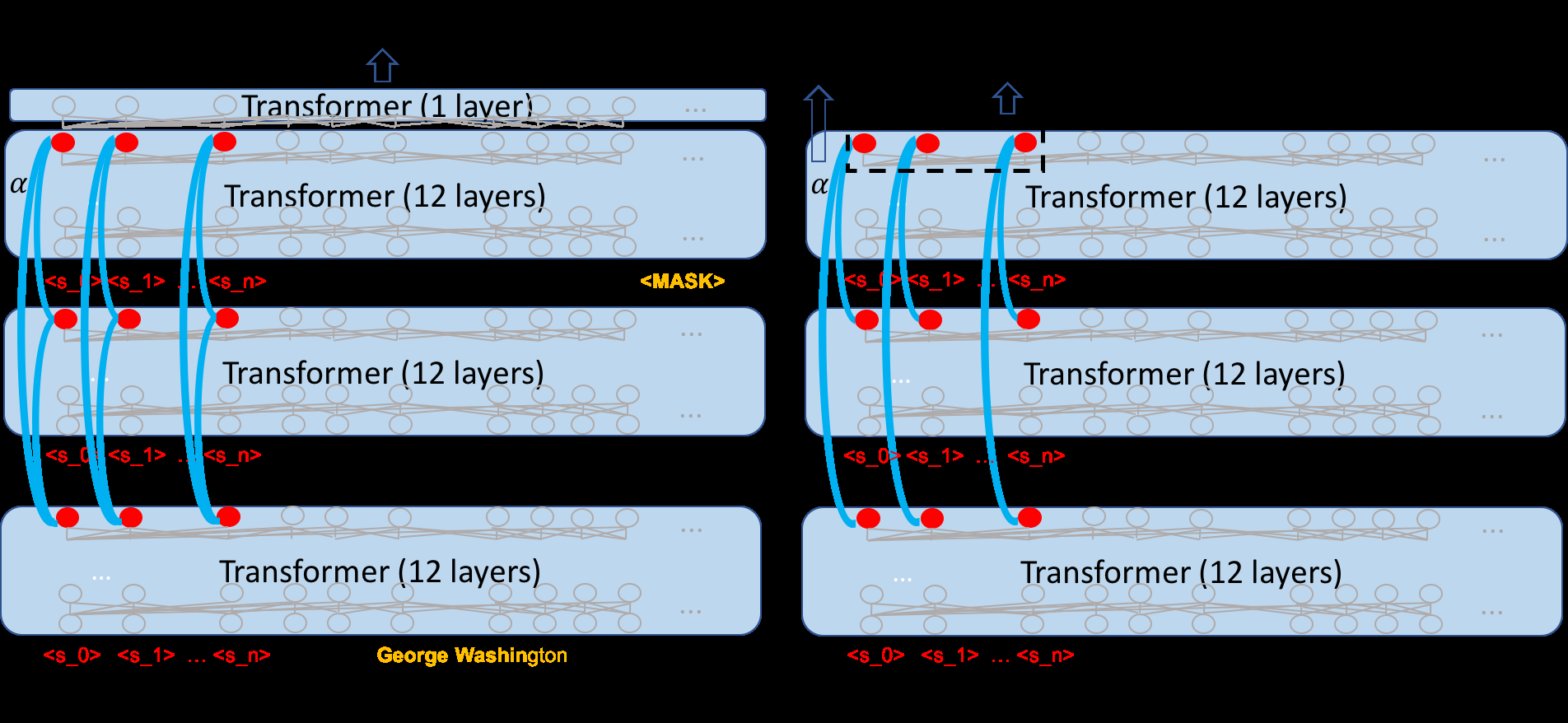

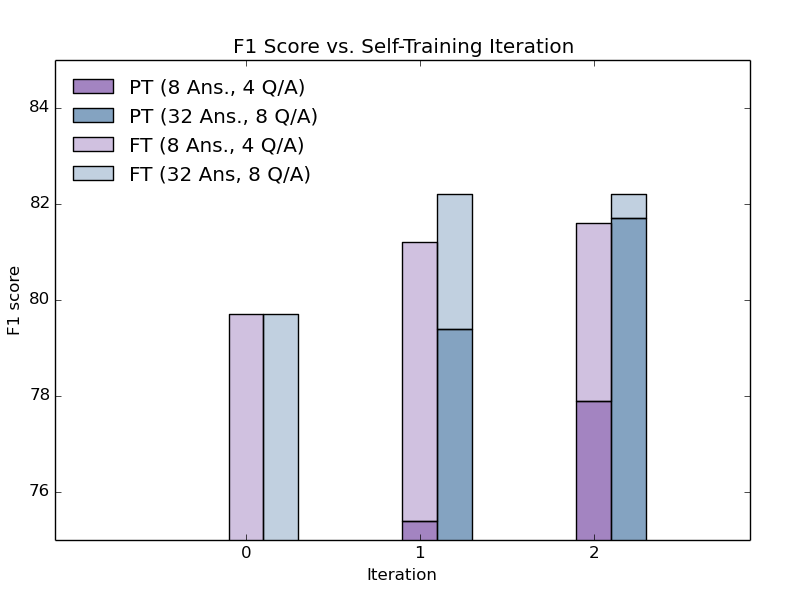

The aim of all Question Answering (QA) systems is to generalize to unseen questions. Current supervised methods rely on expensive data annotation. Moreover, such annotations can introduce unintended annotator bias, leading systems to focus more on the bias than on the actual task. This work proposes Knowledge Triplet Learning (KTL), a self-supervised task over knowledge graphs. We propose heuristics to create synthetic graphs for commonsense and scientific knowledge. We propose using KTL to perform zero-shot question answering, and our experiments show considerable improvements over large pre-trained transformer language models.
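As a minimal sketch, not the authors' implementation, a self-supervised task over knowledge-graph triples (h, r, t) can be read as: hide one element and ask a model to recover it from the other two, which yields three training examples per triple. The names `make_ktl_examples`, `pick_answer`, and `overlap_score` are hypothetical. Mapping a QA instance's context, question, and answer option onto (h, r, t) is an illustrative assumption, and `overlap_score` is a toy word-overlap stand-in for a trained model.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Triple = Tuple[str, str, str]


@dataclass(frozen=True)
class KTLExample:
    inputs: Tuple[str, str]  # the two observed elements of the triple
    target: str              # the element the model must recover
    masked_slot: str         # which slot was hidden: "h", "r", or "t"


def make_ktl_examples(triples: List[Triple]) -> List[KTLExample]:
    """Turn each (h, r, t) triple into three self-supervised examples."""
    examples: List[KTLExample] = []
    for h, r, t in triples:
        examples.append(KTLExample((h, r), t, "t"))  # (h, r) -> t
        examples.append(KTLExample((r, t), h, "h"))  # (r, t) -> h
        examples.append(KTLExample((h, t), r, "r"))  # (h, t) -> r
    return examples


def overlap_score(h: str, r: str, t: str) -> int:
    """Toy stand-in for a trained scorer: counts shared lowercase words."""
    observed = set(f"{h} {r}".lower().split())
    return len(observed & set(t.lower().split()))


def pick_answer(context: str, question: str, options: List[str],
                score: Callable[[str, str, str], float]) -> str:
    """Zero-shot QA (assumed mapping): read (context, question, option) as (h, r, t)."""
    # max() keeps the first option on ties.
    return max(options, key=lambda option: score(context, question, option))


if __name__ == "__main__":
    for ex in make_ktl_examples([("a ladder", "is used for", "reaching high places")]):
        print(ex.masked_slot, ex.inputs, "->", ex.target)
    print(pick_answer("you need to reach high places", "what is a ladder used for?",
                      ["reaching high places", "cooking food"], overlap_score))
```

No QA labels are needed at any point: the scorer is trained only on examples derived from the graph, then reused to rank answer options, which is what makes the setting zero-shot.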

Connected Papers in EMNLP2020

Similar Papers

Cross-Thought for Sentence Encoder Pre-training

Shuohang Wang, Yuwei Fang, Siqi Sun, Zhe Gan, Yu Cheng, Jingjing Liu, Jing Jiang

Unsupervised Adaptation of Question Answering Systems via Generative Self-training

Steven Rennie, Etienne Marcheret, Neil Mallinar, David Nahamoo, Vaibhava Goel

Learning a Cost-Effective Annotation Policy for Question Answering

Bernhard Kratzwald, Stefan Feuerriegel, Huan Sun

DualTKB: A Dual Learning Bridge between Text and Knowledge Base

Pierre Dognin, Igor Melnyk, Inkit Padhi, Cicero Nogueira dos Santos, Payel Das