Supertagging Combinatory Categorial Grammar with Attentive Graph Convolutional Networks

Yuanhe Tian, Yan Song, Fei Xia

Syntax: Tagging, Chunking, and Parsing (Short Paper)

Abstract:

Supertagging is conventionally regarded as an important task for combinatory categorial grammar (CCG) parsing, and effective modeling of contextual information is central to it. However, existing studies have made limited efforts to leverage contextual features beyond applying powerful encoders (e.g., bi-LSTM). In this paper, we propose attentive graph convolutional networks to enhance neural CCG supertagging by leveraging contextual information in a new way. Specifically, we build the graph from chunks (n-grams) extracted from a lexicon and apply attention over the graph, so that word pairs from contexts within and across chunks are weighted differently in the model and contribute to supertagging accordingly. Experiments on CCGbank demonstrate that our approach outperforms all previous studies in both supertagging and parsing. Further analyses illustrate how each component of our approach helps it learn discriminatively from word pairs to enhance CCG supertagging.
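The graph construction and attention step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names (`find_chunks`, `build_adjacency`, `edge_attention`), the toy lexicon, and the use of a dot-product softmax are all assumptions made for illustration. Only in-chunk edges and self-loops are built here; the abstract does not say how cross-chunk word pairs are connected, so that part is left out.

```python
import math


def find_chunks(words, lexicon, max_n=3):
    """Return (start, end) spans, end exclusive, of n-grams present in the lexicon."""
    spans = []
    for n in range(2, max_n + 1):
        for start in range(len(words) - n + 1):
            if " ".join(words[start:start + n]) in lexicon:
                spans.append((start, start + n))
    return spans


def build_adjacency(num_words, spans):
    """Link every pair of words inside the same chunk; every word keeps a self-loop."""
    adj = [[1 if i == j else 0 for j in range(num_words)] for i in range(num_words)]
    for start, end in spans:
        for i in range(start, end):
            for j in range(start, end):
                adj[i][j] = 1
    return adj


def edge_attention(hidden, adj):
    """Softmax over dot-product scores, restricted to the edges in adj (assumed scoring)."""
    weights = []
    for i, row in enumerate(adj):
        # Score only the neighbours of word i; non-neighbours get no score.
        scores = [
            sum(a * b for a, b in zip(hidden[i], hidden[j])) if connected else None
            for j, connected in enumerate(row)
        ]
        # Subtract the maximum score for numerical stability.
        top = max(s for s in scores if s is not None)
        exps = [math.exp(s - top) if s is not None else 0.0 for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Hypothetical usage: a four-word sentence and a one-entry lexicon.
words = "the stock market fell".split()
adj = build_adjacency(len(words), find_chunks(words, {"stock market"}))
hidden = [[1.0, 0.0], [0.5, 0.5], [0.5, 0.5], [0.0, 1.0]]  # toy word vectors
weights = edge_attention(hidden, adj)
```

In this sketch, each row of `weights` sums to one and is zero wherever the graph has no edge, so a graph convolution layer that aggregates neighbours with these weights would weight word pairs differently, which is the idea the abstract describes.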

Connected Papers in EMNLP 2020

Similar Papers

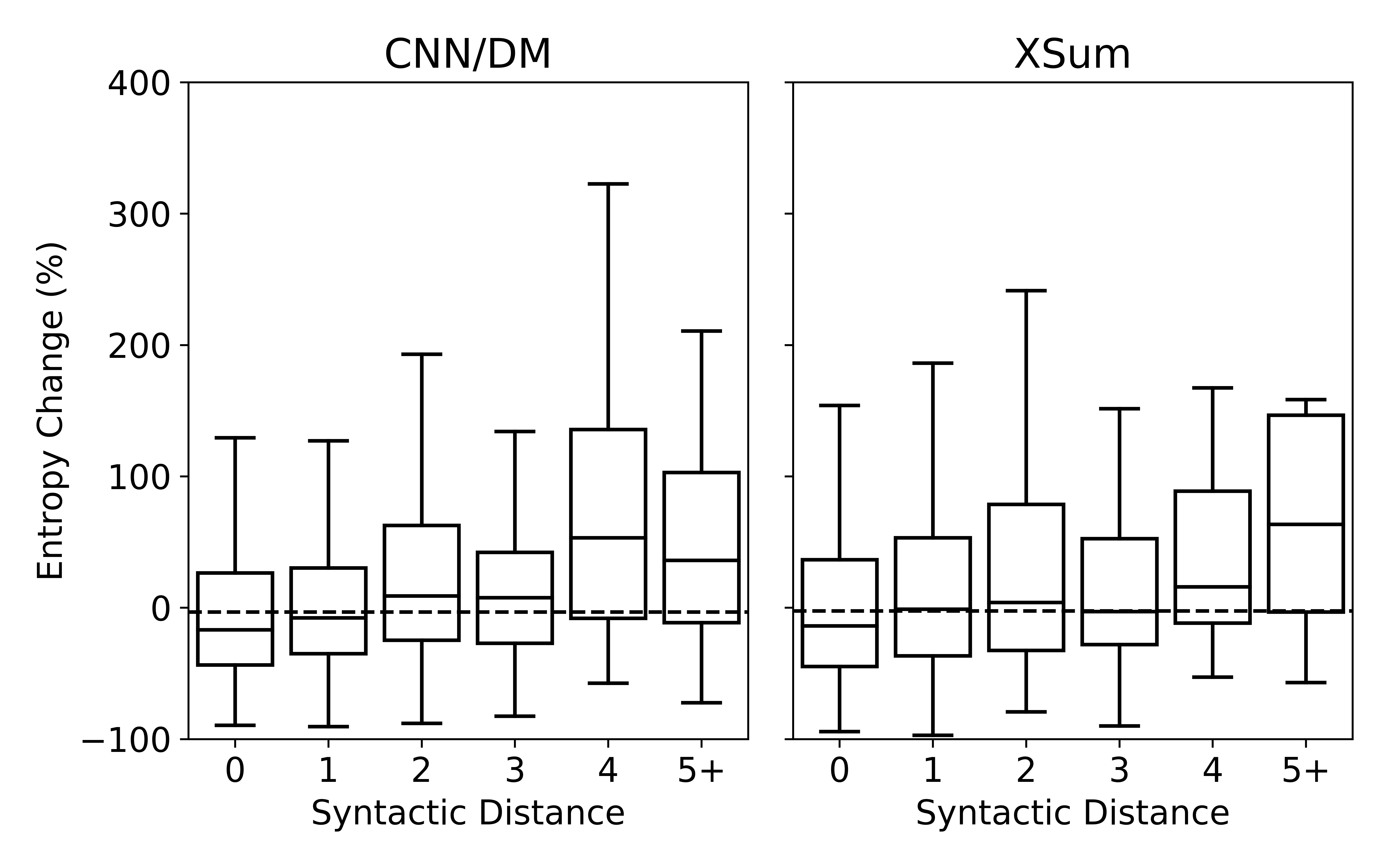

Understanding Neural Abstractive Summarization Models via Uncertainty

Jiacheng Xu, Shrey Desai, Greg Durrett

On the Role of Supervision in Unsupervised Constituency Parsing

Haoyue Shi, Karen Livescu, Kevin Gimpel

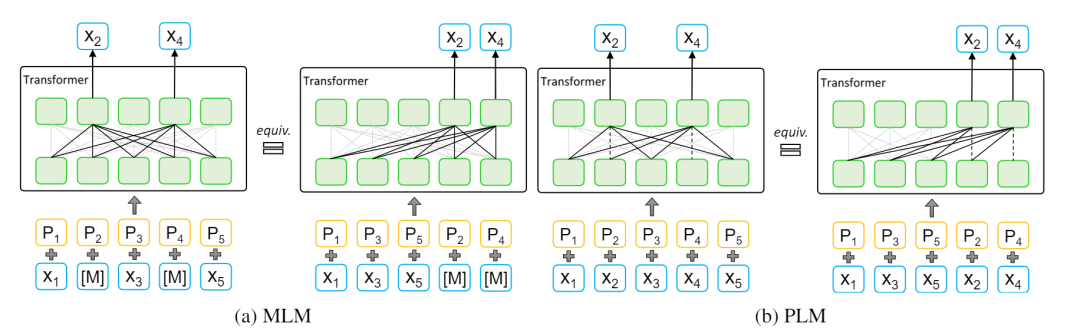

Syntactic Structure Distillation Pretraining for Bidirectional Encoders

Adhiguna Kuncoro, Lingpeng Kong, Daniel Fried, Dani Yogatama, Laura Rimell, Chris Dyer, Phil Blunsom

Modeling Content Importance for Summarization with Pre-trained Language Models

Liqiang Xiao, Lu Wang, Hao He, Yaohui Jin