Modeling Content Importance for Summarization with Pre-trained Language Models

Liqiang Xiao, Lu Wang, Hao He, Yaohui Jin

Summarization Short Paper

Abstract:

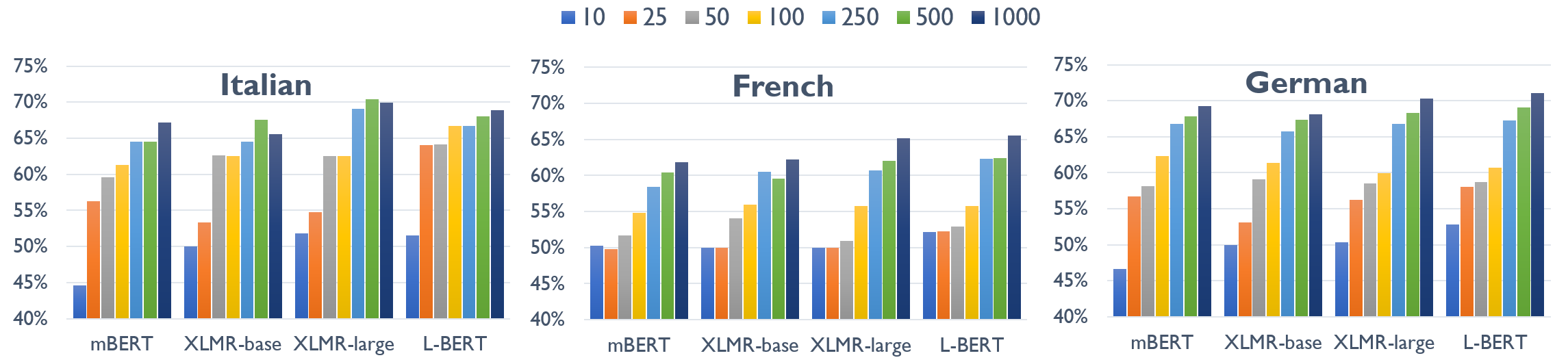

Modeling content importance is an essential yet challenging task for summarization. Previous work is mostly based on statistical methods that estimate word-level salience and do not consider semantics or larger context when quantifying importance. It is thus hard for these methods to generalize to semantic units spanning longer text. In this work, we apply information theory on top of pre-trained language models and define importance in terms of information amount. This definition considers both semantics and context when evaluating the importance of each semantic unit. With the help of pre-trained language models, it easily generalizes to different kinds of semantic units, such as n-grams or sentences. Experiments on the CNN/Daily Mail and New York Times datasets demonstrate that our method models content importance better than prior work, as measured by F1 and ROUGE scores.
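The abstract does not state the exact formulation, so the following is only a minimal sketch of one common information-theoretic reading: the information amount of a semantic unit is its surprisal, the negative log-probability of the unit given its context. The smoothed bigram model here is a toy stand-in for a pre-trained language model, and the names `build_bigram_model` and `information_amount` are hypothetical, not taken from the paper.

```python
import math
from collections import Counter


def build_bigram_model(tokens):
    """Toy stand-in for a pre-trained language model (assumption for illustration)."""
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    vocab_size = len(unigrams)

    def prob(token, prev):
        # Add-one smoothing keeps unseen (prev, token) pairs at non-zero probability.
        return (bigrams[(prev, token)] + 1) / (unigrams[prev] + vocab_size)

    return prob


def information_amount(unit, context_prev, prob):
    """Surprisal in bits of a token span, conditioned on the preceding token."""
    bits = 0.0
    prev = context_prev
    for token in unit:
        bits += -math.log2(prob(token, prev))
        prev = token
    return bits


corpus = "the cat sat on the mat the cat ate the fish".split()
prob = build_bigram_model(corpus)

# A continuation seen often after "the" carries less information than a rarer one.
common = information_amount(["cat"], "the", prob)
rare = information_amount(["fish"], "the", prob)
# The same function scores longer units (n-grams) by summing token surprisals.
span = information_amount(["cat", "sat"], "the", prob)
```

Under this reading, units that the model finds harder to predict from context score as more informative, and the same scoring applies unchanged to single tokens, n-grams, or whole sentences.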

Connected Papers in EMNLP2020

Similar Papers

Q-learning with Language Model for Edit-based Unsupervised Summarization

Ryosuke Kohita, Akifumi Wachi, Yang Zhao, Ryuki Tachibana

XL-WiC: A Multilingual Benchmark for Evaluating Semantic Contextualization

Alessandro Raganato, Tommaso Pasini, Jose Camacho-Collados, Mohammad Taher Pilehvar

Neural Extractive Summarization with Hierarchical Attentive Heterogeneous Graph Network

Ruipeng Jia, Yanan Cao, Hengzhu Tang, Fang Fang, Cong Cao, Shi Wang

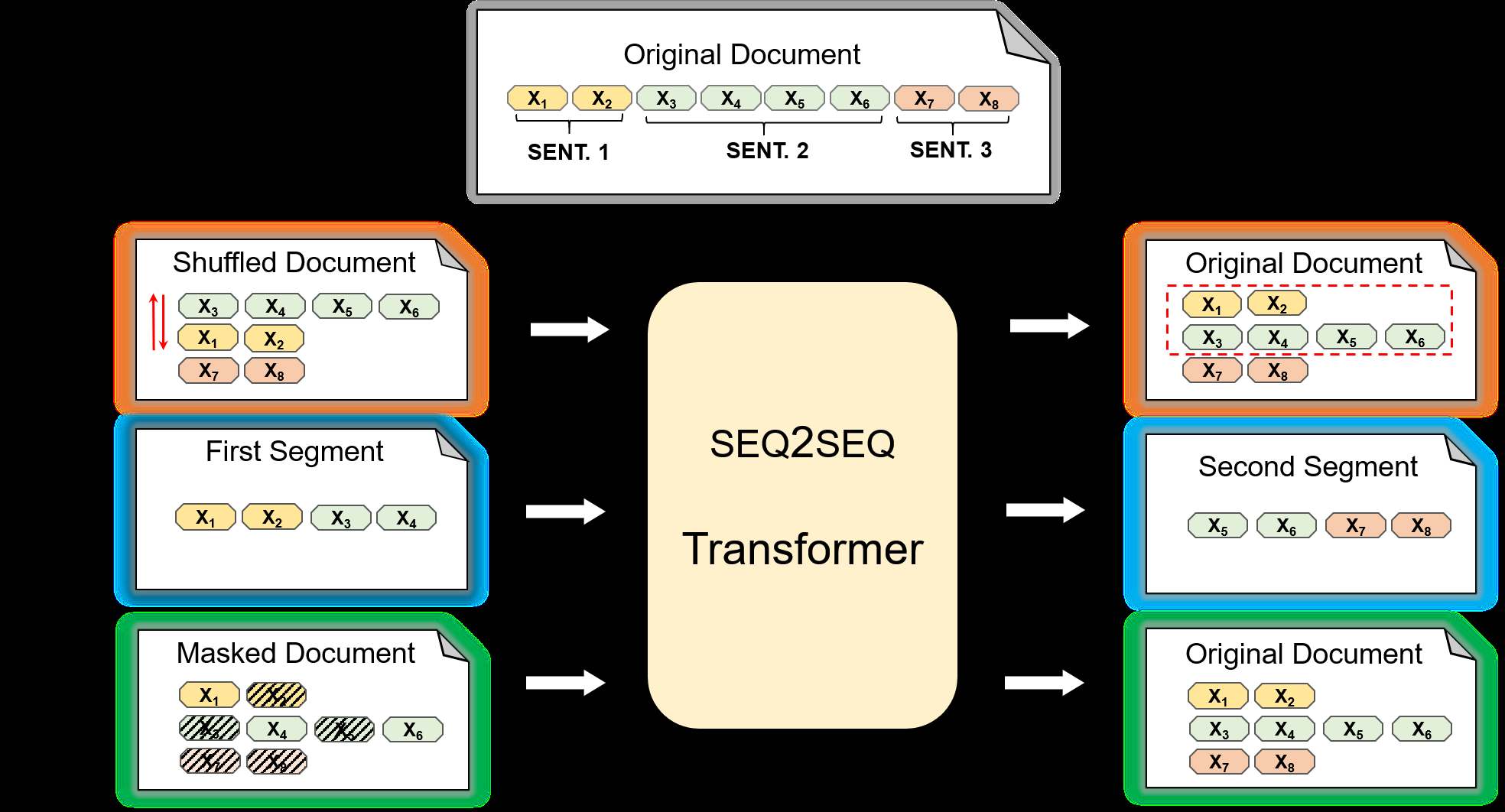

Pre-training for Abstractive Document Summarization by Reinstating Source Text

Yanyan Zou, Xingxing Zhang, Wei Lu, Furu Wei, Ming Zhou