On Losses for Modern Language Models

Stéphane Aroca-Ouellette, Frank Rudzicz

Machine Learning for NLP Long Paper

Abstract:

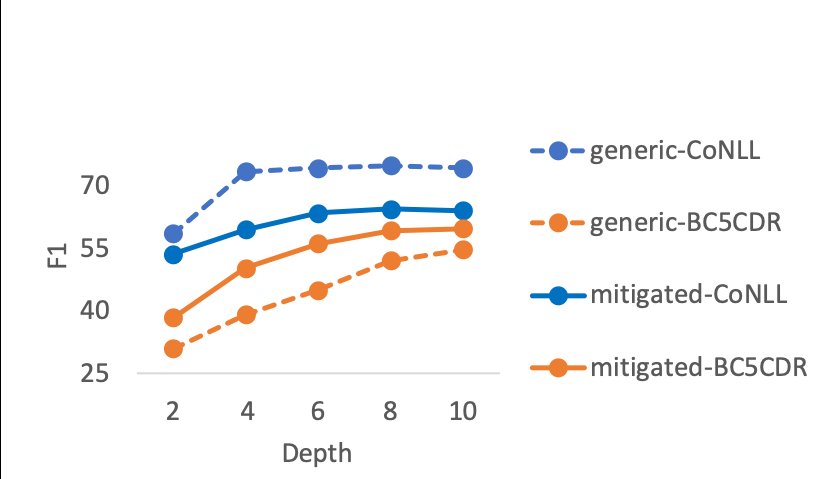

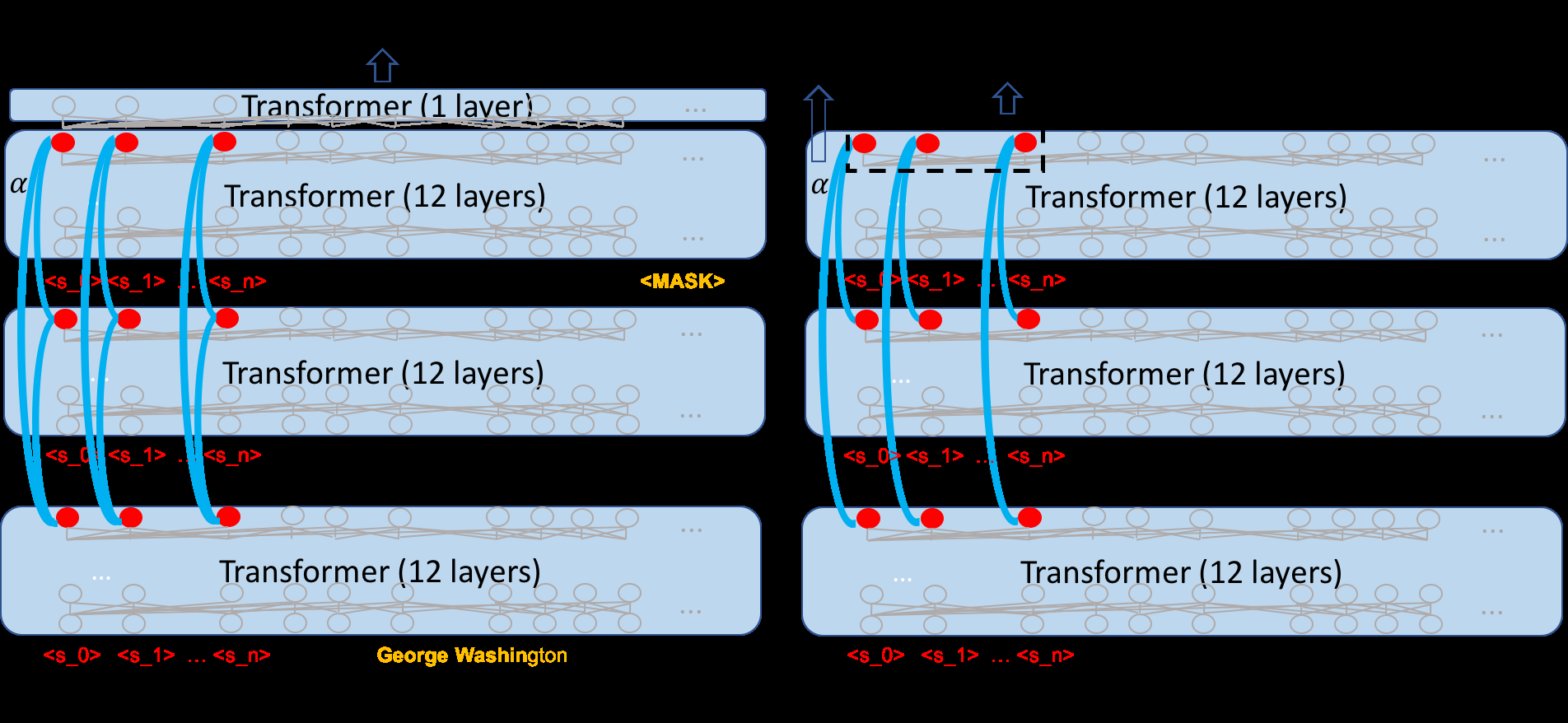

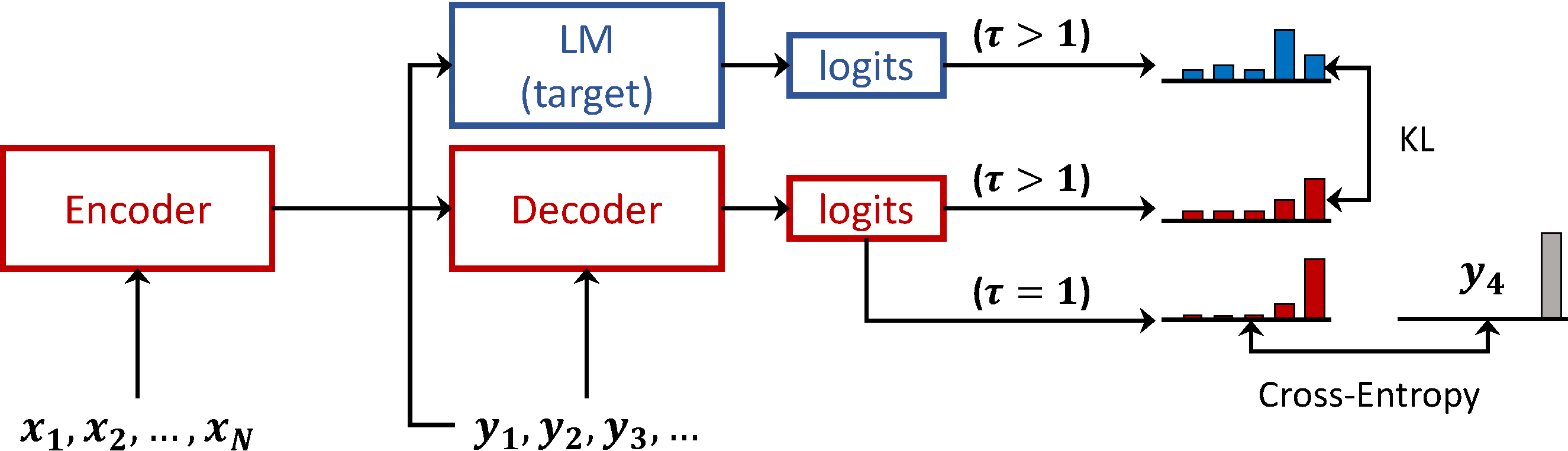

BERT set many state-of-the-art results over varied NLU benchmarks by pre-training over two tasks: masked language modelling (MLM) and next sentence prediction (NSP), the latter of which has been highly criticized. In this paper, we 1) clarify NSP's effect on BERT pre-training, 2) explore fourteen possible auxiliary pre-training tasks, of which seven are novel to modern language models, and 3) investigate different ways to include multiple tasks into pre-training. We show that NSP is detrimental to training due to its context splitting and shallow semantic signal. We also identify six auxiliary pre-training tasks (sentence ordering, adjacent sentence prediction, TF prediction, TF-IDF prediction, a FastSent variant, and a Quick Thoughts variant) that outperform a pure MLM baseline. Finally, we demonstrate that using multiple tasks in a multi-task pre-training framework provides better results than using any single auxiliary task. Using these methods, we outperform BERT-Base on the GLUE benchmark using fewer than a quarter of the training tokens.
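
As a rough illustration of the multi-task idea mentioned in the abstract, the sketch below combines an MLM loss with several auxiliary task losses through a weighted sum. The abstract does not say how the losses are combined, so the weighted sum, the function name `combine_losses`, the task keys, and all numeric values are assumptions made only for this example; they are not the authors' method.

```python
from typing import Dict


def combine_losses(
    mlm_loss: float,
    aux_losses: Dict[str, float],
    aux_weights: Dict[str, float],
) -> float:
    """Return mlm_loss plus a weighted sum of auxiliary task losses.

    Hypothetical scheme: a plain weighted sum is one simple option among
    several possible ways to mix tasks during pre-training.
    """
    total = mlm_loss
    for task, loss in aux_losses.items():
        # Tasks without an explicit weight default to a weight of 1.0.
        total += aux_weights.get(task, 1.0) * loss
    return total


# Illustrative values only (not results from the paper).
example_total = combine_losses(
    mlm_loss=2.0,
    aux_losses={"sentence_ordering": 0.5, "tfidf_prediction": 1.0},
    aux_weights={"sentence_ordering": 1.0, "tfidf_prediction": 0.5},
)
print(example_total)
```

In a real training loop the per-task losses would be tensors produced by task-specific heads on a shared encoder; plain floats are used here only to keep the sketch self-contained.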

Connected Papers in EMNLP 2020

Similar Papers

On the importance of pre-training data volume for compact language models

Vincent Micheli, Martin d'Hoffschmidt, François Fleuret

Cross-Thought for Sentence Encoder Pre-training

Shuohang Wang, Yuwei Fang, Siqi Sun, Zhe Gan, Yu Cheng, Jingjing Liu, Jing Jiang

Language Model Prior for Low-Resource Neural Machine Translation

Christos Baziotis, Barry Haddow, Alexandra Birch

An Empirical Investigation Towards Efficient Multi-Domain Language Model Pre-training

Kristjan Arumae, Qing Sun, Parminder Bhatia