An Analysis of Natural Language Inference Benchmarks through the Lens of Negation

Md Mosharaf Hossain, Venelin Kovatchev, Pranoy Dutta, Tiffany Kao, Elizabeth Wei, Eduardo Blanco

Semantics: Sentence-level Semantics, Textual Inference and Other Areas (Long Paper)

Abstract:

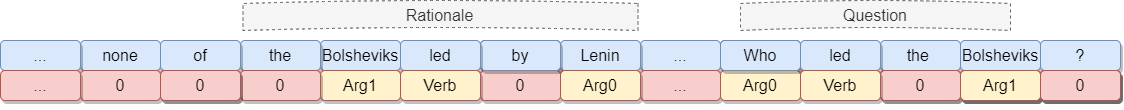

Negation is underrepresented in existing natural language inference benchmarks. Moreover, the few negations that do appear in these benchmarks can often be ignored without changing the correct inference judgment. In this paper, we present a new benchmark for natural language inference in which negation plays a critical role. We also show that state-of-the-art transformers struggle to make inference judgments on the new pairs.

Connected Papers in EMNLP 2020

Similar Papers

Multi-Step Inference for Reasoning Over Paragraphs

Jiangming Liu, Matt Gardner, Shay B. Cohen, Mirella Lapata

BLiMP: The Benchmark of Linguistic Minimal Pairs for English

Alex Warstadt, Alicia Parrish, Haokun Liu, Anhad Mohananey, Wei Peng, Sheng-Fu Wang, Samuel Bowman

Compositional and Lexical Semantics in RoBERTa, BERT and DistilBERT: A Case Study on CoQA

Ieva Staliūnaitė, Ignacio Iacobacci

Learning Explainable Linguistic Expressions with Neural Inductive Logic Programming for Sentence Classification

Prithviraj Sen, Marina Danilevsky, Yunyao Li, Siddhartha Brahma, Matthias Boehm, Laura Chiticariu, Rajasekar Krishnamurthy