Multi-turn Response Selection using Dialogue Dependency Relations

Qi Jia, Yizhu Liu, Siyu Ren, Kenny Zhu, Haifeng Tang

Dialog and Interactive Systems Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

Multi-turn response selection is a task designed for developing dialogue agents. The performance on this task has a remarkable improvement with pre-trained language models. However, these models simply concatenate the turns in dialogue history as the input and largely ignore the dependencies between the turns. In this paper, we propose a dialogue extraction algorithm to transform a dialogue history into threads based on their dependency relations. Each thread can be regarded as a self-contained sub-dialogue. We also propose Thread-Encoder model to encode threads and candidates into compact representations by pre-trained Transformers and finally get the matching score through an attention layer. The experiments show that dependency relations are helpful for dialogue context understanding, and our model outperforms the state-of-the-art baselines on both DSTC7 and DSTC8*, with competitive results on UbuntuV2.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

TOD-BERT: Pre-trained Natural Language Understanding for Task-Oriented Dialogue

Chien-Sheng Wu, Steven C.H. Hoi, Richard Socher, Caiming Xiong,

Semantic Role Labeling Guided Multi-turn Dialogue ReWriter

Kun Xu, Haochen Tan, Linfeng Song, Han Wu, Haisong Zhang, Linqi Song, Dong Yu,

Cross Copy Network for Dialogue Generation

Changzhen Ji, Xin Zhou, Yating Zhang, Xiaozhong Liu, Changlong Sun, Conghui Zhu, Tiejun Zhao,

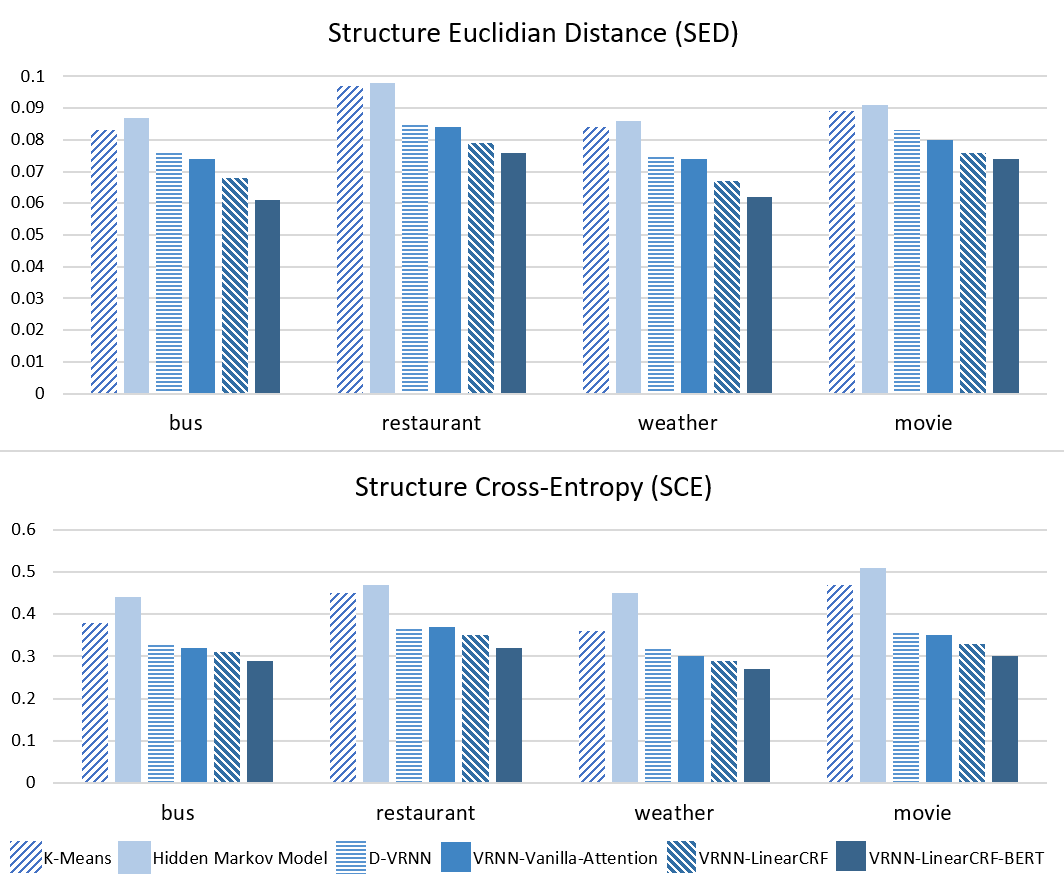

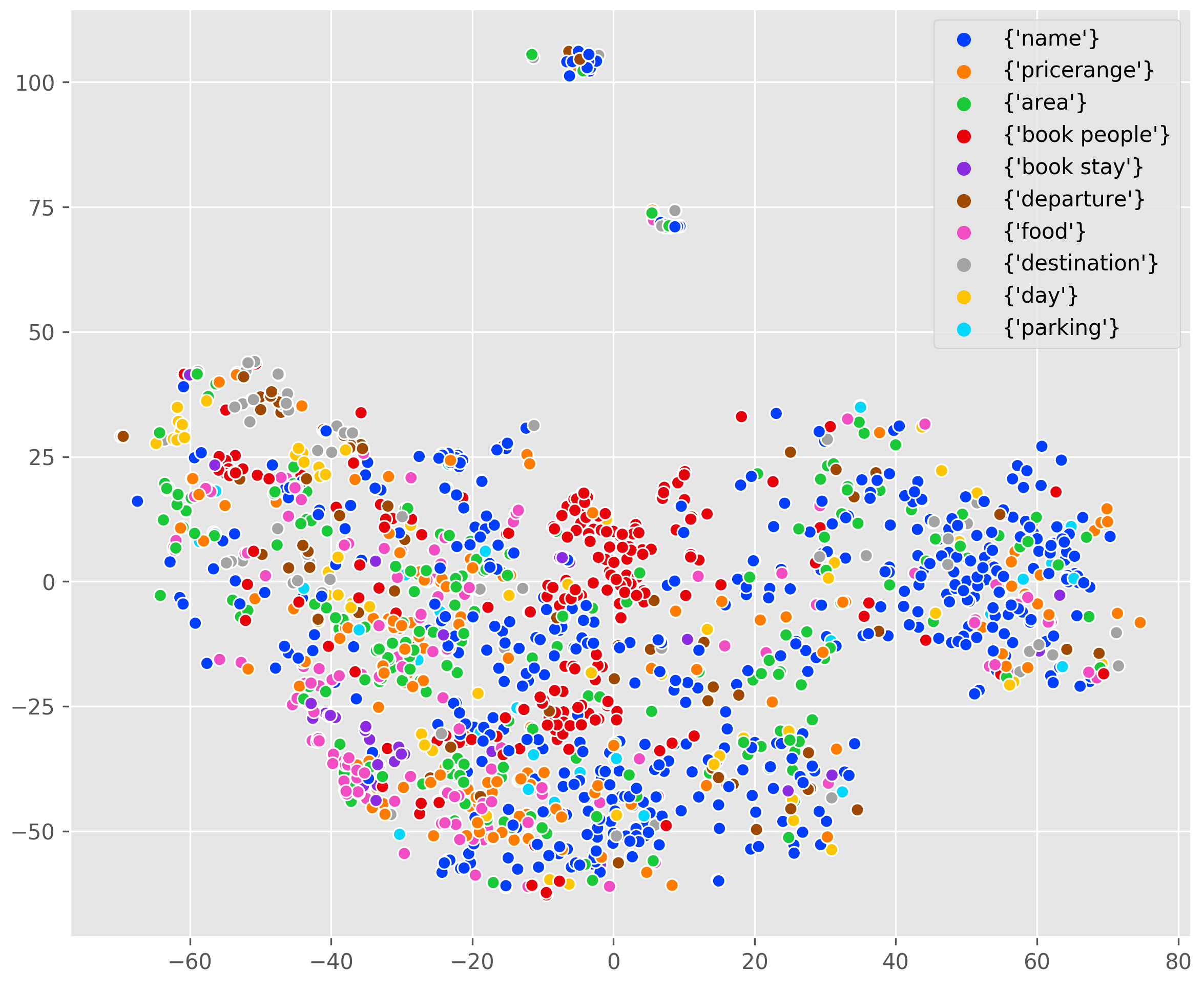

Structured Attention for Unsupervised Dialogue Structure Induction

Liang Qiu, Yizhou Zhao, Weiyan Shi, Yuan Liang, Feng Shi, Tao Yuan, Zhou Yu, Song-Chun Zhu,