ISAAQ - Mastering Textbook Questions with Pre-trained Transformers and Bottom-Up and Top-Down Attention

Jose Manuel Gomez-Perez, Raúl Ortega

Question Answering Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

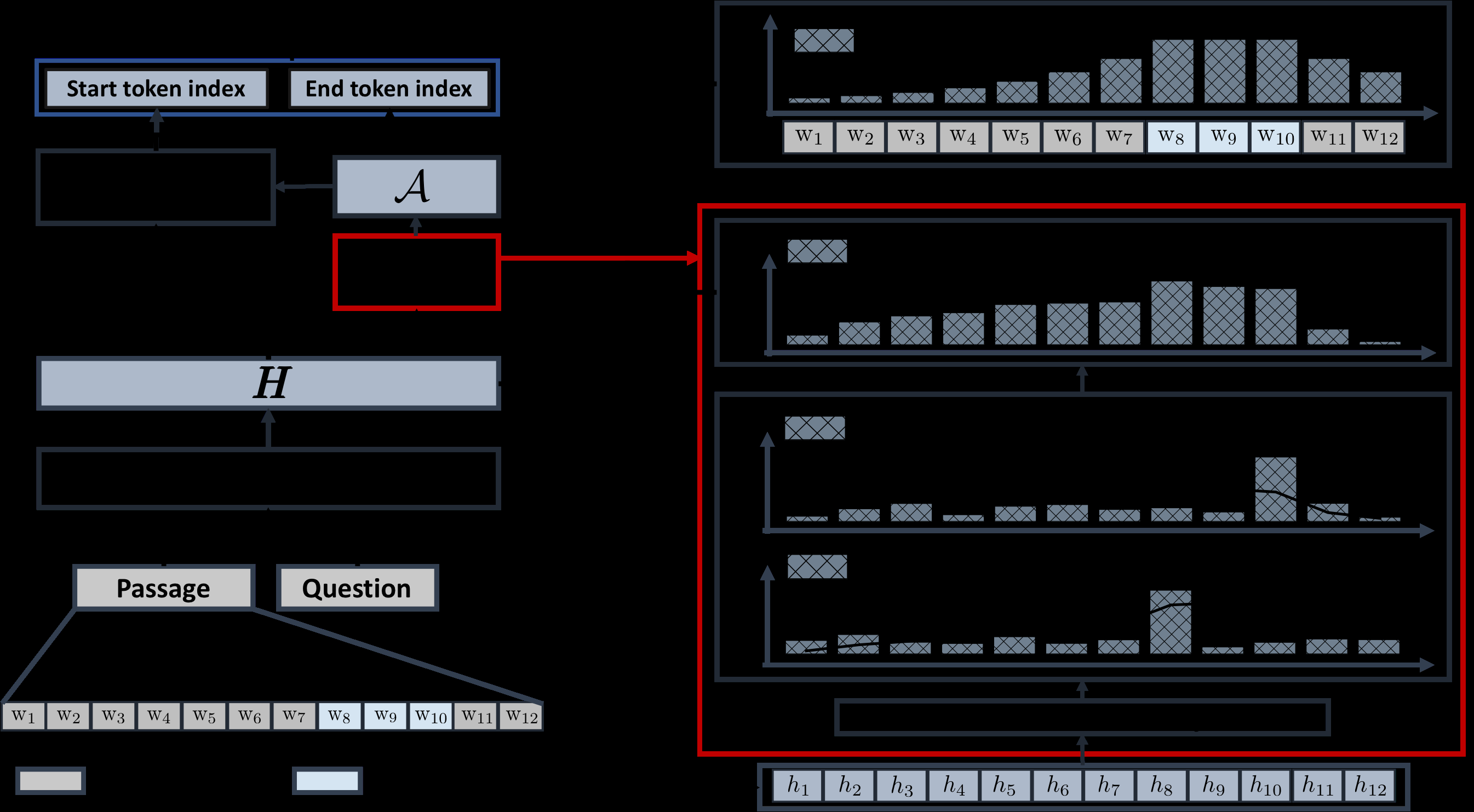

Textbook Question Answering is a complex task in the intersection of Machine Comprehension and Visual Question Answering that requires reasoning with multimodal information from text and diagrams. For the first time, this paper taps on the potential of transformer language models and bottom-up and top-down attention to tackle the language and visual understanding challenges this task entails. Rather than training a language-visual transformer from scratch we rely on pre-trained transformers, fine-tuning and ensembling. We add bottom-up and top-down attention to identify regions of interest corresponding to diagram constituents and their relationships, improving the selection of relevant visual information for each question and answer options. Our system ISAAQ reports unprecedented success in all TQA question types, with accuracies of 81.36%, 71.11% and 55.12% on true/false, text-only and diagram multiple choice questions. ISAAQ also demonstrates its broad applicability, obtaining state-of-the-art results in other demanding datasets.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Unsupervised Question Decomposition for Question Answering

Ethan Perez, Patrick Lewis, Wen-tau Yih, Kyunghyun Cho, Douwe Kiela,

A Simple and Effective Model for Answering Multi-span Questions

Elad Segal, Avia Efrat, Mor Shoham, Amir Globerson, Jonathan Berant,

oLMpics - On what Language Model Pre-training Captures

Alon Talmor, Yanai Elazar, Yoav Goldberg, Jonathan Berant,

Context-Aware Answer Extraction in Question Answering

Yeon Seonwoo, Ji-Hoon Kim, Jung-Woo Ha, Alice Oh,