oLMpics - On what Language Model Pre-training Captures

Alon Talmor, Yanai Elazar, Yoav Goldberg, Jonathan Berant

Question Answering Tacl Paper

You can open the pre-recorded video in a separate window.

Abstract:

Recent success of pre-trained language models (LMs) has spurred widespread interest in the language capabilities that they possess. However, efforts to understand whether LM representations are useful for symbolic reasoning tasks have been limited and scattered. In this work, we propose eight reasoning tasks, which conceptually require operations such as comparison, conjunction, and composition. A fundamental challenge is to understand whether the performance of a LM on a task should be attributed to the pre-trained representations or to the process of fine-tuning on the task data. To address this, we propose an evaluation protocol that includes both zero-shot evaluation (no fine-tuning), as well as comparing the learning curve of a fine-tuned LM to the learning curve of multiple controls, which paints a rich picture of the LM capabilities. Our main findings are that: (a) different LMs exhibit qualitatively different reasoning abilities, e.g., RoBERTa succeeds in reasoning tasks where BERT fails completely; (b) LMs do not reason in an abstract manner and are context-dependent, e.g., while RoBERTa can compare ages, it can do so only when the ages are in the typical range of human ages; (c) On half of our reasoning tasks all models fail completely. Our findings and infrastructure can help future work on designing new datasets, models and objective functions for pre-training.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Grounded Compositional Outputs for Adaptive Language Modeling

Nikolaos Pappas, Phoebe Mulcaire, Noah A. Smith,

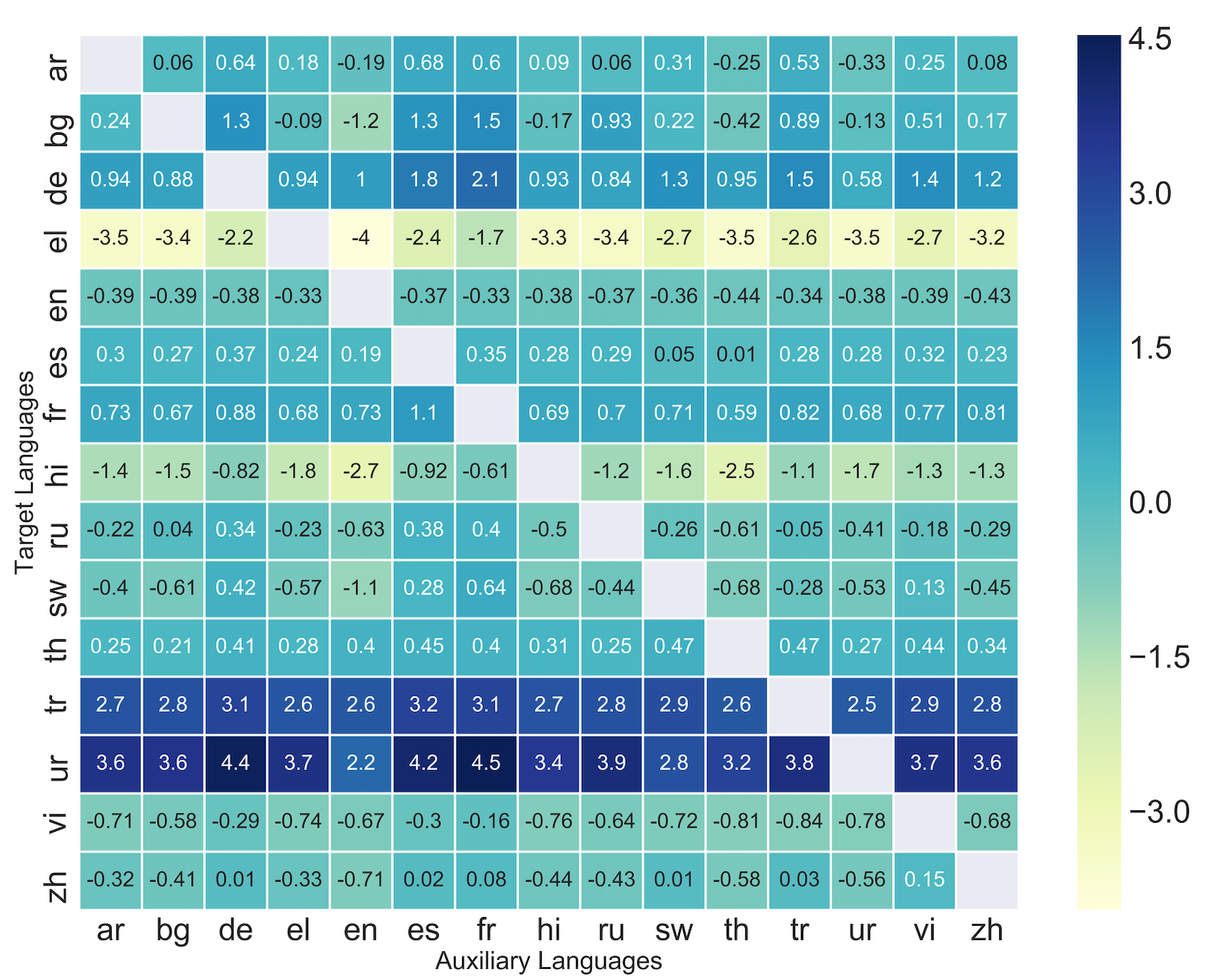

Zero-Shot Cross-Lingual Transfer with Meta Learning

Farhad Nooralahzadeh, Giannis Bekoulis, Johannes Bjerva, Isabelle Augenstein,

Syntactic Structure Distillation Pretraining for Bidirectional Encoders

Adhiguna Kuncoro, Lingpeng Kong, Daniel Fried, Dani Yogatama, Laura Rimell, Chris Dyer, Phil Blunsom,

Efficient Meta Lifelong-Learning with Limited Memory

Zirui Wang, Sanket Vaibhav Mehta, Barnabas Poczos, Jaime Carbonell,