Adversarial Semantic Collisions

Congzheng Song, Alexander Rush, Vitaly Shmatikov

Interpretability and Analysis of Models for NLP (Long Paper)

Abstract:

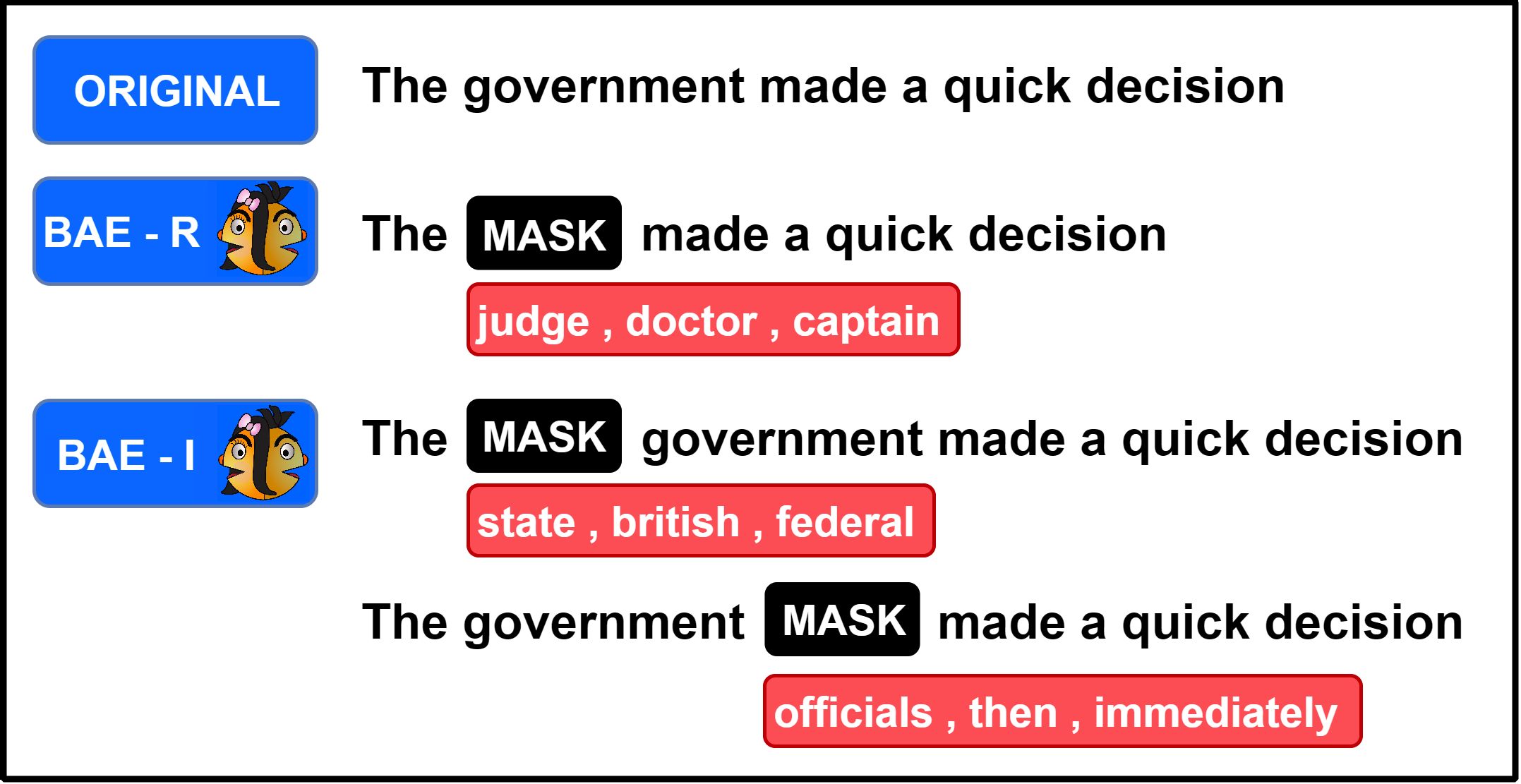

We study *semantic collisions*: texts that are semantically unrelated but judged as similar by NLP models. We develop gradient-based approaches for generating semantic collisions and demonstrate that state-of-the-art models for many tasks that rely on analyzing the meaning and similarity of texts (including paraphrase identification, document retrieval, response suggestion, and extractive summarization) are vulnerable to semantic collisions. For example, given a target query, inserting a crafted collision into an irrelevant document can shift its retrieval rank from 1000 to the top 3. We show how to generate semantic collisions that evade perplexity-based filtering and discuss other potential mitigations. Our code is available at https://github.com/csong27/collision-bert.
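The abstract does not spell out the gradient-based procedure, so the sketch below is only a toy illustration of the general idea and not the authors' method (their repository is the reference for that). It assumes a hypothetical similarity model that scores a text by the dot product between a query vector and the mean embedding of its tokens. Each token position is relaxed to a softmax distribution over a made-up vocabulary, gradient ascent is run on the logits, and the result is discretized by taking the highest-logit token per position. All names (`make_toy_embeddings`, `soft_score`, `generate_collision`) and all hyperparameters are assumptions introduced for illustration.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def make_toy_embeddings(vocab_size, dim, seed=0):
    """Random Gaussian token embeddings (a stand-in for a real model's table)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]


def soft_score(logits, embeddings, query_vec):
    """Toy similarity of the relaxed text: query . mean expected embedding."""
    token_scores = [dot(query_vec, e) for e in embeddings]
    return sum(dot(softmax(row), token_scores) for row in logits) / len(logits)


def generate_collision(embeddings, query_vec, length=5, steps=200, lr=0.5):
    """Gradient ascent on per-position logits, then argmax discretization."""
    vocab_size = len(embeddings)
    token_scores = [dot(query_vec, e) for e in embeddings]
    logits = [[0.0] * vocab_size for _ in range(length)]  # uniform start
    for _ in range(steps):
        for row in logits:
            probs = softmax(row)
            expected = dot(probs, token_scores)
            for v in range(vocab_size):
                # Analytic gradient of the toy score w.r.t. this logit.
                grad = probs[v] * (token_scores[v] - expected) / length
                row[v] += lr * grad
    tokens = [max(range(vocab_size), key=lambda v: row[v]) for row in logits]
    return tokens, logits


# Usage: the "query" is the mean embedding of two arbitrary toy tokens.
embeddings = make_toy_embeddings(vocab_size=20, dim=8)
query_vec = [(a + b) / 2 for a, b in zip(embeddings[3], embeddings[7])]
collision_tokens, final_logits = generate_collision(embeddings, query_vec)
```

In this toy setting the optimum is trivial: every position converges to the single token whose embedding best matches the query. With a real model the score is not linear in the tokens, and constraints such as fluency (for example, to pass a perplexity filter) would have to be added to the objective.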

Similar Papers

Adversarial Semantic Decoupling for Recognizing Open-Vocabulary Slots

Yuanmeng Yan, Keqing He, Hong Xu, Sihong Liu, Fanyu Meng, Min Hu, Weiran Xu

Small but Mighty: New Benchmarks for Split and Rephrase

Li Zhang, Huaiyu Zhu, Siddhartha Brahma, Yunyao Li