Towards Better Context-aware Lexical Semantics: Adjusting Contextualized Representations through Static Anchors

Qianchu Liu, Diana McCarthy, Anna Korhonen

Semantics: Lexical Semantics Short Paper

Abstract:

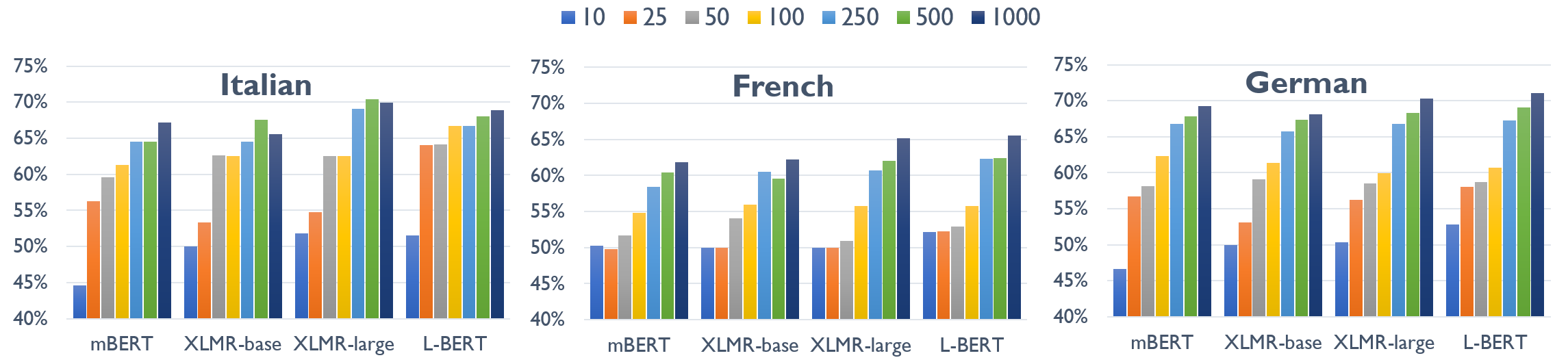

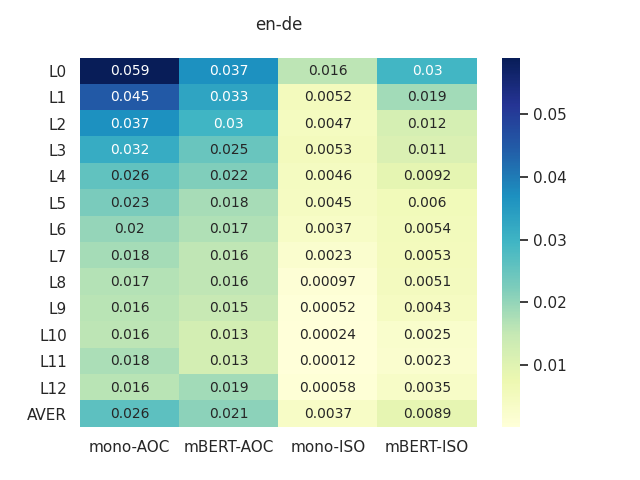

One of the most powerful features of contextualized models is their dynamic embeddings for words in context, which lead to state-of-the-art representations for context-aware lexical semantics. In this paper, we present a post-processing technique that enhances these representations by learning a transformation through static anchors. Our method requires only another pre-trained model and no labeled data. We show consistent improvements on a range of benchmark tasks that test contextual variations of meaning, both across different usages of a word and across different words as they are used in context. We demonstrate that while the original contextual representations can be improved by an embedding space from either a contextualized or a static model, the static embeddings, which have lower computational requirements, provide the largest gains.
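The abstract does not say how the transformation is learned, so the following is only a minimal sketch of one plausible reading: an orthogonal (Procrustes) map fitted between per-word anchors in the contextualized space and the matching static embeddings. The Procrustes choice, the function names `learn_orthogonal_map` and `adjust`, the assumption that both spaces share one dimensionality, and the synthetic data are all illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def learn_orthogonal_map(context_anchors, static_anchors):
    """Fit an orthogonal W minimizing ||context_anchors @ W - static_anchors||_F.

    Assumption: row i of both matrices describes the same word type, e.g. the
    average contextualized vector of a word and its static embedding.
    """
    # Closed-form orthogonal Procrustes solution via SVD of the cross-covariance.
    u, _, vt = np.linalg.svd(context_anchors.T @ static_anchors)
    return u @ vt


def adjust(context_vectors, w):
    """Apply the learned map to any contextualized vectors (one per row)."""
    return context_vectors @ w


# Synthetic stand-ins for real embeddings (hypothetical data, no labels used).
rng = np.random.default_rng(0)
n_words, dim = 200, 16
context_anchors = rng.normal(size=(n_words, dim))
true_rotation, _ = np.linalg.qr(rng.normal(size=(dim, dim)))
static_anchors = context_anchors @ true_rotation

w = learn_orthogonal_map(context_anchors, static_anchors)
adjusted = adjust(context_anchors, w)
```

In this toy setup the static space is an exact rotation of the contextualized one, so the fitted map recovers that rotation; with real embeddings the fit would only be approximate, and the two models would first need compatible dimensionalities.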

Connected Papers in EMNLP 2020

Similar Papers

Probing Pretrained Language Models for Lexical Semantics

Ivan Vulić, Edoardo Maria Ponti, Robert Litschko, Goran Glavaš, Anna Korhonen

VCDM: Leveraging Variational Bi-encoding and Deep Contextualized Word Representations for Improved Definition Modeling

Machel Reid, Edison Marrese-Taylor, Yutaka Matsuo

A Synset Relation-enhanced Framework with a Try-again Mechanism for Word Sense Disambiguation

Ming Wang, Yinglin Wang

XL-WiC: A Multilingual Benchmark for Evaluating Semantic Contextualization

Alessandro Raganato, Tommaso Pasini, Jose Camacho-Collados, Mohammad Taher Pilehvar