Deconstructing word embedding algorithms

Kian Kenyon-Dean, Edward Newell, Jackie Chi Kit Cheung

Semantics: Lexical Semantics Short Paper


Abstract:

Word embeddings are reliable feature representations of words used to obtain high quality results for various NLP applications. Uncontextualized word embeddings are used in many NLP tasks today, especially in resource-limited settings where high memory capacity and GPUs are not available. Given the historical success of word embeddings in NLP, we propose a retrospective on some of the most well-known word embedding algorithms. In this work, we deconstruct Word2vec, GloVe, and others, into a common form, unveiling some of the common conditions that seem to be required for making performant word embeddings. We believe that the theoretical findings in this paper can provide a basis for more informed development of future models.


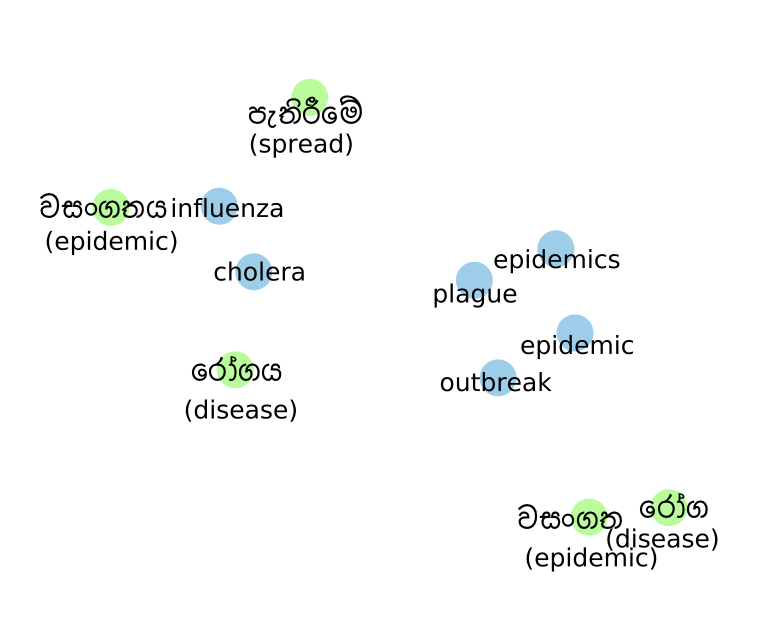

Connected Papers in EMNLP 2020

Similar Papers

Improving Low Compute Language Modeling with In-Domain Embedding Initialisation

Charles Welch, Rada Mihalcea, Jonathan K. Kummerfeld

Interactive Refinement of Cross-Lingual Word Embeddings

Michelle Yuan, Mozhi Zhang, Benjamin Van Durme, Leah Findlater, Jordan Boyd-Graber

Compositional Demographic Word Embeddings

Charles Welch, Jonathan K. Kummerfeld, Verónica Pérez-Rosas, Rada Mihalcea

With More Contexts Comes Better Performance: Contextualized Sense Embeddings for All-Round Word Sense Disambiguation

Bianca Scarlini, Tommaso Pasini, Roberto Navigli