Interactive Refinement of Cross-Lingual Word Embeddings

Michelle Yuan, Mozhi Zhang, Benjamin Van Durme, Leah Findlater, Jordan Boyd-Graber

Machine Translation and Multilinguality (Long Paper)


Abstract:

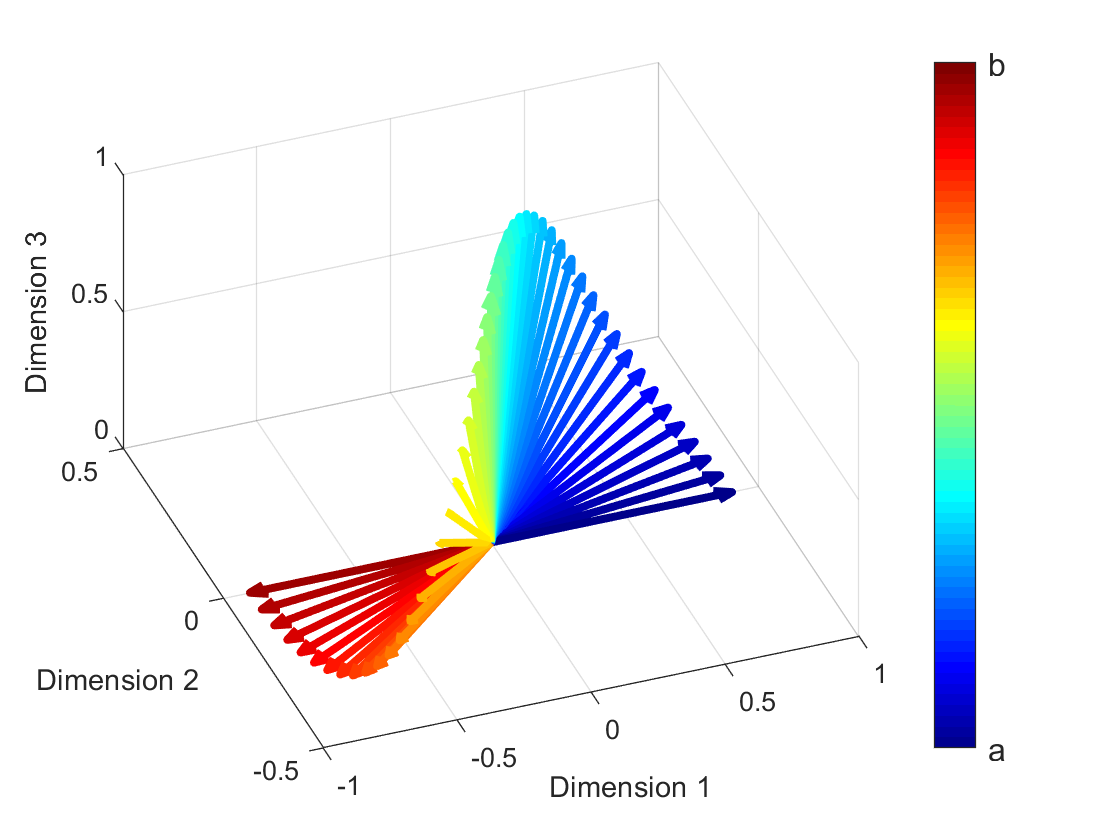

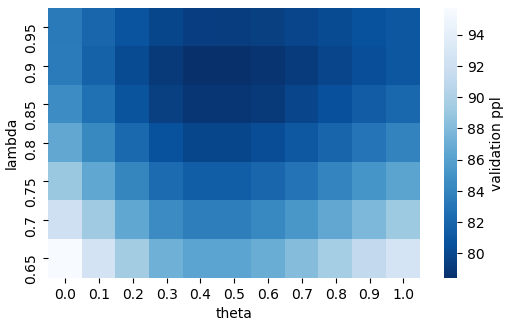

Cross-lingual word embeddings transfer knowledge between languages: models trained on high-resource languages can predict in low-resource languages. We introduce CLIME, an interactive system to quickly refine cross-lingual word embeddings for a given classification problem. First, CLIME ranks words by their salience to the downstream task. Then, users mark similarity between keywords and their nearest neighbors in the embedding space. Finally, CLIME updates the embeddings using the annotations. We evaluate CLIME on identifying health-related text in four low-resource languages: Ilocano, Sinhalese, Tigrinya, and Uyghur. Embeddings refined by CLIME capture more nuanced word semantics and have higher test accuracy than the original embeddings. CLIME often improves accuracy faster than an active learning baseline and can be easily combined with active learning to improve results.
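The three steps in the abstract (rank keywords, collect similarity annotations on nearest neighbors, update the embeddings) can be illustrated with a minimal Python sketch. It is not the CLIME implementation. The function names, the toy vocabulary, the precomputed salience scores, and the update rule (a few normalized gradient steps that raise the dot product of pairs marked similar, lower it for pairs marked dissimilar, and penalize drift from the original vectors) are all assumptions made for illustration; the abstract does not specify how salience is computed or how the embeddings are updated.

```python
import numpy as np

def rank_keywords(vocab, salience, top_k):
    """Step 1 (assumed form): return the top_k words by a precomputed salience score."""
    order = np.argsort(-np.asarray(salience, dtype=float))[:top_k]
    return [vocab[i] for i in order]

def nearest_neighbors(emb, idx, k):
    """Step 2 helper: indices of the k nearest neighbors of word idx by cosine similarity."""
    unit = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    sims = unit @ unit[idx]
    sims[idx] = -np.inf  # never return the query word itself
    return np.argsort(-sims)[:k]

def cosine(emb, i, j):
    """Cosine similarity between rows i and j."""
    return float(emb[i] @ emb[j] / (np.linalg.norm(emb[i]) * np.linalg.norm(emb[j])))

def refine(emb, positive, negative, lr=0.1, reg=0.1, steps=10):
    """Step 3 (hypothetical update rule, not the paper's objective).

    positive / negative are lists of (i, j) index pairs that a user marked
    as similar / dissimilar. Rows are re-normalized after every step.
    """
    original = np.array(emb, dtype=float)
    emb = original.copy()
    for _ in range(steps):
        # Regularizer: gradient of reg * ||emb - original||^2
        grad = 2.0 * reg * (emb - original)
        for i, j in positive:  # raise the dot product of similar pairs
            grad[i] -= emb[j]
            grad[j] -= emb[i]
        for i, j in negative:  # lower the dot product of dissimilar pairs
            grad[i] += emb[j]
            grad[j] += emb[i]
        emb = emb - lr * grad
        emb /= np.linalg.norm(emb, axis=1, keepdims=True)
    return emb

# Toy data (illustrative only): four words with 2-d unit vectors.
vocab = ["fever", "cough", "market", "clinic"]
emb = np.array([[1.0, 0.0], [0.8, 0.6], [0.6, 0.8], [0.0, 1.0]])
salience = [0.9, 0.7, 0.1, 0.4]

keywords = rank_keywords(vocab, salience, top_k=2)
neighbors = nearest_neighbors(emb, vocab.index("fever"), k=2)
# Pretend the user marks "cough" as similar to "fever" and "market" as dissimilar.
refined = refine(emb, positive=[(0, 1)], negative=[(0, 2)], steps=1)
```

In this toy setup, one refinement step moves "fever" and "cough" closer in cosine similarity and pushes "fever" and "market" apart, which is the qualitative behavior the abstract describes for annotated pairs.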


Connected Papers in EMNLP 2020

Similar Papers

Methods for Numeracy-Preserving Word Embeddings

Dhanasekar Sundararaman, Shijing Si, Vivek Subramanian, Guoyin Wang, Devamanyu Hazarika, Lawrence Carin

Grounded Compositional Outputs for Adaptive Language Modeling

Nikolaos Pappas, Phoebe Mulcaire, Noah A. Smith

Bridging Linguistic Typology and Multilingual Machine Translation with Multi-View Language Representations

Arturo Oncevay, Barry Haddow, Alexandra Birch