Generating Radiology Reports via Memory-driven Transformer

Zhihong Chen, Yan Song, Tsung-Hui Chang, Xiang Wan

NLP Applications Long Paper

Abstract:

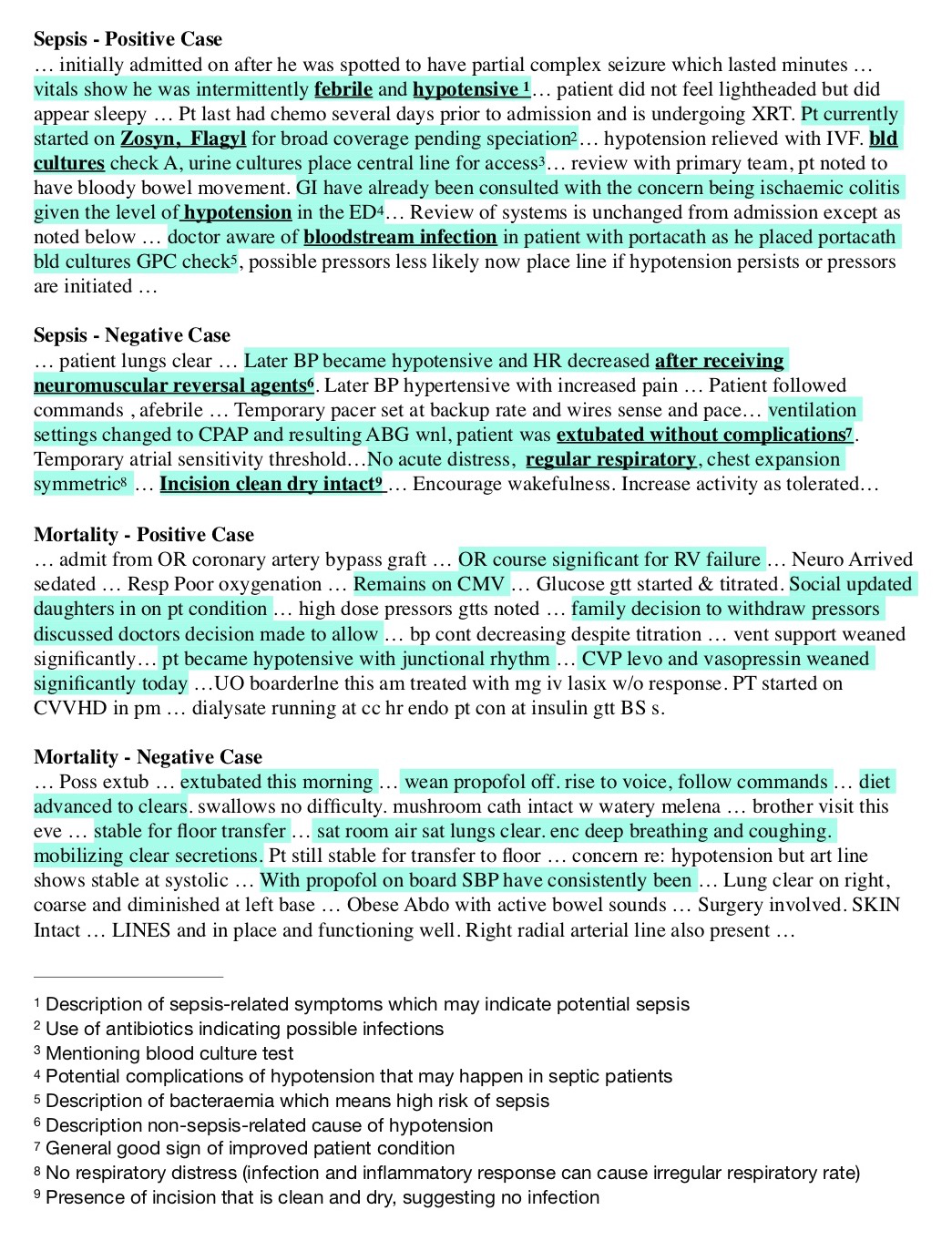

Medical imaging is frequently used in clinical practice and trials for diagnosis and treatment. Writing imaging reports is time-consuming and can be error-prone for inexperienced radiologists. Therefore, automatically generating radiology reports is highly desired to lighten the workload of radiologists and accordingly promote clinical automation, making it an essential task in applying artificial intelligence to the medical domain. In this paper, we propose to generate radiology reports with a memory-driven Transformer, where a relational memory is designed to record key information of the generation process and a memory-driven conditional layer normalization is applied to incorporate the memory into the decoder of the Transformer. Experimental results on two prevailing radiology report datasets, IU X-Ray and MIMIC-CXR, show that our proposed approach outperforms previous models with respect to both language generation metrics and clinical evaluations. In particular, to the best of our knowledge, this is the first work to report generation results on MIMIC-CXR. Further analyses also demonstrate that our approach is able to generate long reports with necessary medical terms as well as meaningful image-text attention mappings.

Connected Papers in EMNLP2020

Similar Papers

Combining Automatic Labelers and Expert Annotations for Accurate Radiology Report Labeling Using BERT

Akshay Smit, Saahil Jain, Pranav Rajpurkar, Anuj Pareek, Andrew Ng, Matthew Lungren

New Protocols and Negative Results for Textual Entailment Data Collection

Samuel R. Bowman, Jennimaria Palomaki, Livio Baldini Soares, Emily Pitler

Incremental Processing in the Age of Non-Incremental Encoders: An Empirical Assessment of Bidirectional Models for Incremental NLU

Brielen Madureira, David Schlangen