Combining Automatic Labelers and Expert Annotations for Accurate Radiology Report Labeling Using BERT

Akshay Smit, Saahil Jain, Pranav Rajpurkar, Anuj Pareek, Andrew Ng, Matthew Lungren

NLP Applications Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

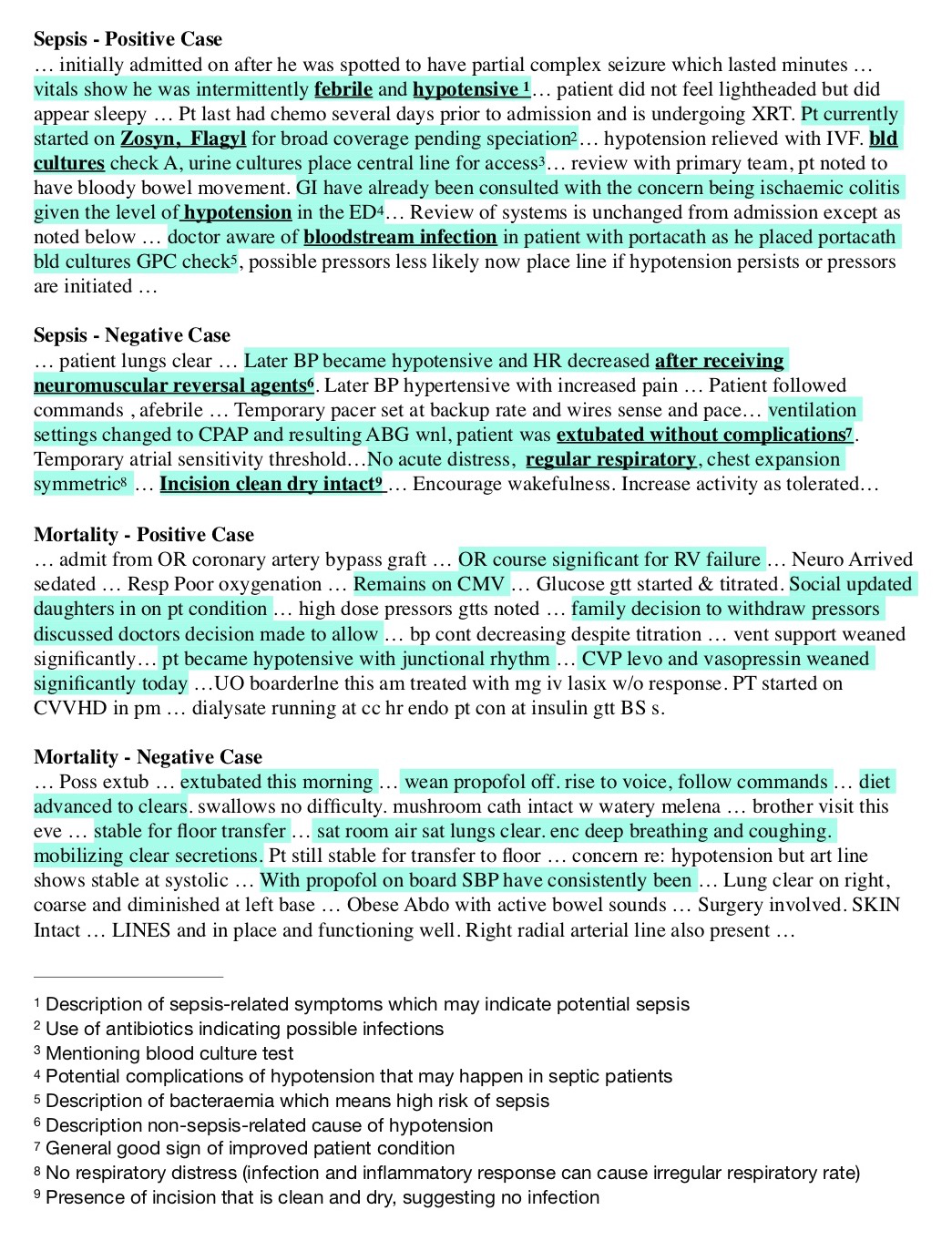

The extraction of labels from radiology text reports enables large-scale training of medical imaging models. Existing approaches to report labeling typically rely either on sophisticated feature engineering based on medical domain knowledge or manual annotations by experts. In this work, we introduce a BERT-based approach to medical image report labeling that exploits both the scale of available rule-based systems and the quality of expert annotations. We demonstrate superior performance of a biomedically pretrained BERT model first trained on annotations of a rule-based labeler and then finetuned on a small set of expert annotations augmented with automated backtranslation. We find that our final model, CheXbert, is able to outperform the previous best rules-based labeler with statistical significance, setting a new SOTA for report labeling on one of the largest datasets of chest x-rays.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

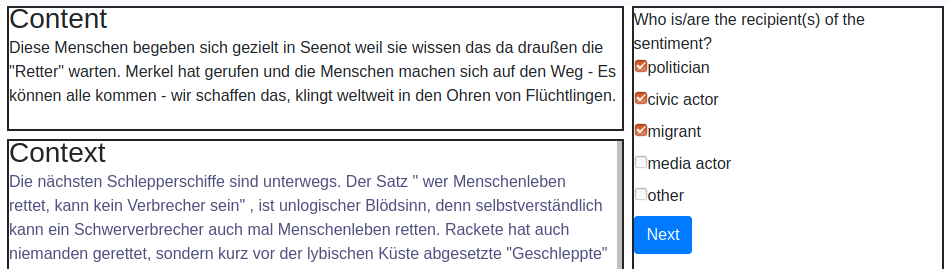

HUMAN: Hierarchical Universal Modular ANnotator

Moritz Wolf, Dana Ruiter, Ashwin Geet D'Sa, Liane Reiners, Jan Alexandersson, Dietrich Klakow,

Text Classification Using Label Names Only: A Language Model Self-Training Approach

Yu Meng, Yunyi Zhang, Jiaxin Huang, Chenyan Xiong, Heng Ji, Chao Zhang, Jiawei Han,

Learning a Cost-Effective Annotation Policy for Question Answering

Bernhard Kratzwald, Stefan Feuerriegel, Huan Sun,