Explainable Clinical Decision Support from Text

Jinyue Feng, Chantal Shaib, Frank Rudzicz

NLP Applications Long Paper

Abstract:

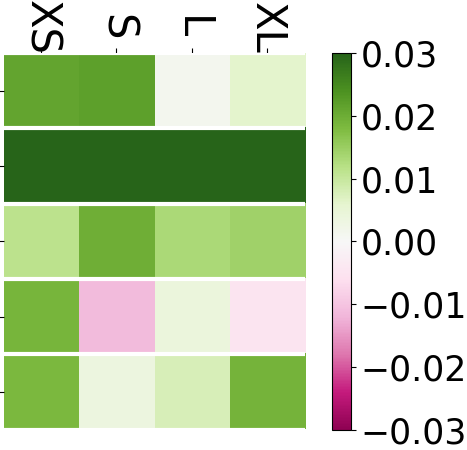

Clinical prediction models often use structured variables and provide outcomes that are not readily interpretable by clinicians. Further, free-text medical notes may contain information not immediately available in structured variables. We propose a hierarchical CNN-transformer model with explicit attention as an interpretable, multi-task clinical language model, which achieves an AUROC of 0.75 and 0.78 on sepsis and mortality prediction, respectively. We also explore the relationships between learned features from structured and unstructured variables using projection-weighted canonical correlation analysis. Finally, we outline a protocol to evaluate model usability in a clinical decision support context. From domain-expert evaluations, our model generates informative rationales that have promising real-life applications.

Connected Papers in EMNLP2020

Similar Papers

Towards Medical Machine Reading Comprehension with Structural Knowledge and Plain Text

Dongfang Li, Baotian Hu, Qingcai Chen, Weihua Peng, Anqi Wang

Infusing Disease Knowledge into BERT for Health Question Answering, Medical Inference and Disease Name Recognition

Yun He, Ziwei Zhu, Yin Zhang, Qin Chen, James Caverlee

Interpretable Multi-dataset Evaluation for Named Entity Recognition

Jinlan Fu, Pengfei Liu, Graham Neubig

An Information Bottleneck Approach for Controlling Conciseness in Rationale Extraction

Bhargavi Paranjape, Mandar Joshi, John Thickstun, Hannaneh Hajishirzi, Luke Zettlemoyer