Towards Reasonably-Sized Character-Level Transformer NMT by Finetuning Subword Systems

Jindřich Libovický, Alexander Fraser

Machine Translation and Multilinguality Short Paper

Abstract:

Applying the Transformer architecture at the character level usually requires very deep architectures that are difficult and slow to train. These problems can be partially overcome by incorporating a segmentation into tokens in the model. We show that by first training a subword model and then finetuning it on characters, we can obtain a neural machine translation model that works at the character level without requiring token segmentation. We use only the vanilla 6-layer Transformer Base architecture. Our character-level models better capture morphological phenomena and are more robust to noise, at the expense of somewhat worse overall translation quality. Our study is a significant step towards high-performance, easy-to-train character-based models that are not extremely large.
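The switch from subword input to character input described in the abstract can be illustrated with a minimal Python sketch. It only shows how the same sentence looks under the two segmentations and how much longer the character sequence is; it is not the authors' pipeline. The function names, the `▁` space marker, and the example subword split are all assumptions made for illustration.

```python
from typing import List

SPACE = "▁"  # assumed visible marker for spaces; not taken from the paper


def to_char_tokens(sentence: str) -> List[str]:
    """Split a raw sentence into one token per character, marking spaces."""
    return [SPACE if ch == " " else ch for ch in sentence]


def from_char_tokens(tokens: List[str]) -> str:
    """Invert to_char_tokens: join characters and restore spaces."""
    return "".join(" " if tok == SPACE else tok for tok in tokens)


def length_ratio(sentence: str, subword_tokens: List[str]) -> float:
    """How many times longer the character sequence is than the subword one."""
    return len(to_char_tokens(sentence)) / len(subword_tokens)


sentence = "character models"
# Hypothetical subword segmentation, written by hand for this example.
subwords = ["▁char", "acter", "▁model", "s"]

chars = to_char_tokens(sentence)
ratio = length_ratio(sentence, subwords)  # 16 characters / 4 subwords = 4.0
```

In a finetuning setup along these lines, the model would first see data segmented like `subwords` and later data segmented like `chars`. The longer character sequences are one reason character-level training is slower.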

Similar Papers

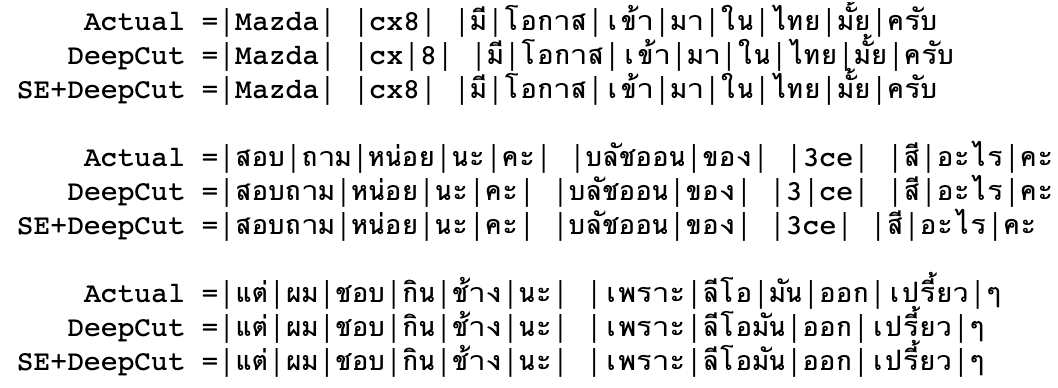

Domain Adaptation of Thai Word Segmentation Models using Stacked Ensemble

Peerat Limkonchotiwat, Wannaphong Phatthiyaphaibun, Raheem Sarwar, Ekapol Chuangsuwanich, Sarana Nutanong

On the Sparsity of Neural Machine Translation Models

Yong Wang, Longyue Wang, Victor Li, Zhaopeng Tu

Tackling the Low-resource Challenge for Canonical Segmentation

Manuel Mager, Özlem Çetinoğlu, Katharina Kann