KERMIT: Complementing Transformer Architectures with Encoders of Explicit Syntactic Interpretations

Fabio Massimo Zanzotto, Andrea Santilli, Leonardo Ranaldi, Dario Onorati, Pierfrancesco Tommasino, Francesca Fallucchi

Machine Learning for NLP Long Paper

Abstract:

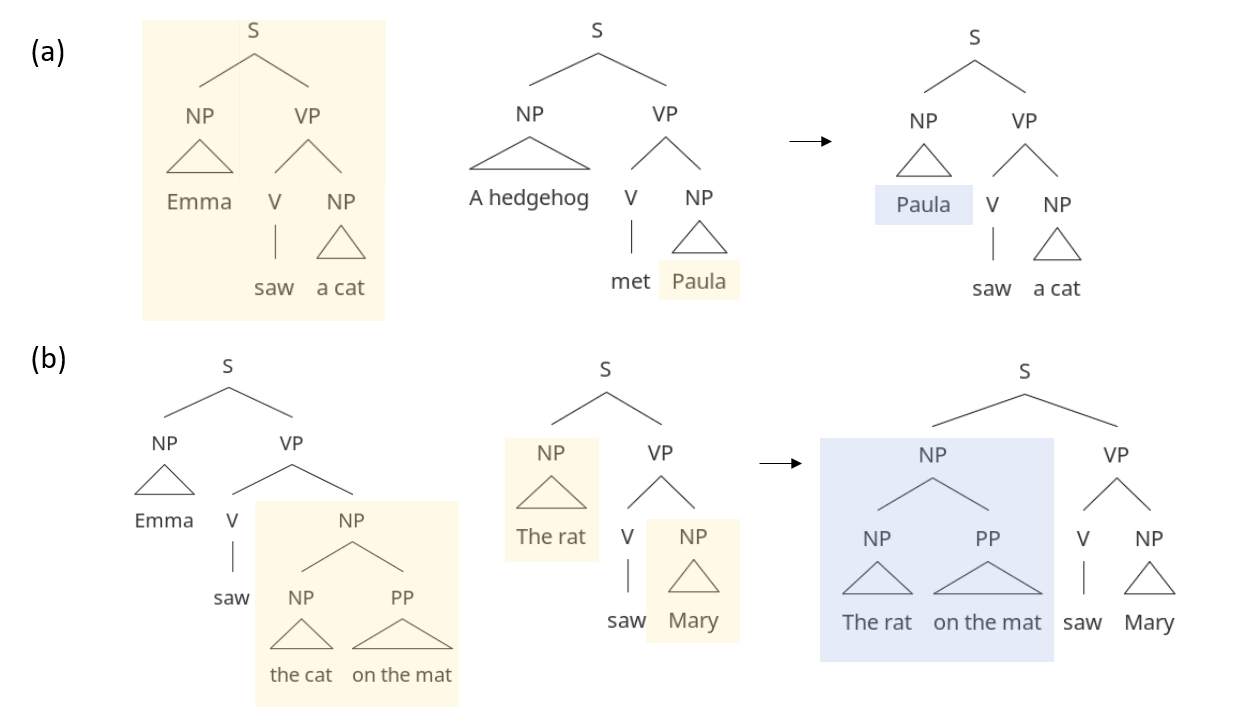

Syntactic parsers have dominated natural language understanding for decades. Yet, their syntactic interpretations are losing centrality in downstream tasks due to the success of large-scale textual representation learners. In this paper, we propose KERMIT (Kernel-inspired Encoder with Recursive Mechanism for Interpretable Trees) to embed symbolic syntactic parse trees into artificial neural networks and to visualize how syntax is used in inference. We experimented with KERMIT paired with two state-of-the-art transformer-based universal sentence encoders (BERT and XLNet) and we showed that KERMIT can indeed boost their performance by effectively embedding human-coded universal syntactic representations in neural networks.

Connected Papers in EMNLP 2020

Similar Papers

Learning Explainable Linguistic Expressions with Neural Inductive Logic Programming for Sentence Classification

Prithviraj Sen, Marina Danilevsky, Yunyao Li, Siddhartha Brahma, Matthias Boehm, Laura Chiticariu, Rajasekar Krishnamurthy

COGS: A Compositional Generalization Challenge Based on Semantic Interpretation

Najoung Kim, Tal Linzen