The Return of Lexical Dependencies: Neural Lexicalized PCFGs

Hao Zhu, Yonatan Bisk, Graham Neubig

Syntax: Tagging, Chunking, and Parsing (TACL Paper)

Abstract:

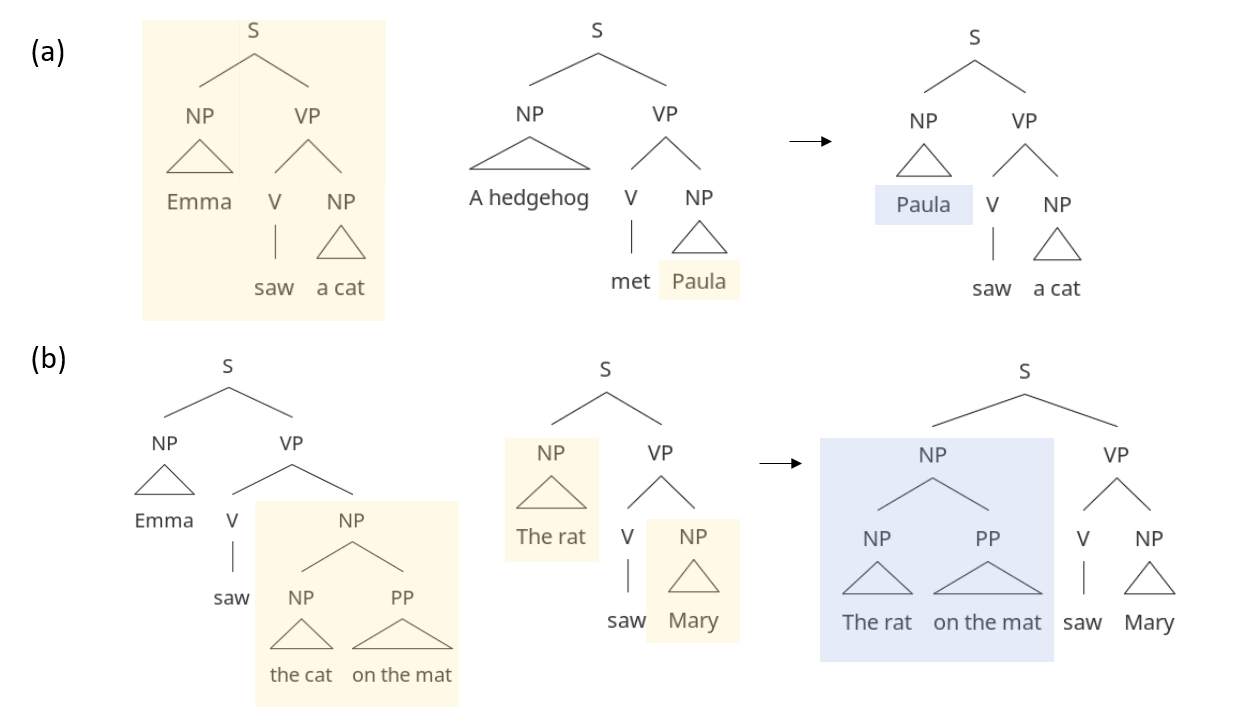

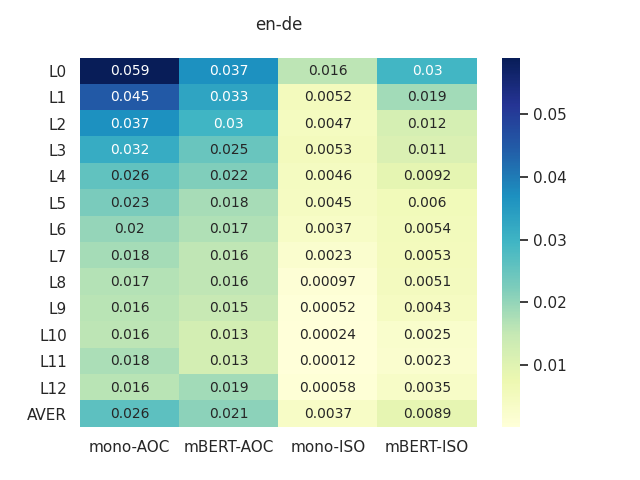

In this paper, we demonstrate that context-free grammar (CFG) based methods for grammar induction benefit from modeling lexical dependencies. This contrasts with the most popular current methods for grammar induction, which focus on discovering either constituents or dependencies. Previous approaches to marrying these two disparate syntactic formalisms (e.g., lexicalized PCFGs) have been plagued by sparsity, making them unsuitable for unsupervised grammar induction. In this work, we present novel neural models of lexicalized PCFGs that overcome these sparsity problems and effectively induce both constituents and dependencies within a single model. Experiments demonstrate that this unified framework yields stronger results on both representations than are achieved when modeling either formalism alone. Code is available at https://github.com/neulab/neural-lpcfg.

Connected Papers in EMNLP2020

Similar Papers

Probing Pretrained Language Models for Lexical Semantics

Ivan Vulić, Edoardo Maria Ponti, Robert Litschko, Goran Glavaš, Anna Korhonen

COGS: A Compositional Generalization Challenge Based on Semantic Interpretation

Najoung Kim, Tal Linzen