Towards Interpretable Reasoning over Paragraph Effects in Situation

Mucheng Ren, Xiubo Geng, Tao Qin, Heyan Huang, Daxin Jiang

Question Answering, Long Paper

Abstract:

We focus on the task of reasoning over paragraph effects in situation, which requires a model to understand the cause and effect described in a background paragraph and apply that knowledge to a novel situation. Existing work ignores the complicated reasoning process and solves the task with a one-step "black box" model. Inspired by human cognitive processes, in this paper we propose a sequential approach for this task that explicitly models each step of the reasoning process with neural network modules. In particular, five reasoning modules are designed and learned in an end-to-end manner, which leads to a more interpretable model. Experimental results on the ROPES dataset demonstrate the effectiveness and explainability of our proposed approach.

Connected Papers in EMNLP2020

Similar Papers

Multi-Step Inference for Reasoning Over Paragraphs

Jiangming Liu, Matt Gardner, Shay B. Cohen, Mirella Lapata,

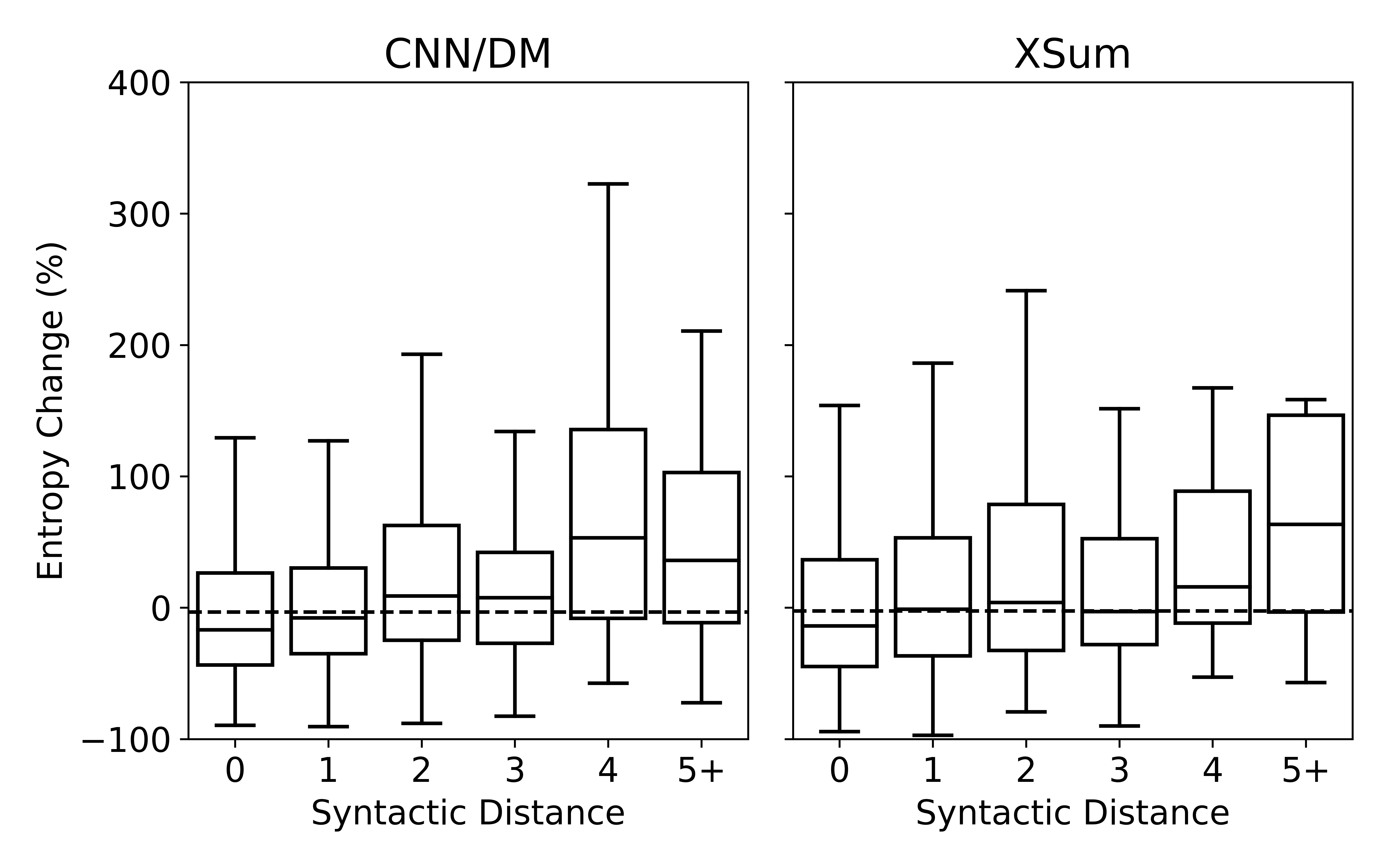

Understanding Neural Abstractive Summarization Models via Uncertainty

Jiacheng Xu, Shrey Desai, Greg Durrett,

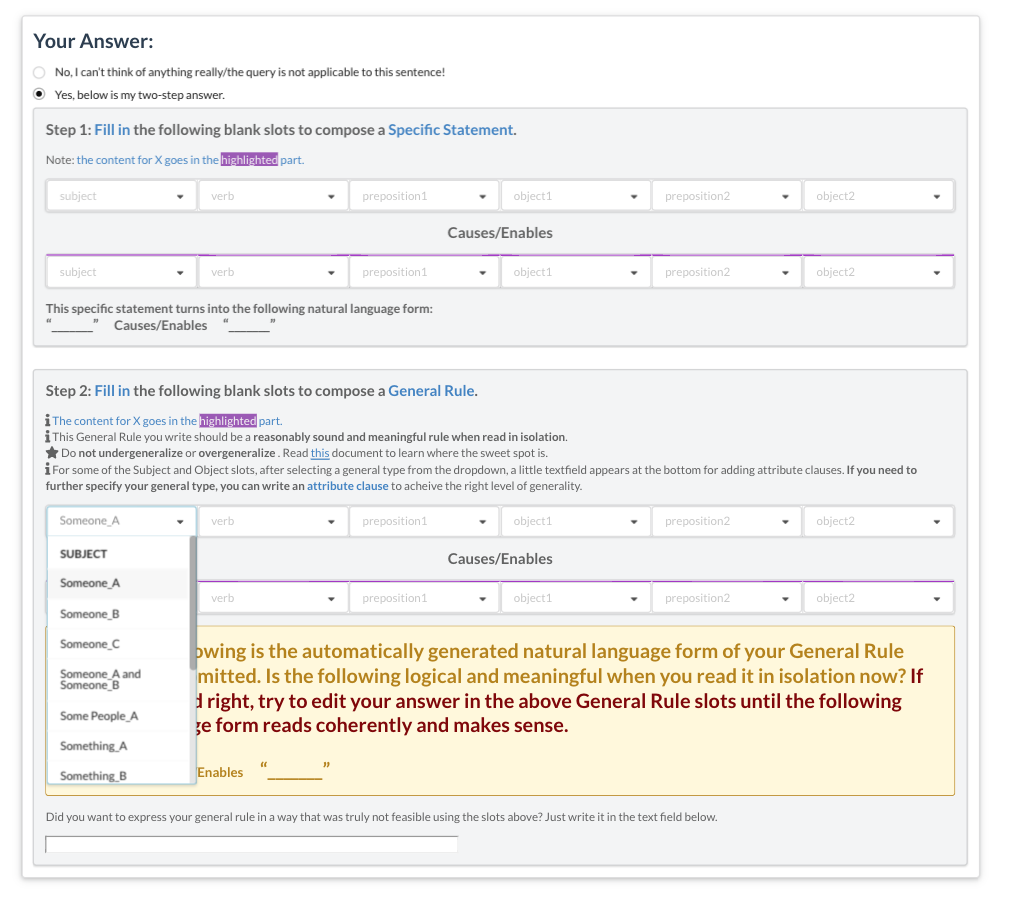

GLUCOSE: GeneraLized and COntextualized Story Explanations

Nasrin Mostafazadeh, Aditya Kalyanpur, Lori Moon, David Buchanan, Lauren Berkowitz, Or Biran, Jennifer Chu-Carroll