Data Weighted Training Strategies for Grammatical Error Correction

Jared Lichtarge, Chris Alberti, Shankar Kumar

NLP Applications Tacl Paper

You can open the pre-recorded video in a separate window.

Abstract:

Recent progress in the task of Grammatical Error Correction (GEC) has been driven by addressing data sparsity, both through new methods for generating large and noisy pretraining data and through the publication of small and higher-quality finetuning data in the BEA-2019 shared task. Building upon recent work in Neural Machine Translation (NMT), we make use of both kinds of data by deriving example-level scores on our large pretraining data based on a smaller, higher-quality dataset. In this work, we perform an empirical study to discover how to best incorporate delta-log-perplexity, a type of example scoring, into a training schedule for GEC. In doing so, we perform experiments that shed light on the function and applicability of delta-log-perplexity. Models trained on scored data achieve state-of-the-art results on common GEC test sets.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

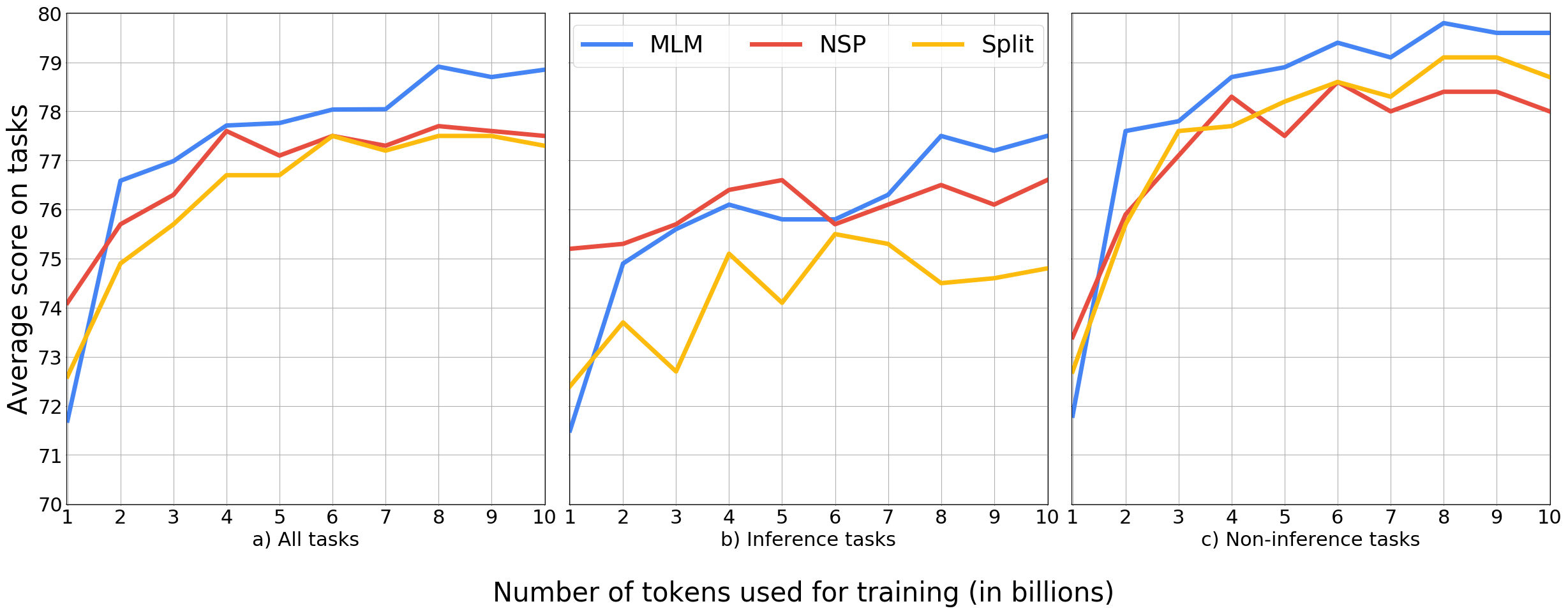

On the importance of pre-training data volume for compact language models

Vincent Micheli, Martin d'Hoffschmidt, François Fleuret,

Pronoun-Targeted Fine-tuning for NMT with Hybrid Losses

Prathyusha Jwalapuram, Shafiq Joty, Youlin Shen,

On the Sparsity of Neural Machine Translation Models

Yong Wang, Longyue Wang, Victor Li, Zhaopeng Tu,