A Joint Multiple Criteria Model in Transfer Learning for Cross-domain Chinese Word Segmentation

Kaiyu Huang, Degen Huang, Zhuang Liu, Fengran Mo

Phonology, Morphology and Word Segmentation (Long Paper)

Abstract:

Word-level information is important in natural language processing (NLP), especially for Chinese, owing to its high linguistic complexity. Chinese word segmentation (CWS) is therefore an essential step for downstream Chinese NLP tasks. Existing methods already achieve competitive performance on CWS when trained on large-scale annotated corpora. However, their accuracy drops dramatically on unsegmented text containing many out-of-vocabulary (OOV) words. In addition, there are many different segmentation criteria, each addressing the requirements of different downstream NLP tasks. Maintaining a separate model for each criterion makes the total number of parameters grow explosively. To this end, we propose a joint multiple-criteria model that shares all parameters, integrating the different segmentation criteria into one model. We also use a transfer learning method to improve performance on OOV words. We evaluate the proposed method through comprehensive experiments on multiple benchmark datasets (e.g., Bakeoff 2005, Bakeoff 2008 and SIGHAN 2010). Our method achieves state-of-the-art performance on all datasets. Importantly, it also shows competitive practicability and generalization ability for the CWS task.
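To make the two problems named in the abstract concrete, here is a minimal Python sketch, not the authors' implementation. It assumes CWS is cast as character-level tagging with the common B/M/E/S scheme, and that a criterion is signalled by a tag token prepended to the input; the abstract does not say how criteria are encoded, so that part is an assumption. The names `words_to_bmes`, `make_example`, `oov_rate` and the criterion labels `A` and `B` are hypothetical.

```python
def words_to_bmes(words):
    """Convert a segmented sentence (list of words) to per-character B/M/E/S tags."""
    tags = []
    for word in words:
        if len(word) == 1:
            tags.append("S")  # single-character word
        else:
            # Begin, zero or more Middle, End
            tags.extend(["B"] + ["M"] * (len(word) - 2) + ["E"])
    return tags


def make_example(words, criterion):
    """Build one training example for a single shared model.

    ASSUMPTION: the criterion is encoded as a special token placed before
    the characters; the abstract does not specify the encoding.
    Returns (input_tokens, character_tags).
    """
    chars = [ch for word in words for ch in word]
    return [f"<{criterion}>"] + chars, words_to_bmes(words)


def oov_rate(test_words, train_vocab):
    """Fraction of test word tokens that never occur in the training vocabulary."""
    if not test_words:
        return 0.0
    unseen = sum(1 for word in test_words if word not in train_vocab)
    return unseen / len(test_words)


# The same characters segmented under two hypothetical criteria, A and B.
tokens_a, tags_a = make_example(["北京大学"], "A")
tokens_b, tags_b = make_example(["北京", "大学"], "B")
```

Both examples contain the same four characters and differ only in the criterion token and the target tags, which is what lets a single set of parameters serve several criteria in this kind of setup. `oov_rate` gives a simple way to quantify how far a target-domain text departs from the training vocabulary.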

Similar Papers

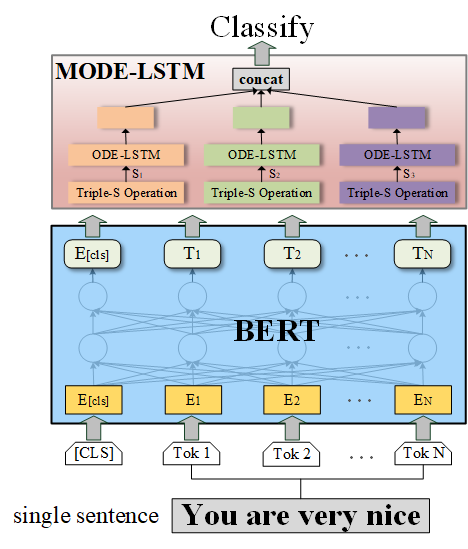

MODE-LSTM: A Parameter-efficient Recurrent Network with Multi-Scale for Sentence Classification

Qianli Ma, Zhenxi Lin, Jiangyue Yan, Zipeng Chen, Liuhong Yu

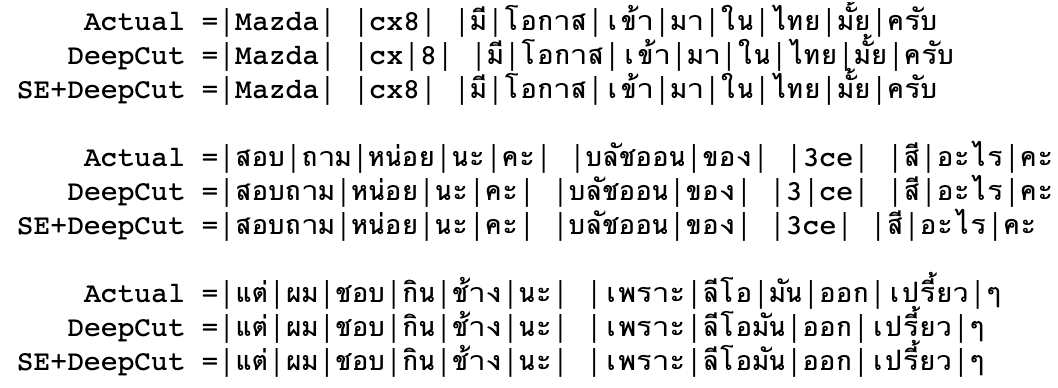

Domain Adaptation of Thai Word Segmentation Models using Stacked Ensemble

Peerat Limkonchotiwat, Wannaphong Phatthiyaphaibun, Raheem Sarwar, Ekapol Chuangsuwanich, Sarana Nutanong

Meta Fine-Tuning Neural Language Models for Multi-Domain Text Mining

Chengyu Wang, Minghui Qiu, Jun Huang, Xiaofeng He