Continuity of Topic, Interaction, and Query: Learning to Quote in Online Conversations

Lingzhi Wang, Jing Li, Xingshan Zeng, Haisong Zhang, Kam-Fai Wong

Computational Social Science and Social Media (Long Paper)

Abstract:

Quotations are crucial for successful explanation and persuasion in interpersonal communication. However, finding what to quote in a conversation is challenging for both humans and machines. This work studies automatic quotation generation in online conversations and explores how language consistency affects whether a quotation fits the given context. Here, we capture the contextual consistency of a quotation in terms of latent topics, interactions with the dialogue history, and coherence with the existing content of the query turn. Further, an encoder-decoder neural framework is employed to continue the context with a quotation via language generation. Experimental results on two large-scale datasets in English and Chinese demonstrate that our quotation generation model outperforms state-of-the-art models. Further analysis shows that topic, interaction, and query consistency all help the model learn how to quote in online conversations.

Connected Papers in EMNLP2020

Similar Papers

Cross Copy Network for Dialogue Generation

Changzhen Ji, Xin Zhou, Yating Zhang, Xiaozhong Liu, Changlong Sun, Conghui Zhu, Tiejun Zhao

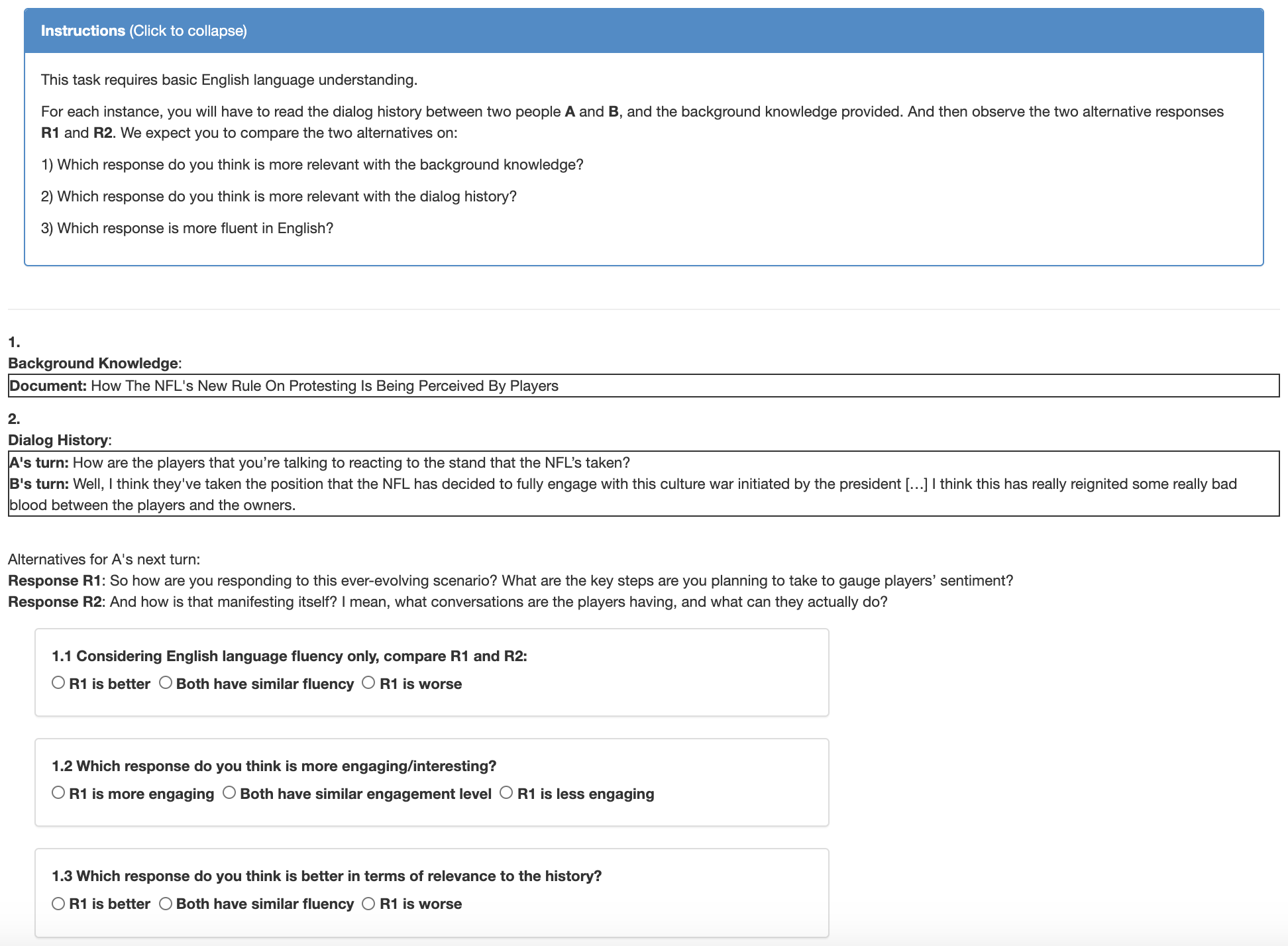

Interview: Large-scale Modeling of Media Dialog with Discourse Patterns and Knowledge Grounding

Bodhisattwa Prasad Majumder, Shuyang Li, Jianmo Ni, Julian McAuley

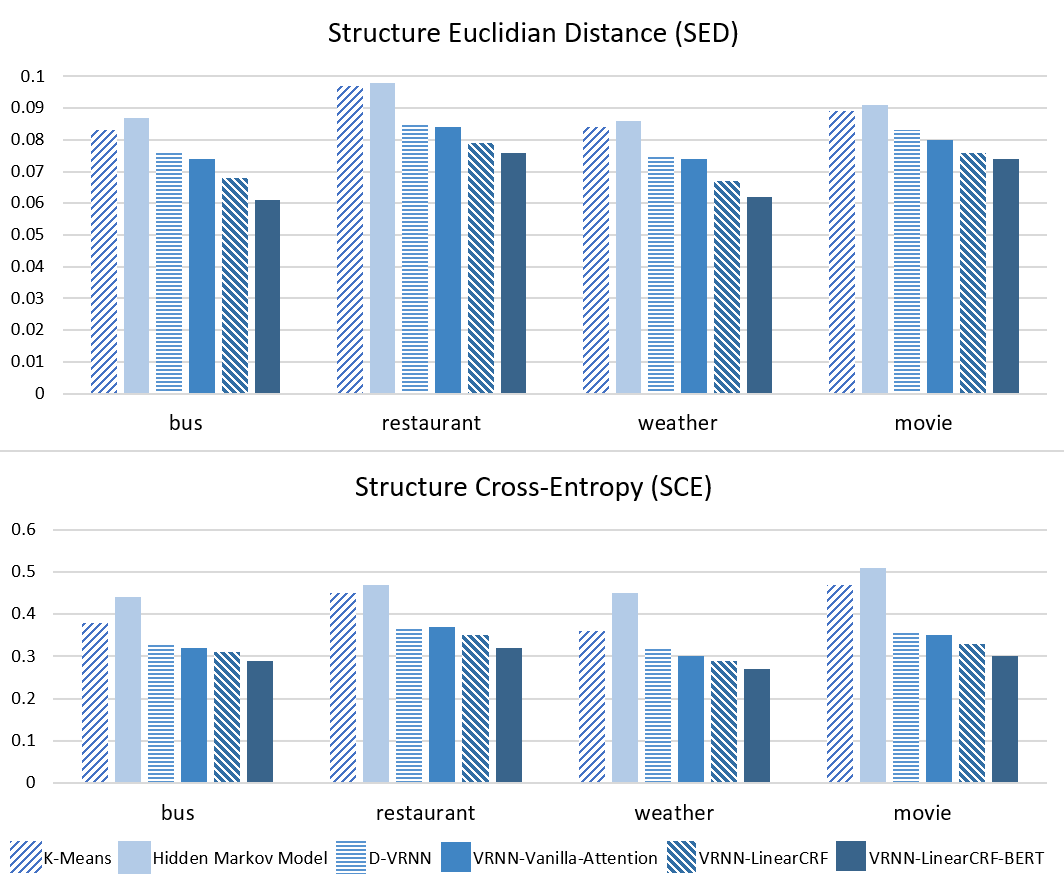

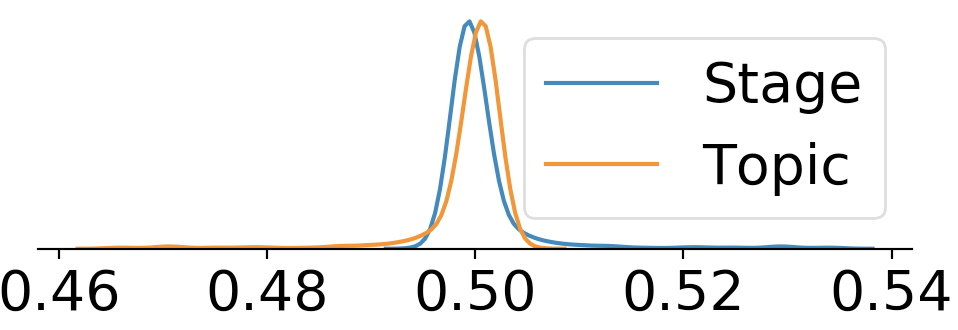

Structured Attention for Unsupervised Dialogue Structure Induction

Liang Qiu, Yizhou Zhao, Weiyan Shi, Yuan Liang, Feng Shi, Tao Yuan, Zhou Yu, Song-Chun Zhu