Modularized Syntactic Neural Networks for Sentence Classification

Haiyan Wu, Ying Liu, Shaoyun Shi

Semantics: Sentence-level Semantics, Textual Inference and Other Areas (Short Paper)

Abstract:

This paper focuses on tree-based modeling for the sentence classification task. In existing work, aggregation over a syntax tree usually considers only the local information of sub-trees. In contrast, our proposed Modularized Syntactic Neural Network (MSNN) uses the syntax category labels and the global context, in addition to the local information, when modeling sub-trees. In MSNN, each node of a syntax tree is modeled by a label-related syntax module. Each syntax module aggregates the outputs of lower-level modules, and the root module finally provides the sentence representation. We design a tree-parallel mini-batch strategy for efficient training and prediction. Experimental results on four benchmark datasets show that MSNN significantly outperforms previous state-of-the-art tree-based methods on the sentence classification task.
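The bottom-up composition described in the abstract can be illustrated with a small, untrained sketch. Everything concrete here is an assumption made for illustration and is not taken from the paper: the names `Node`, `SyntaxModule`, and `ToyMSNN`, the hidden size `DIM`, mean pooling over child outputs, the `tanh` layer, and the use of the averaged word embeddings as the "global context". It only shows the general idea of one module per syntax label, with the root module's output serving as the sentence representation.

```python
import math
import random
from dataclasses import dataclass, field
from typing import List, Optional

DIM = 4  # hypothetical hidden size, chosen only for this sketch


@dataclass
class Node:
    label: str                       # syntax category (e.g. "NP") or POS tag for a leaf
    word: Optional[str] = None       # set only on leaf nodes
    children: List["Node"] = field(default_factory=list)


def mean(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def leaf_words(node):
    """Collect the words of a tree in left-to-right order."""
    if node.word is not None:
        return [node.word]
    return [w for child in node.children for w in leaf_words(child)]


class SyntaxModule:
    """Assumed module form: h = tanh(W [mean(child outputs) ; global context])."""

    def __init__(self, rng):
        self.W = [[rng.uniform(-0.5, 0.5) for _ in range(2 * DIM)] for _ in range(DIM)]

    def __call__(self, pooled, context):
        x = pooled + context  # list concatenation, length 2 * DIM
        return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in self.W]


class ToyMSNN:
    """Keeps one SyntaxModule per syntax label, created lazily with random weights."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.modules = {}
        self.embeddings = {}

    def _module(self, label):
        if label not in self.modules:
            self.modules[label] = SyntaxModule(self.rng)
        return self.modules[label]

    def _embed(self, word):
        if word not in self.embeddings:
            self.embeddings[word] = [self.rng.uniform(-1, 1) for _ in range(DIM)]
        return self.embeddings[word]

    def encode(self, root):
        # Assumed global context: the average embedding of all words in the sentence.
        context = mean([self._embed(w) for w in leaf_words(root)])

        def visit(node):
            if node.word is not None:
                inputs = [self._embed(node.word)]
            else:
                inputs = [visit(child) for child in node.children]
            # The module is selected by the node's syntax label.
            return self._module(node.label)(mean(inputs), context)

        return visit(root)  # output of the root module = sentence representation


# (S (NP (DT the) (NN movie)) (VP (VBD was) (JJ great)))
tree = Node("S", children=[
    Node("NP", children=[Node("DT", "the"), Node("NN", "movie")]),
    Node("VP", children=[Node("VBD", "was"), Node("JJ", "great")]),
])
model = ToyMSNN(seed=0)
sentence_vec = model.encode(tree)
```

In a trained model, `sentence_vec` would be passed to a classifier layer. Because nodes with the same label share one module, parameters are tied across all occurrences of a syntax category in this sketch.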

Connected Papers in EMNLP2020

Similar Papers

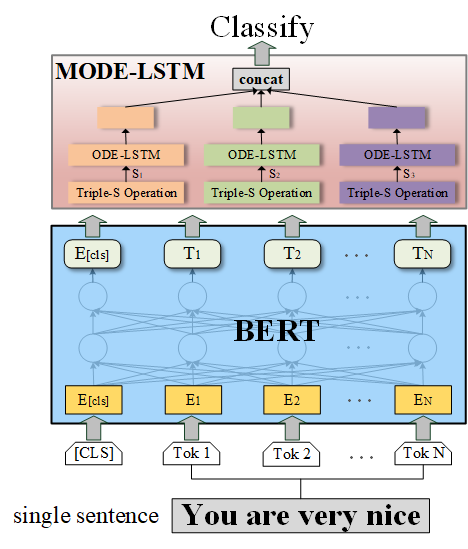

MODE-LSTM: A Parameter-efficient Recurrent Network with Multi-Scale for Sentence Classification

Qianli Ma, Zhenxi Lin, Jiangyue Yan, Zipeng Chen, Liuhong Yu

Modeling Content Importance for Summarization with Pre-trained Language Models

Liqiang Xiao, Lu Wang, Hao He, Yaohui Jin

Learning Explainable Linguistic Expressions with Neural Inductive Logic Programming for Sentence Classification

Prithviraj Sen, Marina Danilevsky, Yunyao Li, Siddhartha Brahma, Matthias Boehm, Laura Chiticariu, Rajasekar Krishnamurthy