Queens are Powerful too: Mitigating Gender Bias in Dialogue Generation

Emily Dinan, Angela Fan, Adina Williams, Jack Urbanek, Douwe Kiela, Jason Weston

Dialog and Interactive Systems Long Paper

You can open the pre-recorded video in a separate window.

Abstract:

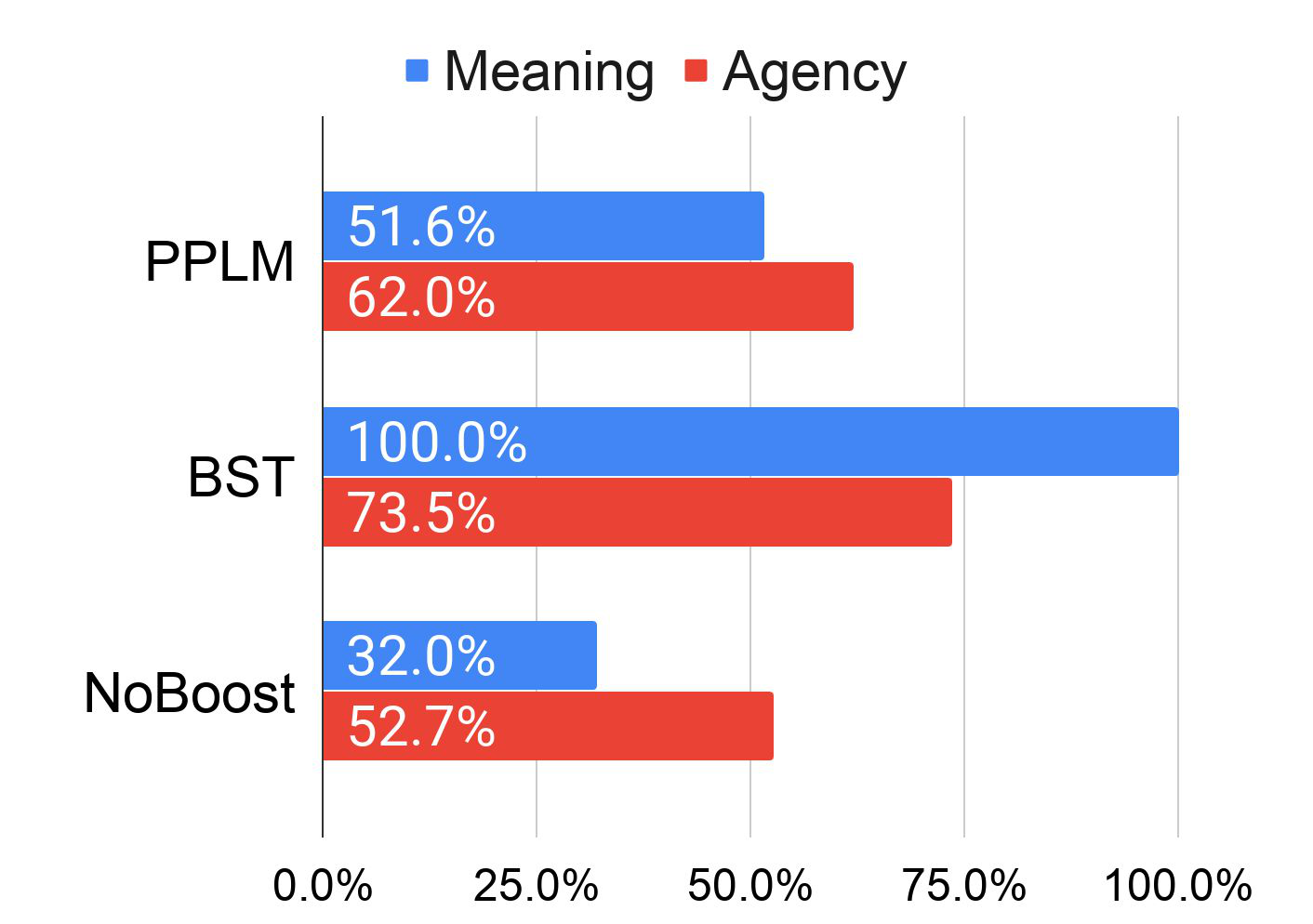

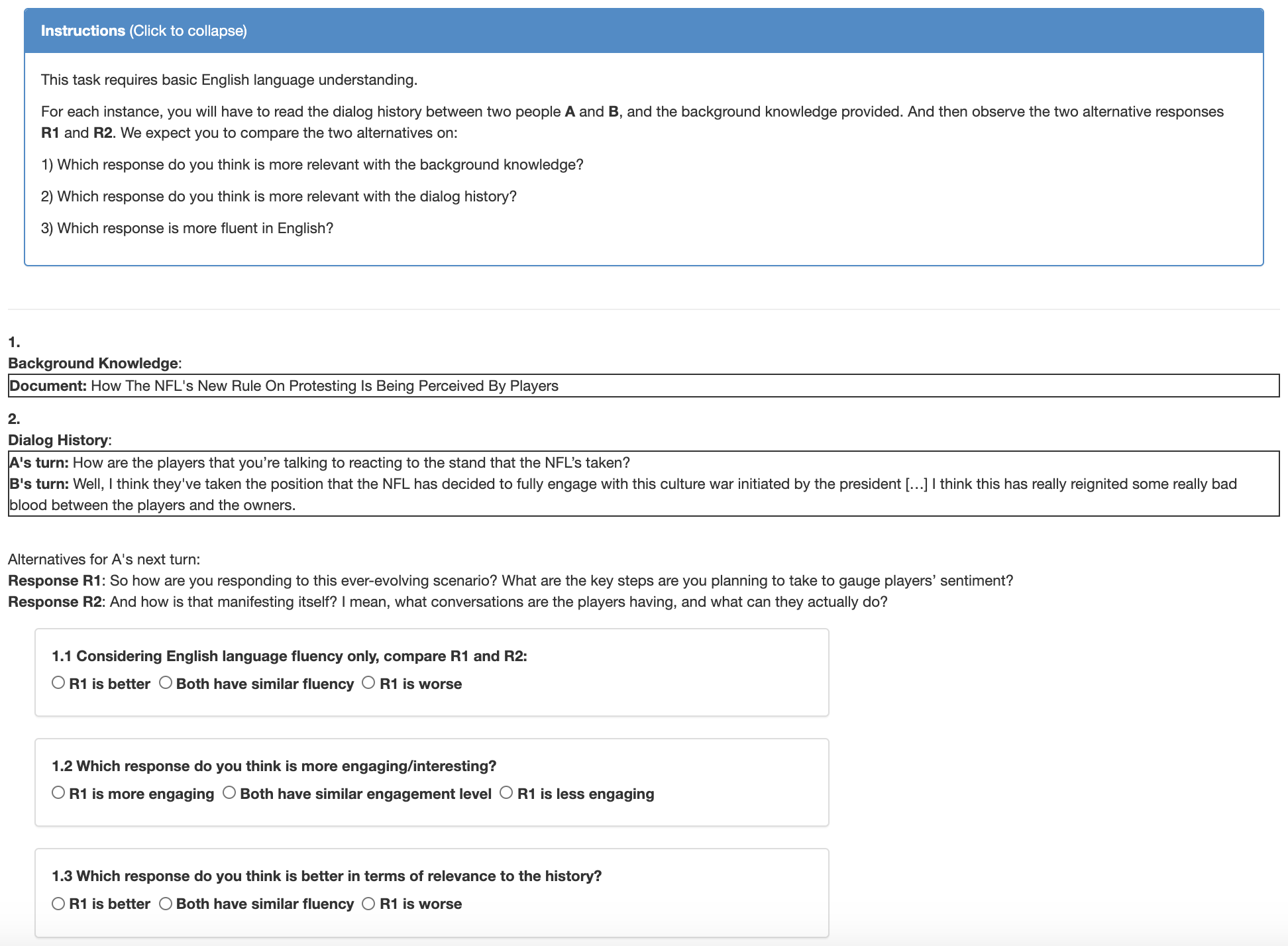

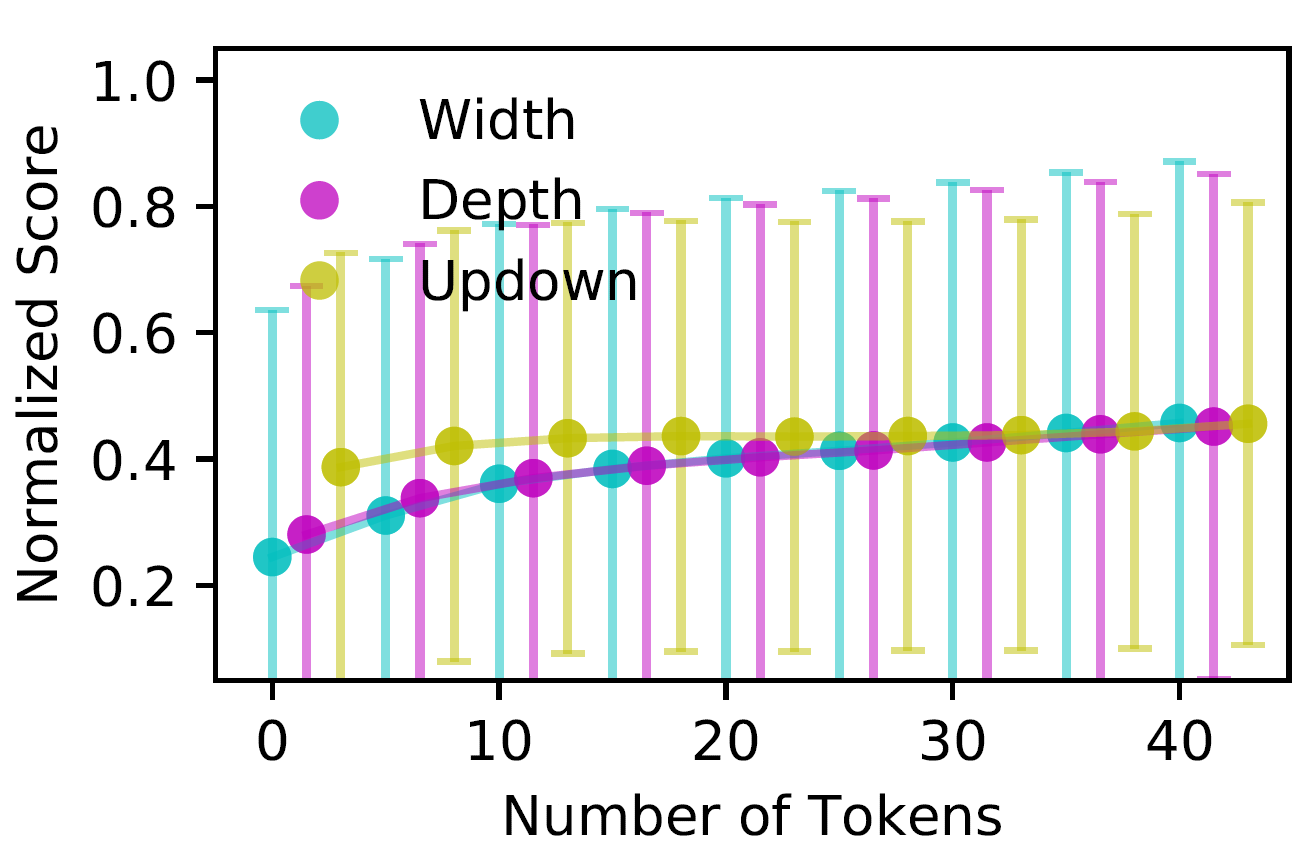

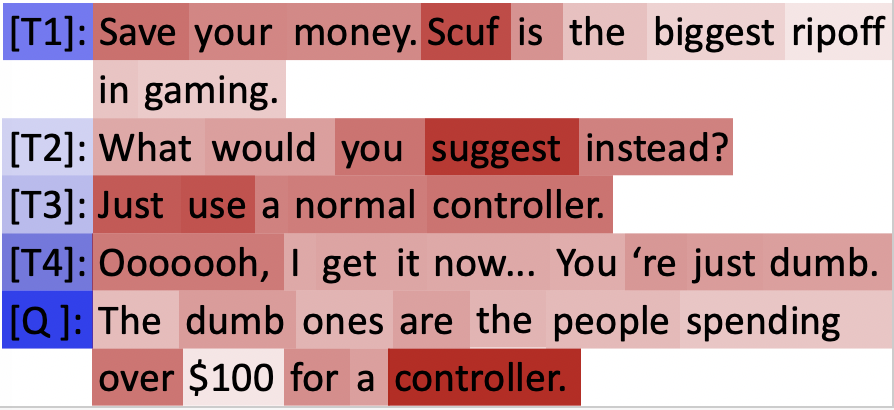

Social biases present in data are often directly reflected in the predictions of models trained on that data. We analyze gender bias in dialogue data, and examine how this bias is not only replicated, but is also amplified in subsequent generative chit-chat dialogue models. We measure gender bias in six existing dialogue datasets before selecting the most biased one, the multi-player text-based fantasy adventure dataset LIGHT, as a testbed for bias mitigation techniques. We consider three techniques to mitigate gender bias: counterfactual data augmentation, targeted data collection, and bias controlled training. We show that our proposed techniques mitigate gender bias by balancing the genderedness of generated dialogue utterances, and find that they are particularly effective in combination. We evaluate model performance with a variety of quantitative methods---including the quantity of gendered words, a dialogue safety classifier, and human assessments---all of which show that our models generate less gendered, but equally engaging chit-chat responses.

NOTE: Video may display a random order of authors.

Correct author list is at the top of this page.

Connected Papers in EMNLP2020

Similar Papers

Interview: Large-scale Modeling of Media Dialog with Discourse Patterns and Knowledge Grounding

Bodhisattwa Prasad Majumder, Shuyang Li, Jianmo Ni, Julian McAuley,

Dialogue Response Ranking Training with Large-Scale Human Feedback Data

Xiang Gao, Yizhe Zhang, Michel Galley, Chris Brockett, Bill Dolan,

Continuity of Topic, Interaction, and Query: Learning to Quote in Online Conversations

Lingzhi Wang, Jing Li, Xingshan Zeng, Haisong Zhang, Kam-Fai Wong,

PowerTransformer: Unsupervised Controllable Revision for Biased Language Correction

Xinyao Ma, Maarten Sap, Hannah Rashkin, Yejin Choi,