Dialogue Response Ranking Training with Large-Scale Human Feedback Data

Xiang Gao, Yizhe Zhang, Michel Galley, Chris Brockett, Bill Dolan

Dialog and Interactive Systems Long Paper

Abstract:

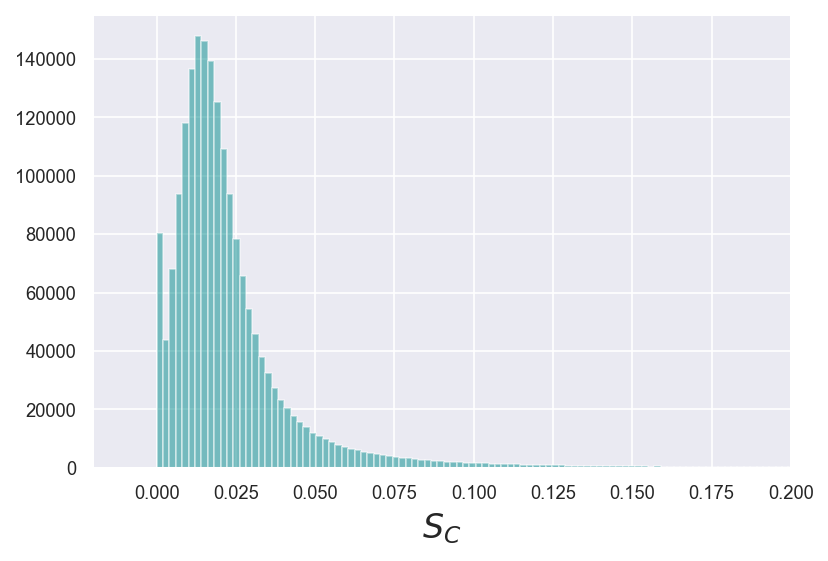

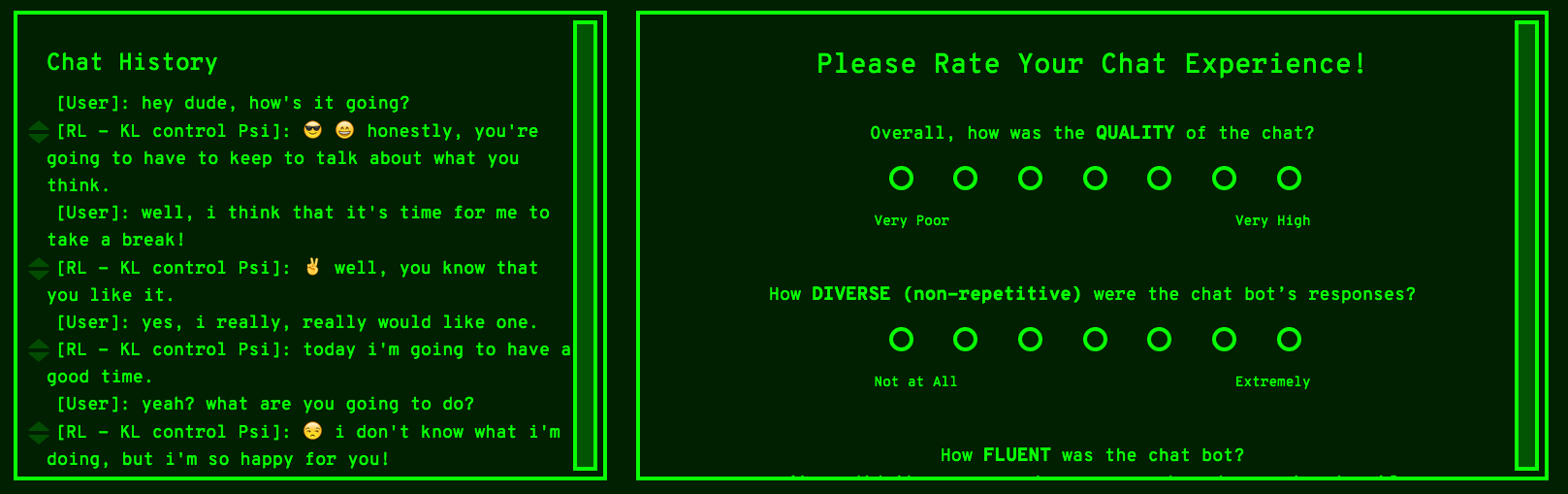

Existing open-domain dialog models are generally trained to minimize the perplexity of target human responses. However, some human replies are more engaging than others and prompt more follow-up interactions. Current conversational models are increasingly capable of producing turns that are context-relevant, but to produce compelling agents, these models need to be able to predict and optimize for turns that are genuinely engaging. We leverage social media feedback data (number of replies and upvotes) to build a large-scale training dataset for feedback prediction. To alleviate possible distortion between the feedback and engagingness, we convert the ranking problem to a comparison of response pairs that involve few confounding factors. We trained DialogRPT, a set of GPT-2-based models, on 133M pairs of human feedback data, and the resulting ranker outperformed several baselines. In particular, our ranker outperforms the conventional dialog perplexity baseline by a large margin on predicting Reddit feedback. Finally, we combine the feedback prediction models and a human-like scoring model to rank machine-generated dialog responses. Crowd-sourced human evaluation shows that our ranking method correlates better with real human preferences than baseline models.
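The pairwise reformulation mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state the exact loss or pairing rules, so everything here is an assumption made for illustration: the helper names `build_pairs`, `pairwise_preference_prob`, and `pairwise_loss` are hypothetical, the logistic loss on the score gap is a common choice rather than the authors' confirmed method, and the scores would in practice come from a learned model rather than being passed in by hand.

```python
import math


def build_pairs(replies):
    """Turn replies to the SAME context into (more_feedback, less_feedback) pairs.

    `replies` is a list of (text, feedback_count) tuples. Ties are skipped
    because they carry no preference signal. (Illustrative pairing rule only.)
    """
    pairs = []
    for i, (text_a, fb_a) in enumerate(replies):
        for text_b, fb_b in replies[i + 1:]:
            if fb_a > fb_b:
                pairs.append((text_a, text_b))
            elif fb_b > fb_a:
                pairs.append((text_b, text_a))
    return pairs


def pairwise_preference_prob(score_pos, score_neg):
    """Probability that the higher-feedback reply wins, as a logistic of the score gap."""
    return 1.0 / (1.0 + math.exp(-(score_pos - score_neg)))


def pairwise_loss(score_pos, score_neg):
    """Negative log-likelihood of ranking the higher-feedback reply first."""
    return -math.log(pairwise_preference_prob(score_pos, score_neg))


# Hypothetical replies to one context, with made-up feedback counts.
replies = [("reply a", 3), ("reply b", 1), ("reply c", 3)]
pairs = build_pairs(replies)
```

Comparing only replies that share a context is one way to limit confounding factors: both replies in a pair face the same post, so a difference in feedback is less likely to reflect how popular the context itself was.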

Connected Papers in EMNLP2020

Similar Papers

The World is Not Binary: Learning to Rank with Grayscale Data for Dialogue Response Selection

Zibo Lin, Deng Cai, Yan Wang, Xiaojiang Liu, Haitao Zheng, Shuming Shi

Dialogue Distillation: Open-Domain Dialogue Augmentation Using Unpaired Data

Rongsheng Zhang, Yinhe Zheng, Jianzhi Shao, Xiaoxi Mao, Yadong Xi, Minlie Huang

Human-centric dialog training via offline reinforcement learning

Natasha Jaques, Judy Hanwen Shen, Asma Ghandeharioun, Craig Ferguson, Agata Lapedriza, Noah Jones, Shixiang Gu, Rosalind Picard

Filtering Noisy Dialogue Corpora by Connectivity and Content Relatedness

Reina Akama, Sho Yokoi, Jun Suzuki, Kentaro Inui