The Thieves on Sesame Street are Polyglots - Extracting Multilingual Models from Monolingual APIs

Nitish Shirish Keskar, Bryan McCann, Caiming Xiong, Richard Socher

Machine Learning for NLP Short Paper

Abstract:

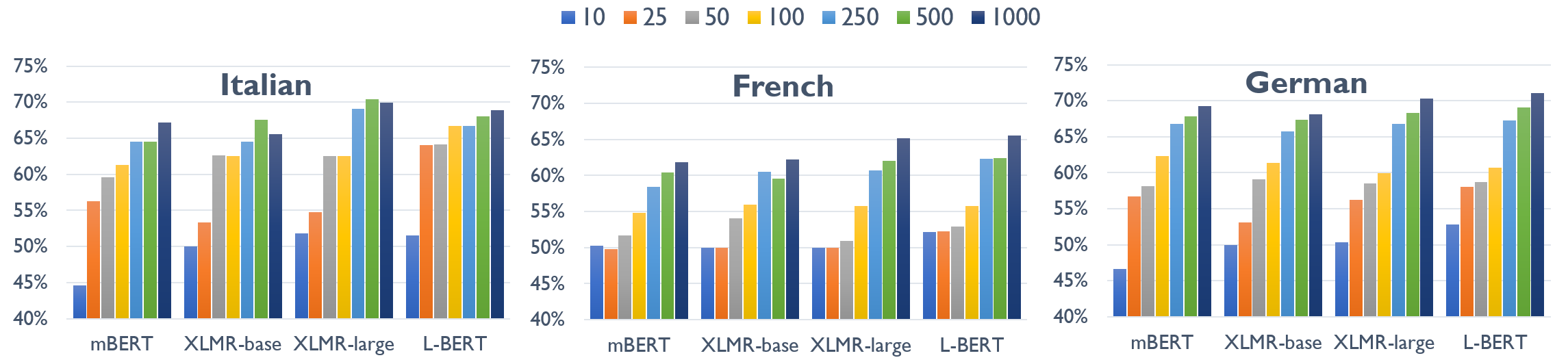

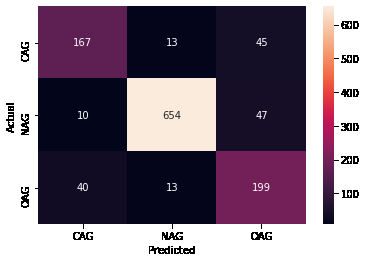

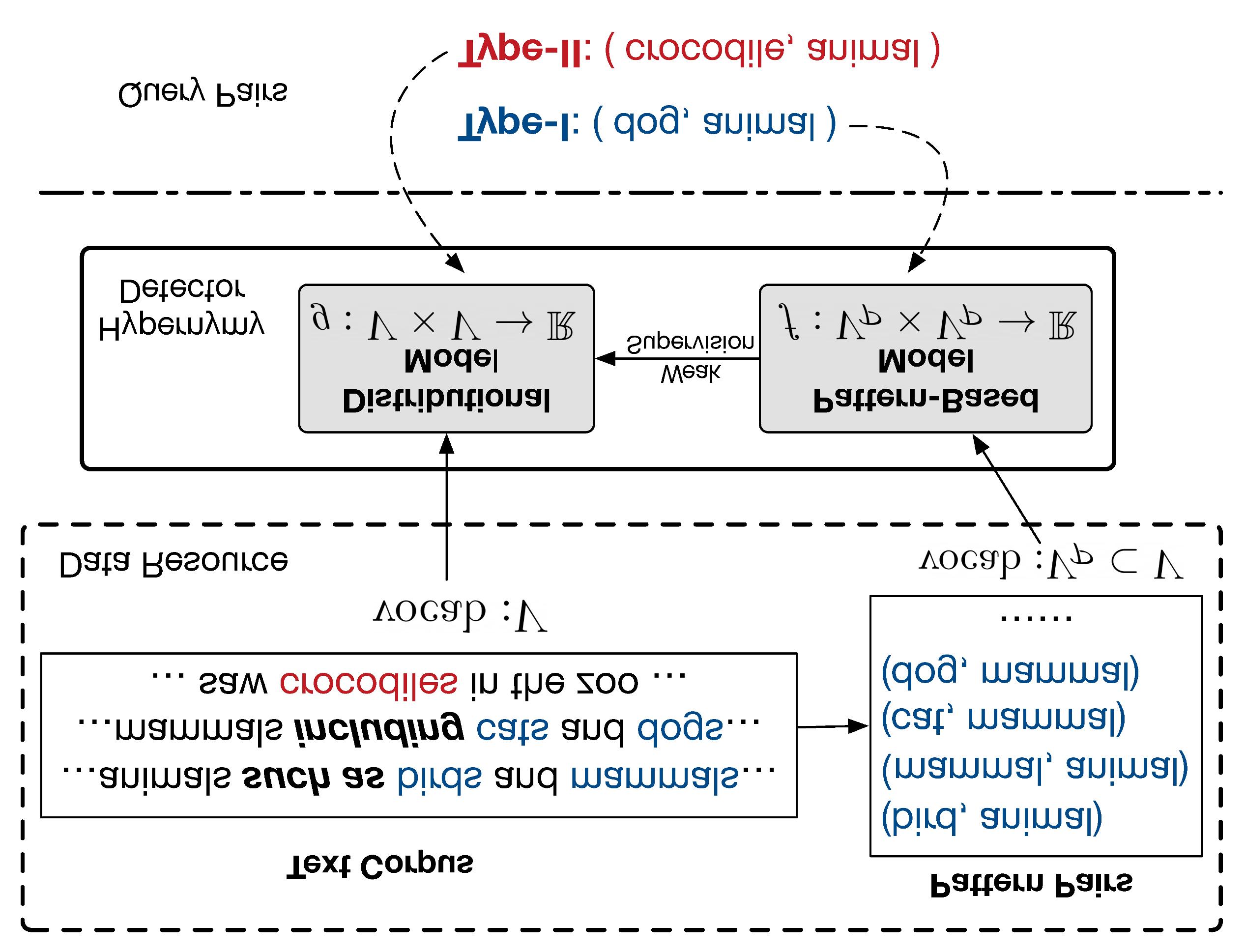

Pre-training in natural language processing makes it easier for an adversary with only query access to a victim model to reconstruct a local copy of the victim by training with gibberish input data paired with the victim's labels for that data. We discover that this extraction process extends to local copies initialized from a pre-trained, multilingual model while the victim remains monolingual. The extracted model learns the task from the monolingual victim, but it generalizes far better than the victim to several other languages. This is done without ever showing the multilingual, extracted model a well-formed input in any of the languages for the target task. We also demonstrate that a few real examples can greatly improve performance, and we analyze how these results shed light on how such extraction methods succeed.
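The query-collection step described in the abstract can be illustrated with a minimal sketch. It is not the authors' code: `toy_victim`, `VOCAB`, `make_gibberish_query`, and `build_transfer_set` are hypothetical names, and the keyword-based victim is only a stand-in for a real monolingual model behind an API. The sketch shows how an adversary with query access alone might pair random word sequences with the victim's labels.

```python
import random

# Hypothetical vocabulary; a real attack would sample from a much larger word list.
VOCAB = ["good", "bad", "great", "awful", "love", "hate",
         "table", "river", "seven", "quickly"]


def make_gibberish_query(vocab, length, rng):
    """Sample words uniformly at random; the result is not a well-formed sentence."""
    return " ".join(rng.choice(vocab) for _ in range(length))


def toy_victim(text):
    """Hypothetical stand-in for a black-box API that returns only a label."""
    positive = {"good", "great", "love"}
    negative = {"bad", "awful", "hate"}
    words = text.split()
    score = sum(w in positive for w in words) - sum(w in negative for w in words)
    return "positive" if score >= 0 else "negative"


def build_transfer_set(victim, vocab, n_queries, length=8, seed=0):
    """Query the victim with gibberish and keep (query, victim_label) pairs."""
    rng = random.Random(seed)
    queries = [make_gibberish_query(vocab, length, rng) for _ in range(n_queries)]
    return [(query, victim(query)) for query in queries]


transfer_set = build_transfer_set(toy_victim, VOCAB, n_queries=100)
```

In the setting the abstract describes, pairs like these would then be used to train a local copy initialized from a pre-trained multilingual model. That training step is omitted here because it depends on the model and library chosen.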

Connected Papers in EMNLP2020

Similar Papers

XL-WiC: A Multilingual Benchmark for Evaluating Semantic Contextualization

Alessandro Raganato, Tommaso Pasini, Jose Camacho-Collados, Mohammad Taher Pilehvar

Multilingual Offensive Language Identification with Cross-lingual Embeddings

Tharindu Ranasinghe, Marcos Zampieri

When Hearst Is not Enough: Improving Hypernymy Detection from Corpus with Distributional Models

Changlong Yu, Jialong Han, Peifeng Wang, Yangqiu Song, Hongming Zhang, Wilfred Ng, Shuming Shi

X-FACTR: Multilingual Factual Knowledge Retrieval from Pretrained Language Models

Zhengbao Jiang, Antonios Anastasopoulos, Jun Araki, Haibo Ding, Graham Neubig