A matter of framing: The impact of linguistic formalism on probing results

Ilia Kuznetsov, Iryna Gurevych

Interpretability and Analysis of Models for NLP, Long Paper

Abstract:

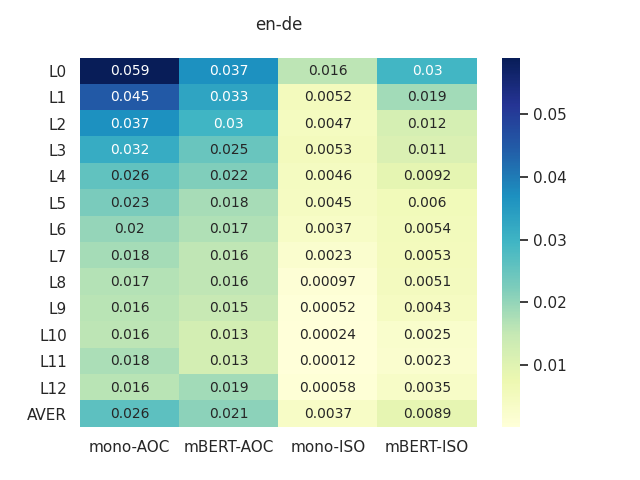

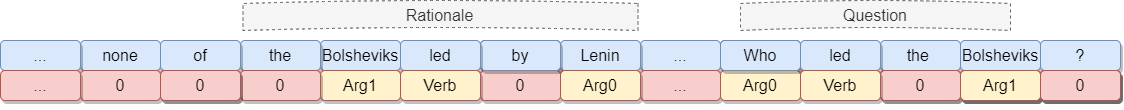

Deep pre-trained contextualized encoders like BERT demonstrate remarkable performance on a range of downstream tasks. A recent line of research in probing investigates the linguistic knowledge implicitly learned by these models during pre-training. While most work in probing operates on the task level, linguistic tasks are rarely uniform and can be represented in a variety of formalisms. Any linguistics-based probing study thereby inevitably commits to the formalism used to annotate the underlying data. Can the choice of formalism affect probing results? To investigate, we conduct an in-depth cross-formalism layer probing study in role semantics. We find linguistically meaningful differences in the encoding of semantic role- and proto-role information by BERT depending on the formalism and demonstrate that layer probing can detect subtle differences between the implementations of the same linguistic formalism. Our results suggest that linguistic formalism is an important dimension in probing studies, along with the commonly used cross-task and cross-lingual experimental settings.

Connected Papers in EMNLP2020

Similar Papers

Probing Pretrained Language Models for Lexical Semantics

Ivan Vulić, Edoardo Maria Ponti, Robert Litschko, Goran Glavaš, Anna Korhonen

Syntactic Structure Distillation Pretraining for Bidirectional Encoders

Adhiguna Kuncoro, Lingpeng Kong, Daniel Fried, Dani Yogatama, Laura Rimell, Chris Dyer, Phil Blunsom

Compositional and Lexical Semantics in RoBERTa, BERT and DistilBERT: A Case Study on CoQA

Ieva Staliūnaitė, Ignacio Iacobacci

Pareto Probing: Trading Off Accuracy for Complexity

Tiago Pimentel, Naomi Saphra, Adina Williams, Ryan Cotterell