Active Learning for BERT: An Empirical Study

Liat Ein-Dor, Alon Halfon, Ariel Gera, Eyal Shnarch, Lena Dankin, Leshem Choshen, Marina Danilevsky, Ranit Aharonov, Yoav Katz, Noam Slonim

Machine Learning for NLP Long Paper

Abstract:

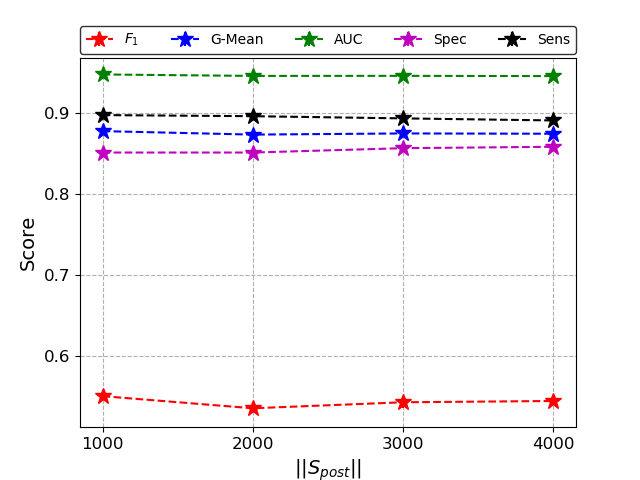

Real-world scenarios present a challenge for text classification, since labels are usually expensive and the data is often characterized by class imbalance. Active Learning (AL) is a ubiquitous paradigm for coping with data scarcity. Pre-trained NLP models, and BERT in particular, have recently received massive attention due to their outstanding performance on various NLP tasks. However, the use of AL with deep pre-trained models has so far received little consideration. Here, we present a large-scale empirical study of active learning techniques for BERT-based classification, covering a diverse set of AL strategies and datasets. We focus on practical scenarios of binary text classification in which the annotation budget is very small and the data is often skewed. Our results demonstrate that AL can boost BERT performance, especially in the most realistic scenario, in which the initial set of labeled examples is created using keyword-based queries, resulting in a biased sample of the minority class. We release our research framework, aiming to facilitate future research along the lines explored here.
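To make the setting in the abstract concrete, here is a minimal sketch of two steps it mentions: building a seed set with a keyword query, then choosing the next batch to annotate. The selection rule shown is generic least-confidence uncertainty sampling, used here only as one illustrative AL strategy; it is not taken from the authors' released framework. The function names `keyword_seed` and `least_confidence_batch`, the toy texts, and the hard-coded probabilities (standing in for the positive-class outputs of a fine-tuned BERT classifier) are all assumptions made for illustration.

```python
from typing import List, Sequence


def keyword_seed(texts: Sequence[str], keywords: Sequence[str], size: int) -> List[int]:
    """Return indices of up to `size` texts containing any keyword (case-insensitive)."""
    lowered = [k.lower() for k in keywords]
    hits = [i for i, t in enumerate(texts) if any(k in t.lower() for k in lowered)]
    return hits[:size]


def least_confidence_batch(
    pos_probs: Sequence[float], labeled: Sequence[int], batch_size: int
) -> List[int]:
    """Pick the unlabeled examples whose positive-class probability is closest to 0.5."""
    taken = set(labeled)
    candidates = [i for i in range(len(pos_probs)) if i not in taken]
    # For a binary classifier, the least confident predictions sit nearest 0.5.
    candidates.sort(key=lambda i: abs(pos_probs[i] - 0.5))
    return candidates[:batch_size]


# Toy pool; in practice this would be the unlabeled corpus.
texts = [
    "great refund policy",
    "the weather is nice",
    "refund took weeks",
    "I like trains",
    "no complaints here",
]

# Keyword query biased toward the (hypothetical) minority class.
seed = keyword_seed(texts, ["refund"], size=2)

# Hypothetical classifier outputs; a real run would obtain these from the model.
pos_probs = [0.9, 0.48, 0.8, 0.1, 0.55]
batch = least_confidence_batch(pos_probs, labeled=seed, batch_size=2)

print(seed)   # indices chosen by the keyword query
print(batch)  # indices to send for annotation next
```

In a full loop, the newly labeled batch would be added to the training set, the classifier retrained, and the selection repeated until the annotation budget is spent.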

Connected Papers in EMNLP 2020

Similar Papers

Cold-start Active Learning through Self-supervised Language Modeling

Michelle Yuan, Hsuan-Tien Lin, Jordan Boyd-Graber

SetConv: A New Approach for Learning from Imbalanced Data

Yang Gao, Yi-Fan Li, Yu Lin, Charu Aggarwal, Latifur Khan

Adversarial Self-Supervised Data-Free Distillation for Text Classification

Xinyin Ma, Yongliang Shen, Gongfan Fang, Chen Chen, Chenghao Jia, Weiming Lu

New Protocols and Negative Results for Textual Entailment Data Collection

Samuel R. Bowman, Jennimaria Palomaki, Livio Baldini Soares, Emily Pitler